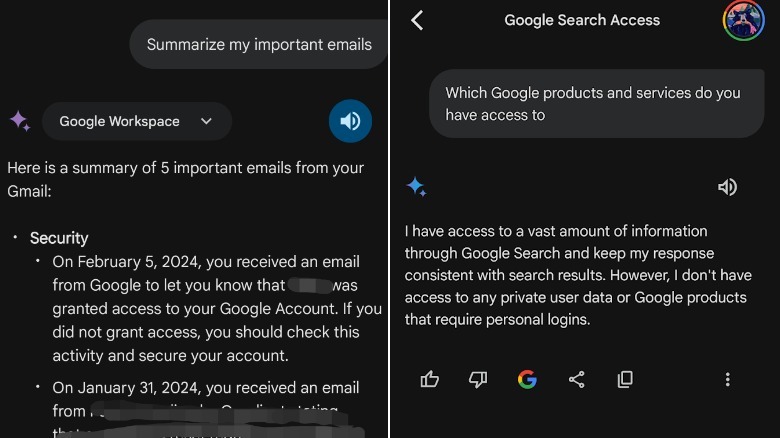

Some of the most perplexing responses I got from Gemini came when I tried to use the Google services it integrates with. With all the extensions turned on in settings, I asked it which Google products and services it has access to. Its response was a blatant lie: the chatbot confidently told me, “I don’t have access to any private user data or Google products that require personal logins.” This was moments after it had successfully combed through my emails to summarize my inbox.

Gemini, like any other current-generation AI, doesn’t actually know what it knows. It may speak in the first person or display other hallmarks of cognizance, but it is merely spitting out words one after the other based on the statistical likelihood that those words make sense together. As physicist and AI expert Dan McQuillan wrote in a blog post, “Despite the impressive technical ju-jitsu of transformer models and the billions of parameters they learn, it’s still a computational guessing game … If a generated sentence makes sense to you, the reader, it means the mathematical model has made sufficiently good guess to pass your sense-making filter. The language model has no idea what it’s talking about because it has no idea about anything at all.”

Google is showing its ambitions with Gemini, but when those ambitions dash against the rocks of reality, the illusion falls apart. Precisely because Gemini is the most capable consumer AI model, it shows that consumer AI is still an undercooked alpha product. Unless you love beta testing, you should avoid using Gemini, or any other current-generation AI, as your phone’s smart assistant.