When it announced the new Copilot key for PC keyboards last month, Microsoft declared 2024 “the year of the AI PC.” On one level, this is just an aspirational PR-friendly proclamation, meant to show investors that Microsoft intends to keep pushing the AI hype cycle that has put it in competition with Apple for the title of most valuable publicly traded company.

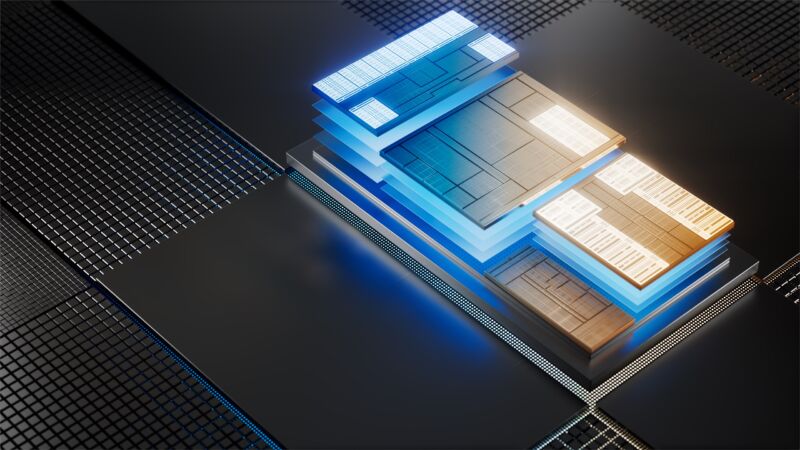

But on a technical level, it is true that PCs made and sold in 2024 and beyond will generally include AI and machine-learning processing capabilities that older PCs don’t. The key addition is the neural processing unit (NPU), a specialized block on recent high-end Intel and AMD CPUs that can run some kinds of generative AI and machine-learning workloads more quickly (or while using less power) than the CPU or GPU could.

Qualcomm’s Windows PCs were some of the first to include an NPU, since the Arm processors used in most smartphones have included some kind of machine-learning acceleration for a few years now (Apple’s M-series chips for Macs all have them, too, going all the way back to 2020’s M1). But the Arm version of Windows is an insignificantly tiny sliver of the entire PC market; x86 PCs with Intel’s Core Ultra chips, AMD’s Ryzen 7040/8040-series laptop CPUs, or the Ryzen 8000G desktop CPUs will be many mainstream PC users’ first exposure to this kind of hardware.

Right now, even if your PC has an NPU in it, Windows can’t use it for much, aside from webcam background blurring and a handful of other video effects. But that’s slowly going to change, and part of that will be making it relatively easy for developers to create NPU-agnostic apps in the same way that PC game developers currently make GPU-agnostic games.

The gaming example is instructive, because that’s basically how Microsoft is approaching DirectML, its API for machine-learning operations. Though up until now it has mostly been used to run these AI workloads on GPUs, Microsoft announced last week that it was adding DirectML support for Intel’s Meteor Lake NPUs in a developer preview, starting in DirectML 1.13.1 and ONNX Runtime 1.17.

Though the preview will only run an unspecified “subset of machine learning models that have been targeted for support” and some “may not run at all or may have high latency or low accuracy,” it opens the door for more third-party apps to start taking advantage of built-in NPUs. Intel says that Samsung is using Intel’s NPU and DirectML for facial recognition features in its photo gallery app, something that Apple also uses its Neural Engine for in macOS and iOS.

The benefits can be substantial, compared to running those workloads on a GPU or CPU.

“The NPU, at least in Intel land, will largely be used for power efficiency reasons,” Intel Senior Director of Technical Marketing Robert Hallock told Ars in an interview about Meteor Lake’s capabilities. “Camera segmentation, this whole background blurring thing… moving that to the NPU saves about 30 to 50 percent power versus running it elsewhere.”

Intel and Microsoft are both working toward a model where NPUs are treated pretty much like GPUs are today: developers generally target DirectX rather than a specific graphics card manufacturer or GPU architecture, and new features, one-off bug fixes, and performance improvements can all be addressed via GPU driver updates. Some GPUs run specific games better than others, and developers can choose to spend more time optimizing for Nvidia cards or AMD cards, but generally the model is hardware agnostic.

Similarly, Intel is already offering GPU-style driver updates for its NPUs. And Hallock says that Windows already essentially recognizes the NPU as “a graphics card with no rendering capability.”
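
For a rough sense of what the hardware-agnostic model described above looks like from a developer's side, here is a minimal Python sketch using ONNX Runtime. It asks for the DirectML execution provider by name and falls back to the CPU, without naming a chip vendor. The helper names `pick_providers` and `make_session` and the file `model.onnx` are illustrative assumptions, not anything from Microsoft or Intel. The sketch also does not show whatever extra device selection the NPU developer preview may require; whether the work lands on a GPU or an NPU depends on the installed runtime, drivers, and hardware.

```python
from typing import Iterable, List

# Preference order (an assumption for this sketch): DirectML first, CPU as fallback.
PREFERRED = ("DmlExecutionProvider", "CPUExecutionProvider")


def pick_providers(available: Iterable[str],
                   preferred: Iterable[str] = PREFERRED) -> List[str]:
    """Return the preferred providers that are actually available, in preference order."""
    available_set = set(available)
    return [name for name in preferred if name in available_set]


def make_session(model_path: str):
    """Open an ONNX model on the best available provider.

    Needs an ONNX Runtime package installed (for DirectML, the
    onnxruntime-directml build). Imported lazily so pick_providers
    can be used and tested without it.
    """
    import onnxruntime as ort

    providers = pick_providers(ort.get_available_providers())
    if not providers:
        raise RuntimeError("no usable execution provider found")
    return ort.InferenceSession(model_path, providers=providers)
```

Calling `make_session("model.onnx")` with a hypothetical model file and then `session.get_providers()` reports which providers the runtime actually enabled. The app code stays the same either way, much as a DirectX game does not change from one graphics card to the next.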