Joy Buolamwini’s AI research was attracting attention years before she received her Ph.D. from the MIT Media Lab in 2022. As a graduate student, she made waves with a 2016 TED talk about algorithmic bias that has received more than 1.6 million views to date. In the talk, Buolamwini, who is Black, showed that standard facial detection systems didn’t recognize her face unless she put on a white mask. During the talk, she also brandished a shield emblazoned with the logo of her new organization, the Algorithmic Justice League, which she said would fight for people harmed by AI systems, people she would later come to call the excoded.

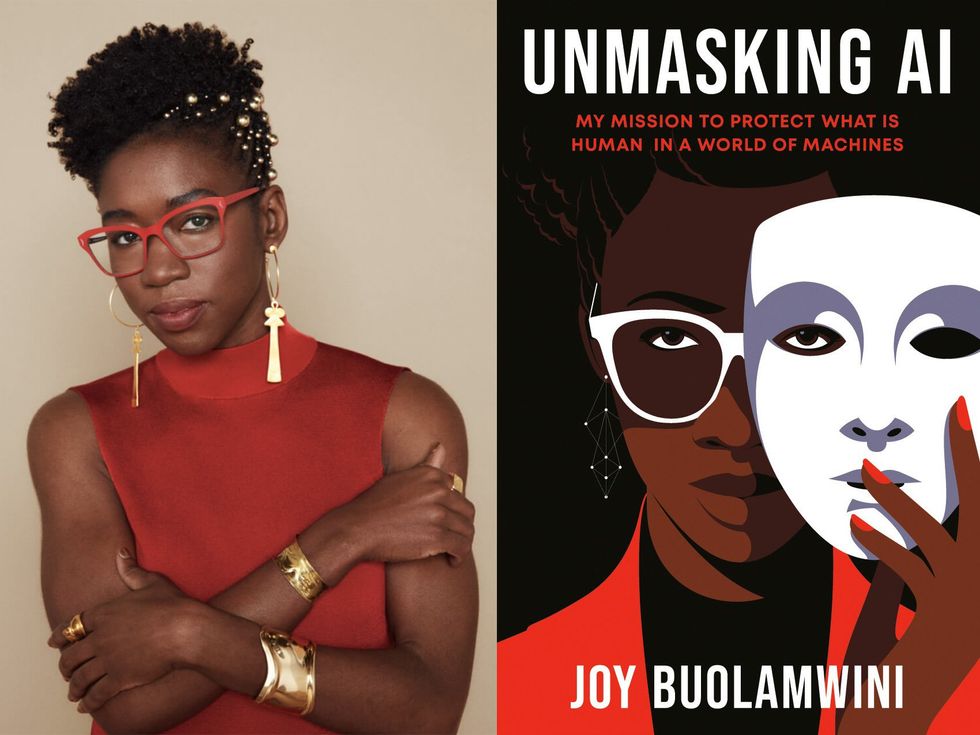

In her new book, Unmasking AI: My Mission to Protect What Is Human in a World of Machines, Buolamwini describes her own awakenings to the clear and present dangers of today’s AI. She explains her research on facial recognition systems and the Gender Shades research project, in which she showed that commercial gender classification systems consistently misclassified dark-skinned women. She also narrates her stratospheric rise—in the years since her TED talk, she has presented at the World Economic Forum, testified before Congress, and participated in President Biden’s roundtable on AI.

While the book is an interesting read on an autobiographical level, it also contains useful prompts for AI researchers who are ready to question their assumptions. She reminds engineers that default settings are not neutral, that convenient datasets may be rife with ethical and legal problems, and that benchmarks aren’t always assessing the right things. Via email, she answered IEEE Spectrum’s questions about how to be a principled AI researcher and how to change the status quo.

One of the most interesting parts of the book for me was your detailed description of how you did the research that became Gender Shades: how you figured out a data collection method that felt ethical to you, struggled with the inherent subjectivity in devising a classification scheme, did the labeling labor yourself, and so on. It seemed to me like the opposite of the Silicon Valley “move fast and break things” ethos. Can you imagine a world in which every AI researcher is so scrupulous? What would it take to get to such a state of affairs?

Joy Buolamwini: When I was earning my academic degrees and learning to code, I did not have examples of ethical data collection. Basically if the data were available online it was there for the taking. It can be difficult to imagine another way of doing things, if you never see an alternative pathway. I do believe there is a world where more AI researchers and practitioners exercise more caution with data-collection activities, because of the engineers and researchers who reach out to the Algorithmic Justice League looking for a better way. Change starts with conversation, and we are having important conversations today about data provenance, classification systems, and AI harms that when I started this work in 2016 were often seen as insignificant.

What can engineers do if they’re concerned about algorithmic bias and other issues regarding AI ethics, but they work for a typical big tech company? The kind of place where nobody questions the use of convenient datasets or asks how the data was collected and whether there are problems with consent or bias? Where they’re expected to produce results that measure up against standard benchmarks? Where the choices seem to be: Go along with the status quo or find a new job?

Buolamwini: I cannot stress enough the importance of documentation. In conducting algorithmic audits and approaching well-known tech companies with the results, one issue that came up time and time again was the lack of internal awareness about the limitations of the AI systems that were being deployed. I do believe adopting tools like datasheets for datasets and model cards for models, approaches that provide an opportunity to see the data used to train AI models and the performance of those AI models in various contexts, is an important starting point.

Just as important is also acknowledging the gaps, so AI tools are not presented as working in a universal manner when they are optimized for just a specific context. These approaches can show how robust or not an AI system is. Then the question becomes, Is the company willing to release a system with the limitations documented or are they willing to go back and make improvements?

It can be helpful to not view AI ethics separately from developing robust and resilient AI systems. If your tool doesn’t work as well on women or people of color, you are at a disadvantage compared to companies who create tools that work well for a variety of demographics. If your AI tools create harmful stereotypes or hate speech you are at risk for reputational damage that can impede a company’s ability to recruit necessary talent, secure future customers, or gain follow-on investment. If you adopt AI tools that discriminate against protected classes for core areas like hiring, you risk litigation for violating antidiscrimination laws. If AI tools you adopt or create use data that violates copyright protections, you open yourself up to litigation. And with more policymakers looking to regulate AI, companies that ignore issues of algorithmic bias and AI discrimination may end up facing costly penalties that could have been avoided with more forethought.

“It can be difficult to imagine another way of doing things, if you never see an alternative pathway.” —Joy Buolamwini, Algorithmic Justice League

You write that “the choice to stop is a viable and necessary option” and say that we can reverse course even on AI tools that have already been adopted. Would you like to see a course reversal on today’s tremendously popular generative AI tools, including chatbots like ChatGPT and image generators like Midjourney? Do you think that’s a feasible possibility?

Buolamwini: Facebook (now Meta) deleted a billion faceprints around the time of a [US] $650 million settlement after they faced allegations of collecting face data to train AI models without the expressed consent of users. Clearview AI stopped offering services in a number of Canadian provinces after investigations into their data-collection process were challenged. These actions show that when there is resistance and scrutiny there can be change.

You describe how you welcomed the AI Bill of Rights as an “affirmative vision” for the kinds of protections needed to protect civil rights in the age of AI. That document was a nonbinding set of guidelines for the federal government as it began to think about AI regulations. Just a few weeks ago, President Biden issued an executive order on AI that followed up on many of the ideas in the Bill of Rights. Are you satisfied with the executive order?

Buolamwini: The EO [executive order] on AI is a welcomed development as governments take more steps toward preventing harmful uses of AI systems, so more people can benefit from the promise of AI. I commend the EO for centering the values of the AI Bill of Rights including protection from algorithmic discrimination and the need for effective AI systems. Too often AI tools are adopted based on hype without seeing if the systems themselves are fit for purpose.

You’re dismissive of concerns about AI becoming superintelligent and posing an existential risk to our species, and write that “existing AI systems with demonstrated harms are more dangerous than hypothetical ‘sentient’ AI systems because they are real.” I recall a tweet from last June in which you talked about people concerned with existential risk and said that you “see room for strategic cooperation” with them. Do you still feel that way? What might that strategic cooperation look like?

Buolamwini: The “x-risk” I am concerned about, which I talk about in the book, is the x-risk of being excoded—that is, being harmed by AI systems. I am concerned with lethal autonomous weapons and giving AI systems the ability to make kill decisions. I am concerned with the ways in which AI systems can be used to kill people slowly through lack of access to adequate health care, housing, and economic opportunity.

I do not think you make change in the world by only talking to people who agree with you. A lot of the work with AJL has been engaging with stakeholders with different viewpoints and ideologies to better comprehend the incentives and concerns that are driving them. The recent U.K. AI Safety Summit is an example of a strategic cooperation where a variety of stakeholders convened to examine safeguards that can be put in place on near-term AI risks as well as emerging threats.

As part of the Unmasking AI book tour, Sam Altman and I recently had a conversation on the future of AI where we discussed our varying viewpoints as well as found common ground: namely that companies cannot be left to govern themselves when it comes to preventing AI harms. I believe these kinds of discussions provide opportunities to go beyond incendiary headlines. When Sam was talking about AI enabling humanity to be better—a frame we see so often with the creation of AI tools—I asked which humans will benefit. What happens when the digital divide becomes an AI chasm? In asking these questions and bringing in marginalized perspectives, my aim is to challenge the entire AI ecosystem to be more robust in our analysis and hence less harmful in the processes we create and systems we deploy.

What’s next for the Algorithmic Justice League?

Buolamwini: AJL will continue to raise public awareness about specific harms that AI systems produce, steps we can put in place to address those harms, and continue to build out our harms reporting platform which serves as an early-warning mechanism for emerging AI threats. We will continue to protect what is human in a world of machines by advocating for civil rights, biometric rights, and creative rights as AI continues to evolve. Our latest campaign is around TSA use of facial recognition which you can learn more about via fly.ajl.org.

Think about the state of AI today, encompassing research, commercial activity, public discourse, and regulations. Where are you on a scale of 1 to 10, if 1 is something along the lines of outraged/horrified/depressed and 10 is hopeful?

Buolamwini: I would offer a less quantitative measure and instead offer a poem that better captures my sentiments. I am overall hopeful, because my experiences since my fateful encounter with a white mask and a face-tracking system years ago have shown me change is possible.

THE EXCODED

To the Excoded

Resisting and revealing the lie

That we must adopt

The surrender of our faces

The harvesting of our data

The plunder of our traces

We celebrate your courage

No Silence

No Consent

You show the path to algorithmic justice requires a league

A sisterhood, a neighborhood,

Hallway gatherings

Sharpies and posters

Coalitions Petitions Testimonies, Letters

Research and potlucks

Dancing and music

Everyone playing a role to orchestrate change

To the excoded and freedom fighters around the world

Persisting and prevailing against

algorithms of oppression

automating inequality

through weapons of math destruction

we Stand with you in gratitude

You show the people have a voice and a choice.

When defiant melodies harmonize to lift

human life, dignity, and rights.

The victory is ours.
