As US vice-president Kamala Harris gets ready to meet Rishi Sunak at an AI summit in the UK this week, President Joe Biden has unveiled his attempt to lead regulation in the space.

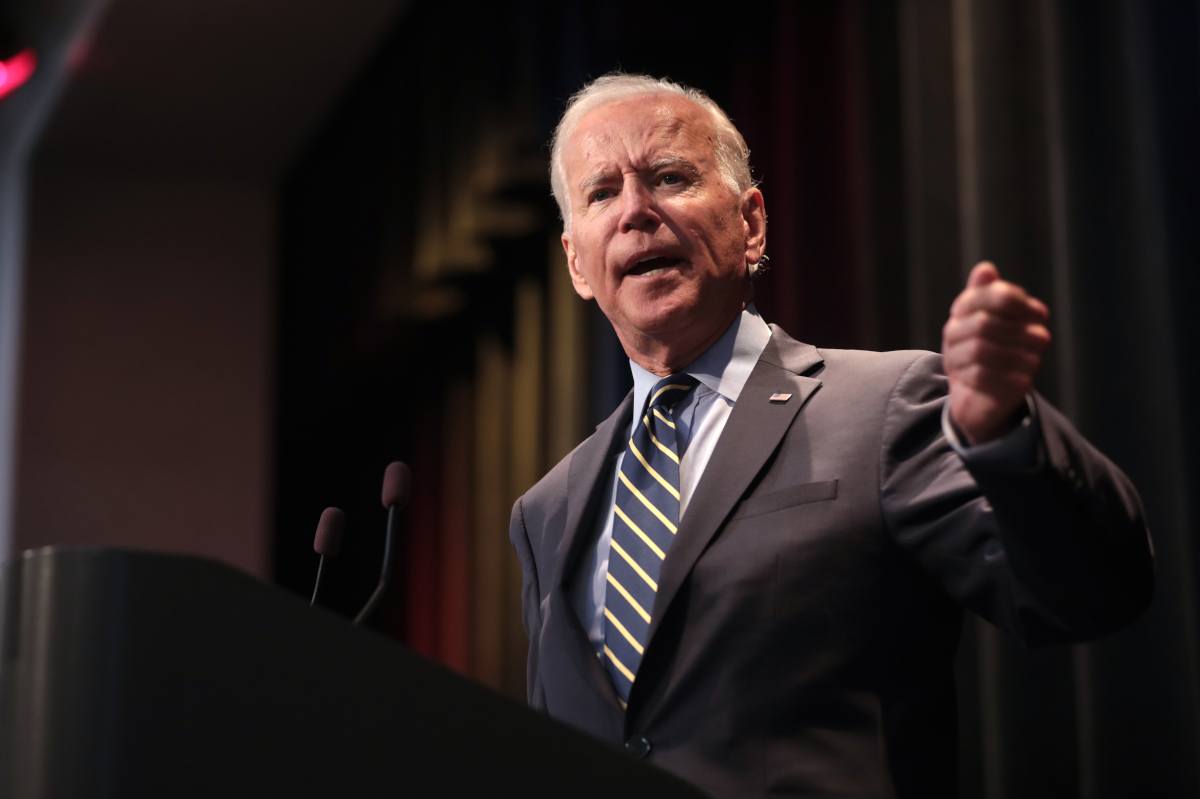

In the latest effort to regulate the rapidly advancing field of artificial intelligence, US president Joe Biden signed an executive order yesterday (30 October) to create AI safeguards.

While the technology holds promise to improve a vast range of human activity, from discovering new medicines for diseases to predicting natural disasters caused by the climate crisis, many have raised concerns about the potential dangers of leaving its development unchecked.

“One thing is clear: To realise the promise of AI and avoid the risks, we need to govern this technology,” Biden said. “There’s no other way around it, in my view. It must be governed.”

National security concerns

The executive order, a directive through which a US president can manage the operations of the federal government without needing approval from Congress, requires private companies developing the most powerful AI systems to report to the federal government on the risks those systems could pose, such as helping other countries or terrorist groups create weapons of mass destruction.

Biden also focused on the pressing issue of deepfakes, which use AI to manipulate a person’s appearance or voice to create deceptive content.

“Deepfakes use AI-generated audio and video to smear reputations, spread fake news and commit fraud. I’ve watched one of me,” Biden said, referring to an experiment his staff showed him to demonstrate the technology’s potential. “I said, ‘When the hell did I say that?’”

Private companies often resist signs of heightened federal oversight, arguing that regulation stifles innovation, especially when it comes to emerging technologies. But surprisingly, that has not been the case for AI.

Executives of some of the biggest AI makers in the US, and by extension the world, have been neutral towards, or even welcoming of, regulation in the space. These include the likes of Microsoft, Google and OpenAI, which have been spearheading the advancement of generative AI over the past year.

Long-term approach

In July, for instance, major AI product makers including Amazon, Anthropic, Inflection and Microsoft made voluntary commitments to the White House to ensure robust AI security measures.

One of the major commitments is to test the safety of their AI products internally and externally before releasing them to the public, all the while remaining transparent about the process by sharing information with governments, civil society and academia.

And now, experts have called the latest executive order “one of the most comprehensive approaches to governing” AI technology.

“As we have seen with recent AI developments, the technology is moving at a rapid pace and has already made an impact on society, with diverse applications across industries and regions,” said Michael Covington, vice-president of strategy at Jamf, a software company based in the US.

“Whilst there have been calls for regulations to govern the development of AI technologies, most have been focused on preserving end-user privacy and maintaining the accuracy and reliability of information coming from AI-based systems.”

Covington argued that the Biden administration’s executive action is “broad-based and takes a long-term” approach, with considerations for security and privacy as well as equity and civil rights, consumer protections and labour market monitoring.

“The intention is valid – ensuring AI is developed and used responsibly. But the execution must be balanced to avoid regulatory paralysis. Efficient and nimble regulatory processes will be needed to truly realise the benefits of comprehensive AI governance,” he said.

“I am optimistic that this holistic approach to governing the use of AI will lead to not only safer and more secure systems but will favour those that have a more positive and sustainable impact on society as a whole.”

‘A model for international action’

The order is an attempt by the US, the breeding ground for much of the technology’s innovation, to lead the way in regulating AI, but it is far from the first such attempt. On this side of the pond, both the UK and the EU have been working to catch up with the burgeoning powers of AI before it spirals out of control.

In June, members of the European Parliament voted to adopt their position on the landmark AI Act, which aims to rein in ‘high-risk’ AI activities and protect the rights of citizens. The rules would ban certain AI technologies outright and place others on a high-risk list, imposing obligations on their creators.

Meanwhile, UK prime minister Rishi Sunak is hosting a global AI summit later this week, which is expected to be attended by some big names from both the public and private sectors, including US vice-president Kamala Harris and Elon Musk.

AI does not respect borders, so it cannot be developed alone.

That’s why we’re hosting the world’s first ever AI Safety Summit next week.

We’ll work closely with international partners to address the risks head on so we can make the most of the opportunities AI can bring.

— Rishi Sunak (@RishiSunak) October 26, 2023

“We have a moral, ethical and societal duty to make sure that AI is adopted and advanced in a way that protects the public from potential harm,” Harris said yesterday. “We intend that the actions we are taking domestically will serve as a model for international action.”

With AI advancing in leaps and bounds and geopolitical tensions rising globally, it remains to be seen whether governments can keep up and prevent artificial intelligence from causing some real damage.


Joe Biden in 2019. Image: Gage Skidmore/Flickr (CC BY-SA 4.0 DEED)