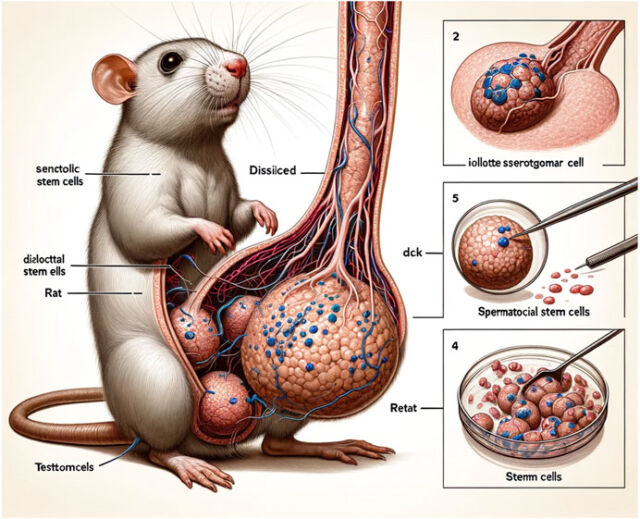

Dismay and scorn ripped through scientists’ social media networks Thursday as several egregiously bad AI-generated figures circulated from a peer-reviewed article recently published in a reputable journal. Those figures, which the authors acknowledge in the article’s text were made by Midjourney, are all uninterpretable. They contain gibberish text, and, most strikingly, one includes an image of a rat with grotesquely large and bizarre genitals, as well as a text label reading “dck.”

On Thursday, the publisher of the review article, Frontiers, posted an “expression of concern,” noting that it is aware of concerns regarding the published piece. “An investigation is currently being conducted and this notice will be updated accordingly after the investigation concludes,” the publisher wrote.

The article in question is titled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway” and was authored by three researchers in China, including the corresponding author, Dingjun Hao of Xi’an Honghui Hospital. It was published online Tuesday in the journal Frontiers in Cell and Developmental Biology.

Frontiers did not immediately respond to Ars’ request for comment, but we will update this post with any response.

The first figure in the paper, the one containing the rat, drew immediate attention as scientists began widely sharing it and commenting on it on social media platforms, including Bluesky and the platform formerly known as Twitter. Even from a distance, the anatomical image is clearly all sorts of wrong. But looking closer only reveals more flaws, including the labels “dissilced,” “Stemm cells,” “iollotte sserotgomar,” and “dck.” Many researchers expressed surprise and dismay that such a blatantly bad AI-generated image could pass through the peer-review system and whatever internal processing is in place at the journal.

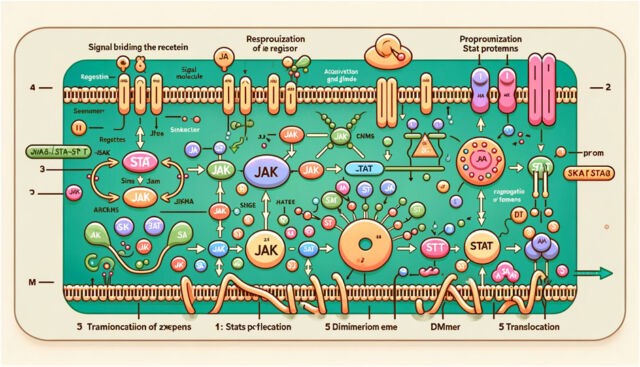

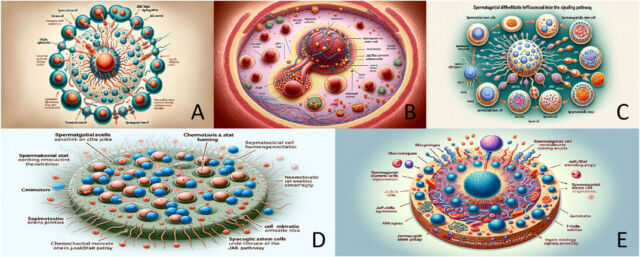

But the rat’s package is far from the only problem. Figure 2 is less graphic but equally mangled. While it’s intended to be a diagram of a complex signaling pathway, it is instead a jumbled mess. One scientific integrity expert wondered whether it provided an overly complicated explanation of “how to make a donut with colorful sprinkles.” Like the first image, the diagram is rife with nonsense text and baffling images. Figure 3 is no better, offering a collage of small circular images densely annotated with gibberish. That figure is supposed to provide visual representations of how the signaling pathway from Figure 2 regulates the biological properties of spermatogonial stem cells.

Some scientists online questioned whether the text was also AI-generated. One user noted that AI detection software determined that it was likely to be AI-generated; however, as Ars has reported previously, such software is unreliable.

The images, while egregious examples, highlight a growing problem in scientific publishing. A scientist’s success relies heavily on their publication record: a large volume of publications, frequent publishing, and articles in top-tier journals all earn scientists more prestige. The system incentivizes less-than-scrupulous researchers to push through low-quality articles, which, in the era of AI chatbots, could potentially be generated with the help of AI. Researchers worry that the growing use of AI will make published research less trustworthy. As such, research journals have recently set new authorship guidelines for AI-generated text to try to address the problem. But for now, as the Frontiers article shows, there are clearly some gaps.