
Researchers from the Australian National University, the University of Oxford, and the Beijing Academy of Artificial Intelligence have developed a new AI system called “3D-GPT” that can generate 3D models from text descriptions provided by a user.

The system, described in a paper posted on arXiv, aims to offer a more efficient and intuitive way to create 3D assets than traditional 3D modeling workflows.

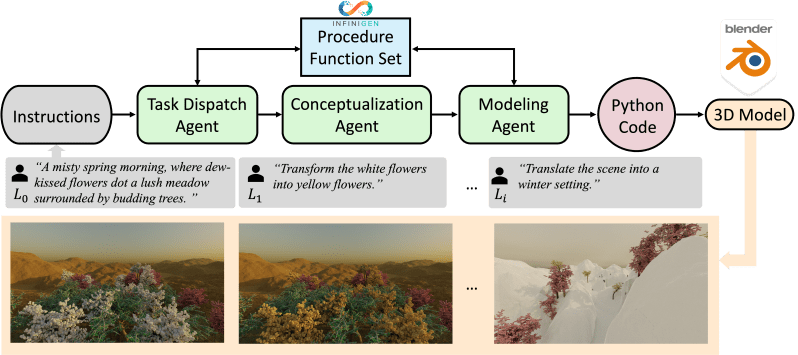

3D-GPT breaks procedural 3D modeling into smaller subtasks and assigns each one to a suitable agent, according to the paper. It uses multiple AI agents, each focused on a different part of interpreting the text prompt and executing modeling functions.

“3D-GPT positions LLMs [large language models] as proficient problem solvers, dissecting the procedural 3D modeling tasks into accessible segments and appointing the apt agent for each task,” the researchers stated.


The key agents include a “task dispatch agent” that parses the text instructions, a “conceptualization agent” that adds details missing from the initial description, and a “modeling agent” that sets parameters and generates code to drive 3D software like Blender.

By breaking the modeling process into steps and assigning each step to a specialized agent, 3D-GPT interprets text prompts, enriches the descriptions with extra detail, and ultimately generates 3D assets that match what the user envisioned.
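To make this division of labor concrete, here is a minimal Python sketch of how a dispatch, conceptualization, and modeling pipeline might be wired together. It is not the researchers' code: each "agent" is a hypothetical rule-based stub standing in for an LLM call, and the function library (`add_flowers`, `add_trees`, `set_weather`) and its parameters are invented for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical library of procedural modeling functions with default
# parameters. 3D-GPT's real function library is different and far larger.
FUNCTION_LIBRARY = {
    "add_flowers": {"density": 0.5, "color": "white"},
    "add_trees": {"count": 10, "season": "summer"},
    "set_weather": {"fog_density": 0.0, "time_of_day": "noon"},
}

# Keyword routing used by the dispatch stub in place of an LLM.
KEYWORDS = {
    "flower": "add_flowers",
    "tree": "add_trees",
    "misty": "set_weather",
    "morning": "set_weather",
}

# Extra detail the conceptualization stub adds for each function.
ENRICHMENTS = {
    "add_flowers": "small pale-pink blossoms covered in dew",
    "add_trees": "young trees with light-green buds",
    "set_weather": "low-lying fog and soft early light",
}


@dataclass
class Subtask:
    function: str
    description: str
    params: dict = field(default_factory=dict)


def task_dispatch_agent(instruction: str) -> list[Subtask]:
    """Decide which modeling functions the instruction needs."""
    text = instruction.lower()
    chosen: list[str] = []
    for keyword, function in KEYWORDS.items():
        if keyword in text and function not in chosen:
            chosen.append(function)
    return [Subtask(function, instruction) for function in chosen]


def conceptualization_agent(task: Subtask) -> Subtask:
    """Expand the terse user description with details it left out."""
    task.description += f" | detail: {ENRICHMENTS[task.function]}"
    return task


def modeling_agent(task: Subtask) -> Subtask:
    """Map the enriched description onto concrete function parameters."""
    params = dict(FUNCTION_LIBRARY[task.function])  # copy, keep defaults intact
    text = task.description.lower()
    if task.function == "add_flowers":
        if "pink" in text:
            params["color"] = "pink"
        if "dot" in text:  # flowers that "dot" a meadow suggests sparse coverage
            params["density"] = 0.3
    elif task.function == "add_trees":
        if "bud" in text:
            params["season"] = "spring"
    elif task.function == "set_weather":
        if "misty" in text or "fog" in text:
            params["fog_density"] = 0.6
        if "morning" in text:
            params["time_of_day"] = "morning"
    task.params = params
    return task


def run_pipeline(instruction: str) -> list[Subtask]:
    """Dispatch, enrich, then parameterize each subtask in turn."""
    return [
        modeling_agent(conceptualization_agent(task))
        for task in task_dispatch_agent(instruction)
    ]


if __name__ == "__main__":
    prompt = ("a misty spring morning, where dew-kissed flowers dot a lush "
              "meadow surrounded by budding trees")
    for task in run_pipeline(prompt):
        print(task.function, task.params)
```

Run on the meadow prompt quoted in the article, the sketch selects three functions and, for instance, sets `season` to `"spring"` for the trees. Keeping each stage as a separate function mirrors the modular design the article describes: any one stub could be swapped for a real model call without touching the others.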

“It enhances concise initial scene descriptions, evolving them into detailed forms while dynamically adapting the text based on subsequent instructions,” the paper explained.

The system was tested on prompts such as “a misty spring morning, where dew-kissed flowers dot a lush meadow surrounded by budding trees.” 3D-GPT generated complete 3D scenes with realistic graphics that reflected the elements described in the text.

While the graphics are not yet photorealistic, the early results suggest that this agent-based approach could simplify 3D content creation. The modular architecture could also allow each agent to be improved independently.

“Our empirical investigations confirm that 3D-GPT not only interprets and executes instructions, delivering reliable results but also collaborates effectively with human designers,” the researchers wrote.

By generating code to control existing 3D software instead of building models from scratch, 3D-GPT provides a flexible foundation to build on as modeling techniques continue to advance.
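The idea of emitting code for an existing tool, rather than producing geometry directly, can be illustrated with a small Python sketch. The object-spec format and `emit_blender_script` are hypothetical; the emitted lines use Blender's standard `bpy.ops.mesh` primitive operators as stand-ins for the procedural functions 3D-GPT actually calls. The function only builds a script string, so it runs without Blender installed.

```python
# Hypothetical scene format: a list of dicts such as a modeling agent might
# produce. Only three primitive kinds are supported in this sketch.


def emit_blender_script(objects: list[dict]) -> str:
    """Translate object specs into the text of a Blender Python (bpy) script."""
    lines = ["import bpy", ""]
    for obj in objects:
        kind = obj["kind"]
        loc = tuple(obj.get("location", (0.0, 0.0, 0.0)))
        if kind == "ground":
            lines.append(
                f"bpy.ops.mesh.primitive_plane_add(size={obj['size']}, location={loc})"
            )
        elif kind == "trunk":
            lines.append(
                "bpy.ops.mesh.primitive_cylinder_add("
                f"radius={obj['radius']}, depth={obj['height']}, location={loc})"
            )
        elif kind == "canopy":
            lines.append(
                f"bpy.ops.mesh.primitive_uv_sphere_add(radius={obj['radius']}, location={loc})"
            )
        else:
            raise ValueError(f"unsupported object kind: {kind!r}")
    return "\n".join(lines)


if __name__ == "__main__":
    # A ground plane plus one crude tree (cylinder trunk, sphere canopy).
    scene = [
        {"kind": "ground", "size": 20.0},
        {"kind": "trunk", "radius": 0.2, "height": 3.0, "location": (1.0, 2.0, 1.5)},
        {"kind": "canopy", "radius": 1.2, "location": (1.0, 2.0, 3.5)},
    ]
    print(emit_blender_script(scene))
```

The printed script could then be pasted into Blender's scripting workspace and run there. Producing source text keeps the language-model side decoupled from the 3D software, which is the kind of flexibility the article points to: as the modeling tools improve, only the emitted calls need to change.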

The researchers conclude that their system “highlights the potential of LLMs in 3D modeling, offering a basic framework for future advancements in scene generation and animation.”

If the approach matures, this research could make 3D modeling considerably more efficient and accessible. As 3D content becomes central to the metaverse and related platforms, tools like 3D-GPT could prove valuable to creators and decision-makers across industries, from gaming and virtual reality to film and multimedia experiences.

The 3D-GPT framework is still at an early stage and has limitations, but it marks a notable step forward in AI-driven 3D modeling and opens up possibilities for future work.

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.