A lot has changed in the two years since Facebook released its Ray-Ban-branded smart glasses. Facebook is now called Meta. And its smart glasses also have a new name: the Ray-Ban Meta smart glasses. Two years ago, I was unsure exactly how I felt about the product. The Ray-Ban Stories were the most polished smart glasses I’d tried, but with mediocre camera quality, they felt like more of a novelty than something most people could use.

After a week with the company’s latest $299 sunglasses, I still think they feel a little bit like a novelty. But Meta has managed to improve the core features, while making them more useful with new abilities like livestreaming and hands-free photo messaging. And the addition of an AI assistant opens up some intriguing possibilities. There are still privacy concerns, but the improvements might make the tradeoff feel more worth it, especially for creators and those already comfortable with Meta’s platform.

Ray-Ban Meta smart glasses

Pros

- Sleeker charging case

- Better audio and camera quality

- Hands-free photo messaging

- Instagram livestreaming abilities

Cons

- Meta AI isn’t super useful yet

- Limited functionality outside of Meta’s ecosystem

What’s changed

Just like its predecessor, the Ray-Ban Meta smart glasses look and feel much more like a pair of Ray-Bans than a gadget, and that’s still a good thing. Meta has slimmed down both the frames and the charging case, which now looks like the classic tan leather Ray-Ban pouch. The glasses are still a bit bulkier than a typical pair of shades, but they don’t feel heavy, even with extended use.

This year’s model has ditched the power switch of the original, which is nice. The glasses now automatically turn on when you pull them out of the case and put them on (though you sometimes have to launch the Meta View app to get them to connect to your phone).

Image by Karissa Bell for Engadget

The glasses themselves now charge wirelessly through the nosepiece, rather than near the hinges. According to Meta, the device can go about four hours on one charge, and the case holds an additional four charges. In a week of moderate use, I haven’t had to top up the case, but I do wish there were a more precise indication of its battery level than the light at the front (the Meta View app will display the exact power level of your glasses, but not the case).

My other minor complaint is that the new charging setup makes it slightly more difficult to pull the glasses out of the case. It takes a little bit of force to yank the frames off the magnetic charging contacts, and the vertical orientation of the case makes it easy to grab (and smudge) the lenses.

The latest generation of smart glasses comes in both the signature Wayfarer style, which starts at $299, and a new, rounder “Headliner” design, which sells for $329. I opted for a pair of Headliners in the blue “shiny jeans” color, but there are tan and black variations as well. One thing to note about the new colors is that both the “shiny jeans” and “shiny caramel” options are slightly transparent, so you can see some of the circuitry and other tech embedded in the frames.

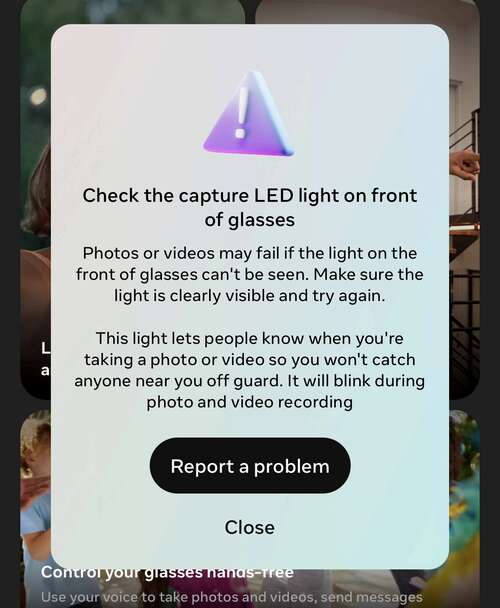

The lighter colors also make the camera and LED indicator on the top corner of each lens stand out a bit more than on their black counterparts. (Meta has also updated its software to prevent the camera from being used when the LED is covered.) None of this bothered me, but if you want a more subtle look, the black frames are better at disguising the tech inside.

New camera, better audio

Look closely at the transparent frames, though, and you can see evidence of the many upgrades. There are now five mics embedded in each pair, two in each arm and one in the nosepiece. The additional mics also enable some new “immersive” audio features for videos. If you record a clip with sound coming from multiple sources — like someone speaking in front of you and another person behind you — you can hear their voices coming from different directions when you play back the video through the frames. It’s a neat trick, but doesn’t feel especially useful.

The directional audio is, however, a sign of how dramatically the sound quality has improved. The open-ear speakers are 50 percent louder and, unlike the previous generation, don’t distort at higher volumes. Meta says the new design has also reduced the amount of sound leakage, but I found this really depends on the volume you’re listening at and your surrounding noise conditions.

There will always be some quality tradeoffs when it comes to open-ear speakers, but it’s still one of my favorite features of the glasses. The design makes for a much more balanced level of ambient noise than any kind of “transparency mode” I’ve experienced with earbuds or headphones. And it’s especially useful for things like jogging or hiking when you want to maintain some awareness of what’s around you.

Camera quality was one of the most disappointing features on the first-generation Ray-Ban Stories, so I was happy to see that Meta and Luxottica ditched the underpowered 5-megapixel cameras for a 12-megapixel ultra-wide.

The upgraded camera still isn’t as sharp as most phones, but it’s more than adequate for social media. Shots in broad daylight were clear and the colors were more balanced than snaps from the original Ray-Ban Stories, which tended to look over-processed. I was surprised that even photos I took indoors or at dusk — occasions when most people wouldn’t wear sunglasses — also looked decent. One note of caution about the ultra-wide lens, however: if you have long hair or bangs, it’s very easy for wisps of hair to end up in the edges of your frame if you’re not careful.

The camera also has a few new tricks of its own. In addition to 60-second videos, you can now livestream directly from the glasses to your Instagram or Facebook account. You can even use touch controls on the side of the glasses to hear a readout of likes and comments from your followers. As someone who has livestreamed to my personal Instagram account exactly one time before this week, I couldn’t imagine myself using this feature.

But after trying it out, it was a lot cooler than I expected. Streaming a first-person view from your glasses is much easier than holding up your phone, and being able to seamlessly switch between the first-person view and the one from your phone’s camera is something I could see being incredibly useful to creators. I still don’t see many IG Lives in my future, but the smart glasses could enable some really creative use cases for content creators.

The other new camera feature I really appreciated was the ability to snap a photo and share it directly with a contact via WhatsApp or Messenger (but not Instagram DMs) using only voice commands. While this means you can’t review the photo before sending it, it’s a much faster and more convenient way to share photos on the go.

Two years ago, I really didn’t see the point of having voice commands on the Ray-Ban Stories. Saying “hey Facebook” felt too cringey to utter in public, and it just didn’t seem like there was much point to the feature. However, the addition of Meta’s AI assistant makes voice interactions a key feature rather than an afterthought.

The Ray-Ban Meta smart glasses are one of the first hardware products to ship with Meta’s new generative AI assistant built in. This means you can chat with the assistant about a range of topics. Answers to queries are broadcast through the internal speakers, and you can revisit your past questions and responses in the Meta View app.

To be clear: I still feel really weird saying “hey Meta,” or “OK Meta,” and haven’t yet done so in public. But there is now, at least, a reason you may want to. For now, the assistant is unable to provide “real-time” information other than the current time or weather forecast. So it won’t be able to help with some practical queries, like those about sports standings or traffic conditions. The assistant’s “knowledge cutoff” is December 2022, and it will remind you of that for most questions related to current events. However, there were a few questions I asked where it hallucinated and gave made-up (but nonetheless real-sounding) answers. Meta has said this kind of thing is an expected part of the development of large language models, but it’s important to keep in mind when using Meta AI.

Meta has suggested you should instead use it more for creative or general interest questions, like basic trivia or travel ideas. As with other generative AI tools, I found that the more creative and specific your questions, the better the answer. For example, “Hey Meta, what’s an interesting Instagram caption for a view of the Golden Gate Bridge,” generated a pretty generic response that sounded more like an ad. But “hey Meta, write a fun and interesting caption for a photo of the Golden Gate Bridge that I can share on my cat’s Instagram account,” was slightly better.

That said, I’ve been mostly underwhelmed by my interactions with Meta AI. The feature still feels like something of a novelty, though I appreciated the mostly neutral personality of Meta AI on the glasses compared to the company’s corny celebrity-infused chatbots.

And, skeptical as I am, Meta has given a few hints about intriguing future possibilities for the technology. Onstage at Connect, the company offered a preview of an upcoming feature that will allow wearers to ask questions based on what they’re seeing through their glasses. For example, you could look at a monument and ask Meta to identify what you’re looking at. This “multi-modal” search capability is coming sometime next year, according to the company, and I’m looking forward to revisiting Meta AI once the update rolls out.

Privacy

The addition of generative AI also raises new privacy concerns. First, even if you already have a Facebook or Instagram account, you’ll need a Meta account to use the glasses. While this also means they don’t require you to use Facebook or Instagram, not everyone will be thrilled at the idea of creating another Meta-linked account.

The Meta View app still has no ads and the company says it won’t use the contents of your photos or video for advertising. The app will store transcripts of your voice commands by default, though you can opt to remove transcripts and associated voice recordings from the app’s settings. If you do allow the app to store voice recordings, these can be surfaced to “trained reviewers” to “improve, troubleshoot and train Meta’s products.”

I asked the company if it plans to use Meta AI queries to inform its advertising and a spokesperson said that “at this time we do not use the generative AI models that power our conversational AIs, including those on smart glasses, to personalize ads.” So you can rest easy that your interactions with Meta AI won’t be fed into Meta’s ad-targeting machine, at least for now. But it’s not unreasonable to imagine that could one day change. Meta tends to keep new products ad-free in the beginning and introduce ads once they start to reach a critical mass of users. And other companies, like Snap, are already using generative AI to boost their ad businesses.

Are they worth it?

If any of that makes you uncomfortable, or you’re interested in using the shades with non-Meta apps, then you might want to steer clear of the Ray-Ban Meta smart glasses. Though your photos and videos can be exported to any app, most of the device’s key features work best when you’re playing in Meta’s ecosystem. For example, you can connect your WhatsApp and Messenger accounts to send hands-free photos or texts, but the glasses don’t support other third-party messaging apps. (Meta AI will read out incoming texts and can send photos and texts via your phone’s native messaging app, however.) Likewise, the livestreaming abilities are limited to Instagram and Facebook, and won’t work with other platforms.

If you’re a creator or already spend a lot of time in Meta’s apps, though, there are plenty of reasons to give the second-generation shades a look. While the Ray-Ban Stories of two years ago were a fun, if overly expensive, novelty, the $299 Ray-Ban Meta smart glasses feel more like a finished product. The improved audio and photo quality better justify the price, and the addition of AI makes them feel like a product that’s likely to improve rather than a gadget that will start to become obsolete as soon as you buy it.

Update, October 18 2023, 12:33PM ET: This story has been updated to reflect that the Ray-Ban Meta smart glasses support sending text messages with voice commands.