Securing artificial intelligence (AI) and machine learning (ML) workflows is a complex challenge that can involve multiple components.

Seattle-based startup Protect AI is growing its platform solution to the challenge of securing AI today with the acquisition of privately-held Laiyer AI, which is the lead firm behind the popular LLM Guard open-source project. Financial terms of the deal are not being publicly disclosed. The acquisition will allow Protect AI to extend the capabilities of its AI security platform to better protect organizations against potential risks from the development and usage of large language models (LLMs).

The core commercial platform developed by Protect AI is called Radar, which provides visibility, detection and management capabilities for AI/ML models. The company raised $35 million in a Series A round of funding in July 2023 to help expand its AI security efforts.

“We want to drive the industry to adopt MLSecOps,” Daryan (D) Dehghanpisheh, president and founder of Protect AI told VentureBeat. “The adoption of MLSecOps fundamentally helps you see, know and manage all forms of your AI risk and security vulnerabilities.”

What LLM Guard and Laiyer bring to Protect AI

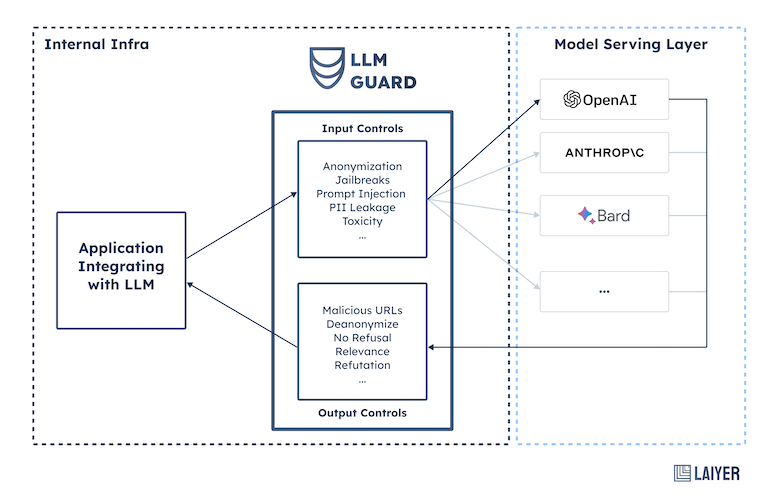

The LLM Guard open-source project that Laiyer AI leads helps to govern the usage of LLM operations.

LLM Guard has input controls to help protect against prompt injection attacks, which is an increasingly dangerous risk for AI usage. The open source technology also has input control to help limit the risk of personally identifiable information (PII) leakage as well as toxic language. On the output side, LLM Guard can help protect users against various risks including malicious URLs.

Dehghanpisheh emphasized that Protect AI remains committed to keeping the core LLM Guard technology open source. The plan is to develop a commercial offering called Laiyer AI that will provide additional performance and enterprise capabilities that are not present in the core open-source project.

Protect AI will also be working to integrate the LLM Guard technology into its broader platform approach to help protect AI usage from the model development and selection stage, right through to deployment.

Open source experience extends to vulnerability scanning

The approach of starting with an open-source effort and building it into a commercial product is something that Protect AI has done before.

The company also leads the ModelScan open source project which helps to identify security risks in machine learning models. The model scan technology is the basis for Protect AI’s commercial Guardian technology which was announced just last week on Jan. 24.

Scanning models for vulnerabilities is a complicated process. Unlike a traditional virus scanner in application software, there commonly aren’t specific known vulnerabilities in ML model code to scan against.

“The thing you need to understand about a model is that it’s a self-executing piece of code,” Dehghanpisheh explained. “The problem is it’s really easy to embed executable calls in that model file that can be doing things that have nothing to do with machine learning.”

Those executable calls could potentially be malicious, which is a risk that Protect AI’s Guardian technology helps to identify.

Protect AI’s growing platform ambitions

Having individual point products to help protect AI is only the beginning of Dehghanpisheh’s vision for Protect AI.

In the security space, organizations likely are already using any number of different technologies from multiple vendors. The goal is to bring Protect AI’s tools together with its Radar platform that can also integrate into existing security tools that an organization might use, like SIEM (Security Information and Event Management) tools which are commonly deployed in security operations centers (SOCs).

Dehghanpisheh explained that Radar helps to provide a bill of materials about what’s inside of an AI model. Having visibility into the components that enable a model is critical for governance as well as security. With the Guardian technology, Protect AI is now able to scan models to identify potential risks before a model is deployed, while the LLM Guard protects against usage risks. The overall platform goal for Protect AI is to have a full approach to enterprise AI security.

“You’ll be able to have one policy that you can invoke at an enterprise level that encompasses all forms of AI security,” he said.

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Discover our Briefings.