Perplexity AI, the year-old startup founded by former Google AI researchers Andy Konwinski, Aravind Srinivas, Denis Yarats, and Johnny Ho, has the potential to unseat its founders’ former employer as the top destination for web search by combining a web index and up-to-date information with a conversational, chatbot-style interface. Its chatbot, called Perplexity Copilot, has until recently relied on existing AI models (OpenAI’s GPT-4 and Anthropic’s Claude 2) as the “smarts” behind the scenes, and paying subscribers can toggle between them.

Now, the company has taken another step toward becoming the premier search destination by releasing its own large language models (LLMs): pplx-7b-online and pplx-70b-online, named for their parameter counts of 7 billion and 70 billion, respectively. They are fine-tuned and augmented versions of the open source mistral-7b and llama2-70b models from Mistral and Meta.

Parameters in AI are the learned weights on the connections between a model’s artificial neurons. The parameter count typically suggests how powerful and “intelligent” a model is, with higher counts generally indicating more knowledgeable and better-performing models.

Why Perplexity’s new online LLMs matter and how they differ from ChatGPT and others

Perplexity’s new LLMs are notable because, in addition to being available for other organizations to use and build their own apps upon through Perplexity’s API (application programming interface), they also aim to offer “helpful, factual, and up-to-date information” — the latter something most other leading LLMs, including OpenAI’s GPT-3.5 and GPT-4 (which power ChatGPT), struggle to do.

As Perplexity CEO Aravind Srinivas posted on X, the new PPLX LLMs are “the first-ever live LLM APIs that are grounded with web search data and have no knowledge cutoff!”

The knowledge cutoff for GPT-3.5 and GPT-4 was famously September 2021 until recently, when it was moved to earlier this year. That is still a far cry from having knowledge of current events and breaking news built in, though the gap is mitigated to an extent by ChatGPT’s web browsing through OpenAI partner Microsoft’s Bing search, a capability that was restored in late September 2023.

The race to provide current knowledge through LLM chatbots is heating up, too, with Elon Musk boasting that his company xAI’s new chatbot Grok will have this capability thanks to its direct integration with sibling company X (formerly Twitter) and all the real-time information posted by users on that platform. Grok has already been available to selected users in a limited beta, and will be rolling out for anyone to use this week, provided the user pays for an X Premium subscription.

Other LLM providers, such as enterprise-focused Cohere in Toronto, aim to pull more recent knowledge into their LLMs through a combination of web browsing capabilities and retrieval-augmented generation, or RAG, which allows the model to draw upon external information sources provided by an administrator, such as company files.

In the case of the new PPLX online LLMs, Perplexity has developed its own approach to pulling in recent information. As the company writes in its blog post: “our in-house search, indexing, and crawling infrastructure allows us to augment LLMs with the most relevant, up to date, and valuable information. Our search index is large, updated on a regular cadence, and uses sophisticated ranking algorithms to ensure high quality, non-SEOed sites are prioritized. Website excerpts, which we call ‘snippets’, are provided to our pplx-online models to enable responses with the most up-to-date information.”
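Perplexity has not published the internals of this pipeline, so the following is only a minimal sketch of the general idea in the quote: rank retrieved website excerpts and place the best ones in the prompt ahead of the user's question. The `Snippet` class, the `score` field, and the `build_prompt` function are hypothetical names chosen for illustration, not Perplexity's actual code.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    """A website excerpt with a relevance score (hypothetical structure)."""
    url: str
    text: str
    score: float  # higher means the ranking step judged it more relevant


def build_prompt(question: str, snippets: list[Snippet], max_snippets: int = 3) -> str:
    """Put the highest-scoring snippets ahead of the question in one prompt."""
    top = sorted(snippets, key=lambda s: s.score, reverse=True)[:max_snippets]
    lines = ["Answer the question using only the numbered snippets below.", ""]
    for i, snippet in enumerate(top, start=1):
        lines.append(f"[{i}] {snippet.url}: {snippet.text}")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


snippets = [
    Snippet("https://example.com/old", "An older report on the topic.", 0.2),
    Snippet("https://example.com/new", "A report published this morning.", 0.9),
]
prompt = build_prompt("What is the latest news on the topic?", snippets, max_snippets=1)
```

With `max_snippets=1`, only the higher-scoring excerpt reaches the model, which is how a ranking step could keep low-quality pages out of the answer.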

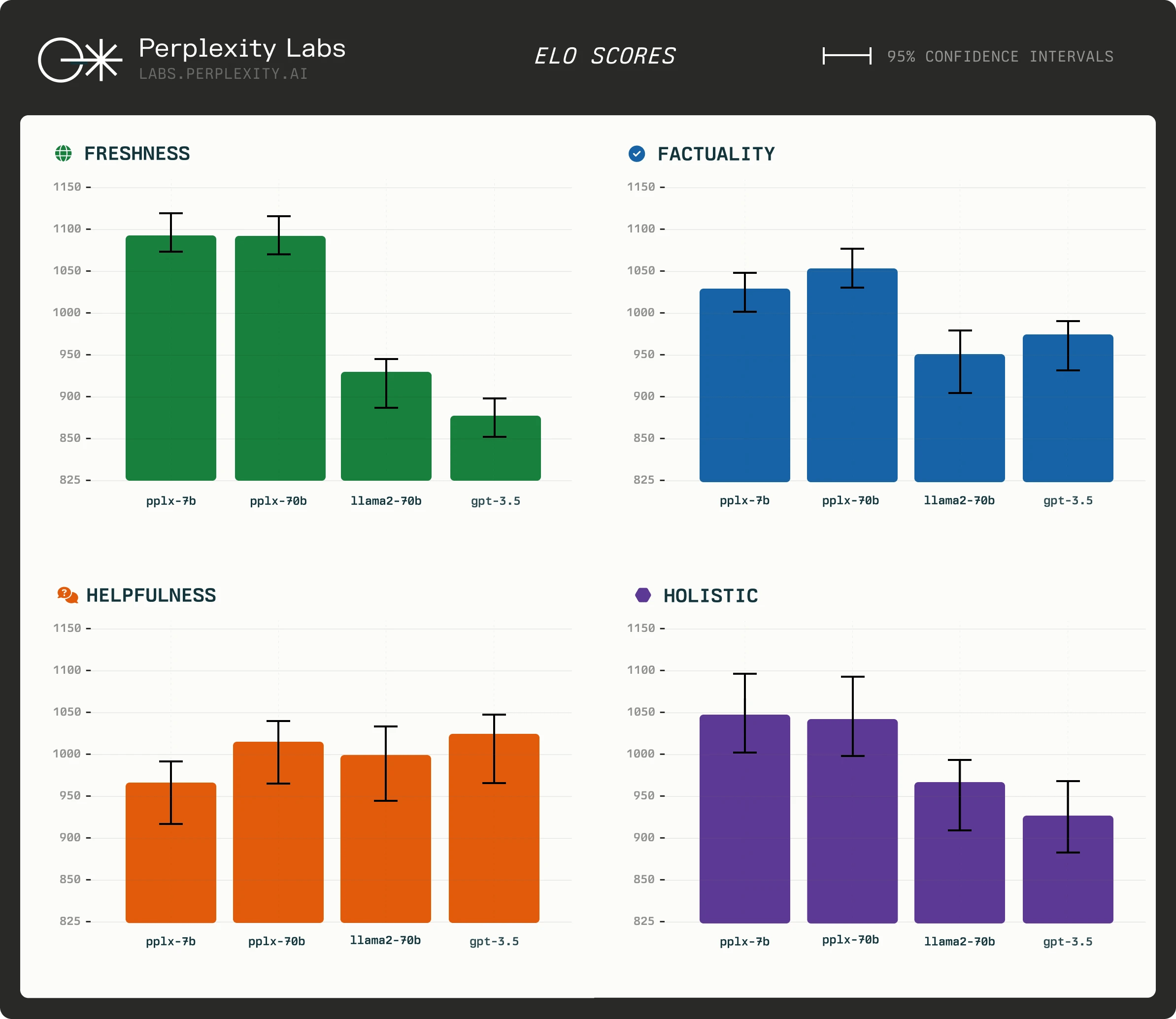

To demonstrate the efficacy of its new LLMs, Perplexity hired some human contractors to evaluate responses to questions based on a set of three criteria: helpfulness, factuality (also called accuracy by Perplexity), and freshness (the latter referring to how up-to-date the information was).

The contractors were shown responses from two models selected at random, some from Perplexity’s new PPLX online LLMs and others from Meta’s Llama 2 or OpenAI’s GPT-3.5 Turbo, and asked to choose which of the two responses they preferred.

Then, Perplexity extrapolated from the human contractors’ responses using a method called Elo scoring, and found that its models performed better than both OpenAI’s and Meta’s raw models when it came to “freshness” and “factuality.” GPT-3.5 still outperformed the PPLX and raw Llama 2 models when it came to “helpfulness,” or how useful the contractors found the LLM responses to be.
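For readers unfamiliar with Elo scoring, here is a minimal sketch of how pairwise preferences can be turned into ratings. It uses the standard Elo update rule. The starting rating of 1000, the K-factor of 32, and the model names are illustrative assumptions, and the article does not say which settings or exact procedure Perplexity used.

```python
from collections import defaultdict


def elo_ratings(comparisons, k=32.0, base=1000.0):
    """Compute Elo ratings from a sequence of (winner, loser) pairs.

    Each pair records one human preference between two model responses.
    """
    ratings = defaultdict(lambda: base)
    for winner, loser in comparisons:
        # Probability the winner was expected to win, given current ratings.
        expected_win = 1.0 / (1.0 + 10 ** ((ratings[loser] - ratings[winner]) / 400.0))
        # An upset (low expected_win) moves the ratings more than an expected result.
        delta = k * (1.0 - expected_win)
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)


# Hypothetical votes: model_a is preferred twice, model_b once.
votes = [("model_a", "model_b"), ("model_a", "model_b"), ("model_b", "model_a")]
scores = elo_ratings(votes)
```

Because every update adds to one rating exactly what it subtracts from the other, the total stays constant, and the model preferred more often ends up with the higher score.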

“Overall, the evaluation results display that our PPLX models can match and even outperform gpt-3.5 and llama2-70b on Perplexity-related use cases, particularly for providing accurate and up-to-date responses,” the company writes in its blog post describing the new models.

How to use and implications

The new PPLX online LLMs are available now for individuals and organizations to use through Perplexity’s API website and by following the documentation posted there. In addition, Perplexity notes in its blog post that the API is moving from beta testing availability to general public availability.
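As a rough illustration of what a call might look like, the sketch below builds (but does not send) an HTTP request for the pplx-7b-online model. The endpoint URL, the chat-style `messages` payload, and the bearer-token header are assumptions not confirmed by this article, so check them against Perplexity's API documentation before relying on them. `build_request` is a hypothetical helper name.

```python
import json
import urllib.request

# Assumed endpoint; verify against Perplexity's API documentation.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_request(api_key: str, question: str, model: str = "pplx-7b-online") -> urllib.request.Request:
    """Build a POST request carrying one user question (payload shape is assumed)."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("YOUR_API_KEY", "What happened in AI news today?")
# To actually send it: urllib.request.urlopen(request), with a valid key.
```

Building the request separately from sending it makes it possible to inspect the payload without spending API credit.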

However, there is a cost: although the new models are built on free, open source models, Perplexity is charging for the search and web indexing tech it has added to them. Perplexity charges $20 USD monthly or $200 annually for its Pro subscription tier, which will now grant users a $5 monthly credit that they can apply toward the Perplexity API to get access to the PPLX models.

Beyond that, users will need to pay Perplexity for additional API calls (accessing the models with a query or prompt). Perplexity hasn’t provided public pricing information. Instead, it directs interested parties to reach out via email at: api@perplexity.ai.

While uptake of the new models by individuals and businesses, for direct usage or in new applications, remains to be seen, Perplexity has already won some ardent fans who believe it is the future of search, including venture capitalist (VC) investor Jeremiah Owyang of Blitzscaling Ventures, who says he has “no financial tie” to the company.

With Google Bard already stumbling due to some controversies and bad reviews, and Google’s follow-up GPT-killer Gemini reportedly delayed, the moment is ripe for Perplexity to establish itself as an alternative vision of the future of search: one in which an AI assistant converses with you and surfaces answers from the web, instead of users sorting through the search results themselves to find the best ones.

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.