The big picture: Competing standards could soon emerge as companies like Microsoft, Intel, Qualcomm, and Apple prepare to promote PCs and other devices that prioritize on-device AI operations. Microsoft and Intel recently outlined what they think should be classified as an “AI PC,” but the AI sector’s current leader, Nvidia, has different ideas.

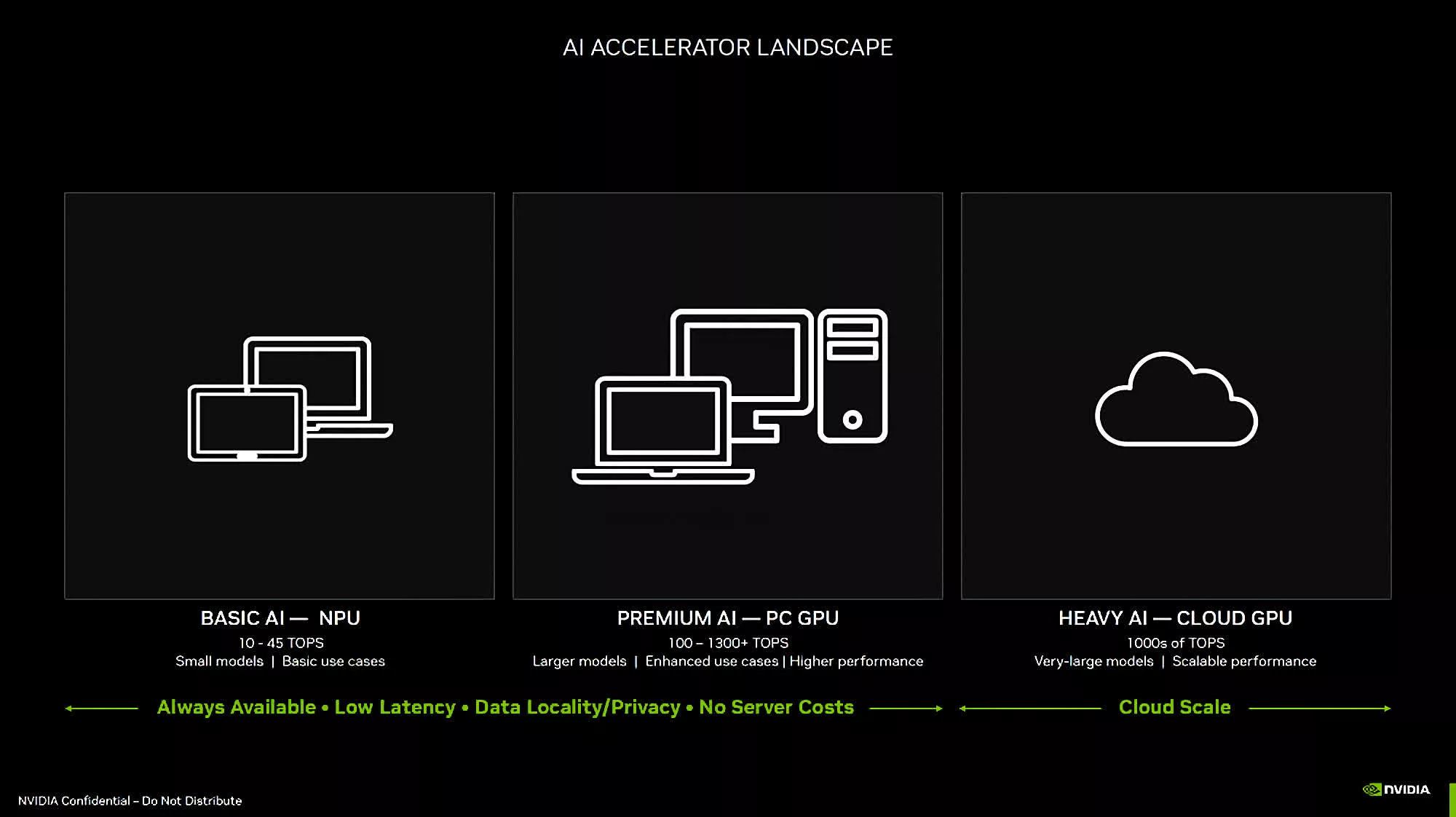

A recently leaked internal presentation from Nvidia explains the company’s apparent preference for discrete GPUs over Neural Processing Units (NPUs) for running local generative AI applications. The graphics card giant may view rival companies’ NPUs as a threat, given that its earnings have skyrocketed since its processors became integral to running large language models.

Since launching its Meteor Lake CPUs late last year, Intel has tried to push laptops featuring those processors and their embedded NPUs as a new class of “AI PC” designed to perform generative AI operations without relying on massive cloud data centers. Microsoft and Qualcomm plan to shepherd more AI PCs into the market later this year, and Apple expects to jump on the bandwagon in 2024 with its upcoming M4 and A18 Bionic processors.

Microsoft is trying to promote its services as integral to the new trend by listing its Copilot virtual assistant and a new Copilot key as requirements for all AI PCs. However, Nvidia thinks its RTX graphics cards, which have been on the market since 2018, are much better suited for AI tasks, implying that NPUs are unnecessary and that millions of “AI PCs” are already in circulation.

Microsoft claims that next-generation AI PCs will need AI performance of at least 40 trillion operations per second (TOPS), but Nvidia’s presentation claims that RTX GPUs can already reach 100 to 1,300 TOPS. The GPU manufacturer said that currently available chips like the RTX 30- and 40-series graphics cards are excellent tools for content creation, productivity, chatbots, and a wide range of other applications built on large language models. For such tasks, the mobile GeForce RTX 4050 can supposedly outperform Apple’s M3 processor, and the desktop RTX 4070 achieves “flagship performance” in Stable Diffusion 1.5.

To showcase the unique capabilities of its technology, Nvidia has rolled out a major update for ChatRTX. This chatbot, powered by Nvidia’s TensorRT-LLM, runs locally on any PC equipped with an RTX 30- or 40-series GPU and at least 8 GB of VRAM. What sets ChatRTX apart is its ability to answer queries in multiple languages by scanning documents or YouTube playlists that users provide. It supports text, PDF, DOC, DOCX, and XML files.

Of course, just because Nvidia says it’s the surefire leader in on-device AI performance doesn’t mean competitors will throw in the towel and say, “You win.” On the contrary, competition and R&D in the NPU market will only grow fiercer as companies try to unseat Nvidia.