Mistral, the one-year-old AI startup that made headlines with its unique Word Art logo and the largest ever seed round in European history, has come out with Mistral Large – its latest and largest model for enterprises – and a notable strategic partnership with Microsoft.

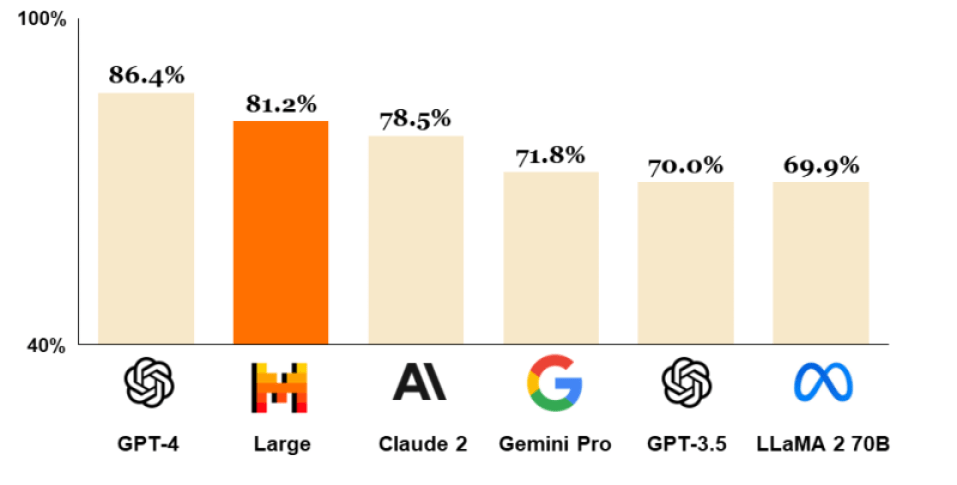

Available starting today, Mistral Large is a text generation model designed to handle complex multilingual reasoning tasks, including text understanding, transformation and code generation. According to the massive multitask language understanding (MMLU) benchmark results shared by the company, it ranks second among models generally available through an API, behind only GPT-4.

Mistral says the large model will be available primarily via its API but also through Azure AI, thanks to the new partnership with Microsoft. The company also launched an optimized version of Mistral Small, its smaller model, and a chat app that lets business teams try out its models.

Mistral Large: What to expect?

As a multilingual model, Mistral Large will understand, reason with and generate text with native fluency not only in English but also in other languages, starting with French, Spanish, German and Italian. This is not new, as Google and OpenAI also offer multilingual models, but Mistral emphasizes that its offering has “a nuanced understanding of grammar and cultural context” for all of these languages, which it says will lead to better results.

The model has a context window of 32K tokens, which lets it process large documents and recall information from them precisely. It also follows instructions precisely, which allows developers to design their own moderation policies, and it supports function calling natively.

While it remains to be seen how the new model performs in the real world, especially against bigger offerings like Gemini 1.5, which supports up to 1 million tokens, Mistral says the model holds up well against rival offerings.

In MMLU tests, for instance, Mistral Large had an accuracy of 81.2%, sitting right behind GPT-4’s 86.4%. The benchmark did not include Gemini Pro 1.5, but Gemini Pro 1.0 scored 71.8%. Llama 2 70B also sat behind with a score of 69.9%.
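To make the size of those gaps concrete, here is a minimal Python sketch that ranks the four MMLU scores quoted above and computes how far each model sits behind the leader. The scores come from the figures reported in this article; the `mmlu_scores` dictionary and the `rank_with_gaps` helper are hypothetical names introduced only for illustration.

```python
# MMLU accuracies (percent) as quoted in the article.
# Gemini Pro 1.5 is absent because the benchmark did not include it.
mmlu_scores = {
    "GPT-4": 86.4,
    "Mistral Large": 81.2,
    "Gemini Pro 1.0": 71.8,
    "Llama 2 70B": 69.9,
}


def rank_with_gaps(scores):
    """Return (model, score, gap_to_leader) tuples sorted from best to worst.

    Hypothetical helper for illustration; gaps are in percentage points.
    """
    ordered = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    leader_score = ordered[0][1]
    return [
        (name, score, round(leader_score - score, 1))
        for name, score in ordered
    ]


ranking = rank_with_gaps(mmlu_scores)
for position, (name, score, gap) in enumerate(ranking, start=1):
    print(f"{position}. {name}: {score}% ({gap} points behind the leader)")
```

On these figures, Mistral Large trails GPT-4 by roughly five percentage points, while Gemini Pro 1.0 and Llama 2 70B sit roughly ten points or more behind Mistral Large.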

The Llama 2 70B model from Meta also trailed Mistral Large in language-specific tests.

Similar rankings against Llama and the GPT family were seen in the GSM8K math benchmark, but coding appeared to be a weak point for Mistral Large. In the HumanEval benchmark for coding performance, the new model scored 45.1%, well behind GPT-3.5, GPT-4 and Gemini Pro 1.0.

The company has also launched a new version of its smaller model, Mistral Small, with optimizations for latency and cost. It outperforms Mixtral 8x7B and serves as an intermediary solution between the company’s open-weight offering and Mistral Large.

Strategic partnership with Microsoft and new Chat app

Building models that perform well is crucial, but so is getting them to the right customers when they need them, which is critical for growth. This is where Mistral’s strategic partnership with Microsoft comes in.

Under this engagement, all open and commercial models offered by Mistral, including the new large model, will be made available on Azure AI Studio and Azure Machine Learning. This makes Mistral only the second company to make its commercial language models available on Azure.

Mistral says Azure users can tap the models with their existing credits and use them with “as seamless a user experience as with its own APIs.” The company will also give customers coming via Azure direct access to its support team.

“At Mistral AI, we make generative AI ubiquitous – through our open-source models and by bringing our commercial models where developers create. We are very proud to announce the availability of Mistral Large on Azure AI. Microsoft’s trust in our model is a step forward in our journey to put frontier AI in everyone’s hands,” Arthur Mensch, co-founder and CEO of Mistral AI, said in a statement.

That said, Microsoft will not be Mistral’s only distribution partner. A few days ago, Amazon Web Services (AWS) Principal Developer Advocate Donnie Prakoso also announced that the French startup’s open models will come to Amazon Bedrock, the AWS managed service for gen AI offerings and application development. However, he did not share exactly when that would happen.

To gain the trust of companies and eventually bring them on board via these channels, Mistral is also launching a chat app, a multilingual conversational assistant that shows what teams can build with its models and deploy in their respective business environments.

Users can create an account on Mistral’s website for beta access to the chat app, le Chat, and interact with the models the company has on offer in a pedagogical and fun way. However, the company cautions that the assistant cannot access the internet and may deliver inaccurate or outdated information in some cases. The company is also building an enterprise-centric version of the assistant with self-deployment capabilities and fine-grained moderation.

“Thanks to a tunable system-level moderation mechanism, le Chat warns you in a non-invasive way when you’re pushing the conversation in directions where the assistant may produce sensitive or controversial content,” the company noted in another blog post.

According to Crunchbase data, Mistral has raised more than $500 million across seed and Series A rounds led by well-known investors such as Lightspeed Venture Partners and Andreessen Horowitz (a16z).
