State-affiliated online attackers are finding ChatGPT and other AI tools useful for boosting their productivity and for streamlining and accelerating their work, but they have yet to unleash the technology’s full potential.

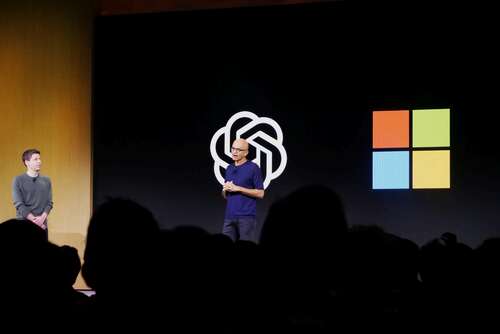

Microsoft and OpenAI say they’re doing their best to keep it from happening.

That’s the takeaway from a new research report issued by the companies this morning, detailing the use of large language models by nation-state attackers affiliated with China, Russia, North Korea, and Iran.

“These actors generally sought to use OpenAI services for querying open-source information, translating, finding coding errors, and running basic coding tasks,” OpenAI said in a post Wednesday morning.

The San Francisco-based company said it worked with Microsoft to identify and terminate accounts associated with five state-affiliated groups.

Microsoft and OpenAI “have not yet observed particularly novel or unique AI-enabled attack or abuse techniques resulting from threat actors’ usage of AI,” Microsoft said in its own post. “However, Microsoft and our partners continue to study this landscape closely.”

Microsoft announced several principles for disrupting the malicious use of its AI technologies and APIs. In addition to detecting and suspending the accounts of state-affiliated threat actors, the company says it will notify other service providers, collaborate with key stakeholders, and issue public reports about its findings.

The Redmond company also discussed how AI can help defend against online attacks. Microsoft said its Copilot for Security product, currently in customer preview, “showed increased security analyst speed and accuracy, regardless of their expertise level, across common tasks like identifying scripts used by attackers, creating incident reports, and identifying appropriate remediation steps.”