More news from Meta Platforms today, parent company of Facebook, Instagram, WhatsApp and Oculus VR (among others): hot on the heels of releasing a new voice cloning AI called Audiobox, the company announced that this week it is beginning a small U.S. trial of a new multimodal AI designed to run on its Ray-Ban Meta smart glasses, made in partnership with the signature eyewear company Ray-Ban.

The new Meta multimodal AI is set to launch publicly in 2024, according to a video post on Instagram by Andrew Bosworth (aka “Boz”), the longtime Facebook-turned-Meta chief technology officer.

“Next year, we’re going to launch a multimodal version of the AI assistant that takes advantage of the camera on the glasses in order to give you information not just about a question you’ve asked it, but also about the world around you,” Boz stated. “And I’m so excited to share that starting this week, we’re going to be testing that multimodal AI in beta via an early access program here in the U.S.”

Boz did not explain in his post how to join the program.

The glasses, the latest version of which was introduced at Meta’s annual Connect conference in Palo Alto back in September, start at $299 and already ship with a built-in AI assistant. That assistant is fairly limited, however: it cannot intelligently respond to video or photos, much less to a live view of what the wearer is seeing, despite the glasses having built-in cameras.

Instead, the assistant was designed to be controlled by voice alone, with the wearer speaking to it much as they would to a voice assistant such as Amazon’s Alexa or Apple’s Siri.

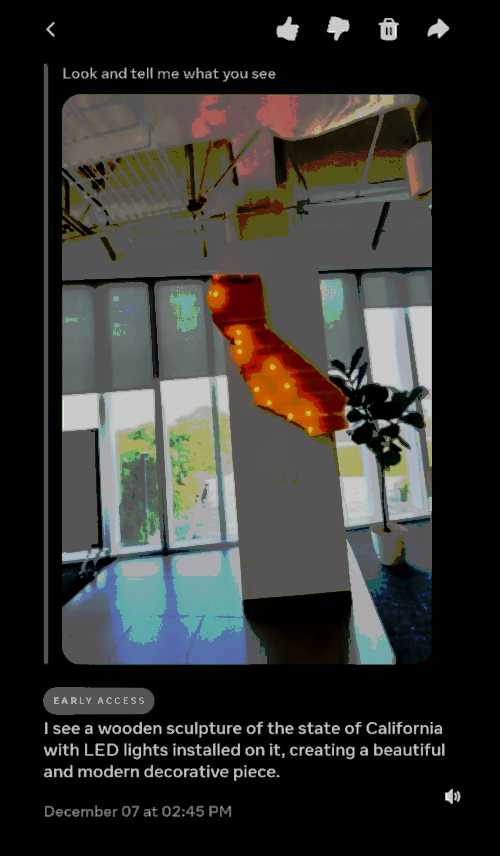

Boz showcased one of the new capabilities of the multimodal version in his Instagram post with a video clip of himself wearing the glasses and looking at an illuminated piece of wall art depicting the state of California in an office. Interestingly, he also appeared to be holding a smartphone, suggesting the AI may require a phone paired with the glasses to work.

A screen showing the apparent user interface (UI) of the new Meta multimodal AI showed that it successfully answered Boz’s question “Look and tell me what you see” and identified the art as a “wooden sculpture” which it called “beautiful.”

The advance is perhaps to be expected given Meta’s wholesale embrace of AI across its products and platforms, and its promotion of open source AI through its signature large language model (LLM), Llama 2. But it is interesting to see its first attempt at multimodal AI arrive not as an open source model on the web, but through a device.

Generative AI’s advance into the hardware category has been slow so far, with a few smaller startups — including Humane with its “Ai Pin” running OpenAI’s GPT-4V — making the first attempts at dedicated AI devices.

Meanwhile, OpenAI has offered GPT-4V, its own multimodal AI (the “V” stands for “vision”), through its ChatGPT app for iOS and Android, though access to the model requires a ChatGPT Plus ($20 per month) or Enterprise subscription (variable pricing).

The advance also calls to mind Google’s ill-fated trials of Google Glass, an early smart glasses prototype from the 2010s that was derided for its fashion sense (or lack thereof), its conspicuous early-adopter user base (which spawned the term “Glassholes”) and its limited practical use cases, despite heavy hype prior to its launch.

Will Meta’s new multimodal AI for Ray-Ban Meta smart glasses be able to avoid the Glasshole trap? Has enough time passed, and have sensibilities about strapping a camera to one’s face changed enough, for a product of this nature to succeed?

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact.