Menlo Ventures sees the modern AI stack following the same growth trajectory and value-creation potential as public cloud platforms, which quickly scaled to become the backbone of enterprise computing infrastructure.

The venture capital firm says today's foundation AI models closely resemble the early days of public cloud services, and that getting the intersection of AI and security right is critical if this evolving market is to reach its full potential.

Menlo Ventures’ latest blog post, “Part 1: Security for AI: The New Wave of Startups Racing to Secure the AI Stack,” explains how the firm sees AI and security combining to help drive new market growth.

“One analogy I’ve been drawing is that these foundation models are very much like the public clouds that we’re all familiar with now, like AWS and Azure. But 12 to 15 years ago, when that infrastructure as a service layer was just getting started, what you saw was massive value creation that spawned after that new foundation was created,” Rama Sekhar, Menlo Ventures’ new partner focusing on cybersecurity, AI and cloud infrastructure investments, told VentureBeat.


“We think something very similar is going to happen here where the foundation model providers are at the bottom of the infrastructure stack,” Sekhar said.

Solve the AI for security paradox to drive faster generative AI growth

Throughout VentureBeat’s interview with Sekhar and Feyza Haskaraman, principal, Cybersecurity, SaaS, Supply Chain and Automation, one theme stood out: AI models sit at the core of a new, modern AI stack that relies on a real-time, steady stream of sensitive enterprise data to self-learn. Sekhar and Haskaraman explained that the proliferation of AI is leading to an exponential increase in the size of threat surfaces, with LLMs being a primary target.

Sekhar and Haskaraman say that securing models, including LLMs, with current tools is impossible, which creates a trust gap in enterprises and slows generative AI adoption. They see this trust gap as the reason for the disparity between how much hype gen AI gets in enterprises and how much it is actually adopted. Attackers sharpening their tradecraft with AI-based techniques widen the gap further, underscoring why enterprises are increasingly concerned about losing the AI war.

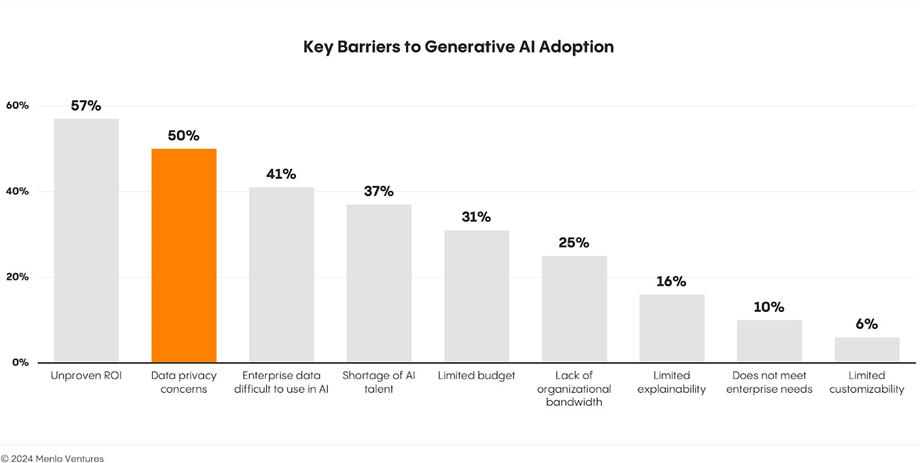

There are formidable trust gaps to close for gen AI to reach its market potential. Sekhar and Haskaraman believe that solving the challenges of improving security for AI will help close them. Menlo Ventures’ survey found that unproven ROI, data privacy concerns and the perception that enterprise data is difficult to use with AI are the top three barriers to greater generative AI adoption.

Improving security for AI will directly help address data privacy concerns, and getting security for AI right can also contribute to solving the other two barriers. Sekhar and Haskaraman pointed out that OpenAI’s AI models are increasingly becoming the target of cyberattacks: just last November, OpenAI confirmed a DoS attack that impacted its API and ChatGPT traffic and caused multiple outages.

Governance, Observability and Security are table stakes

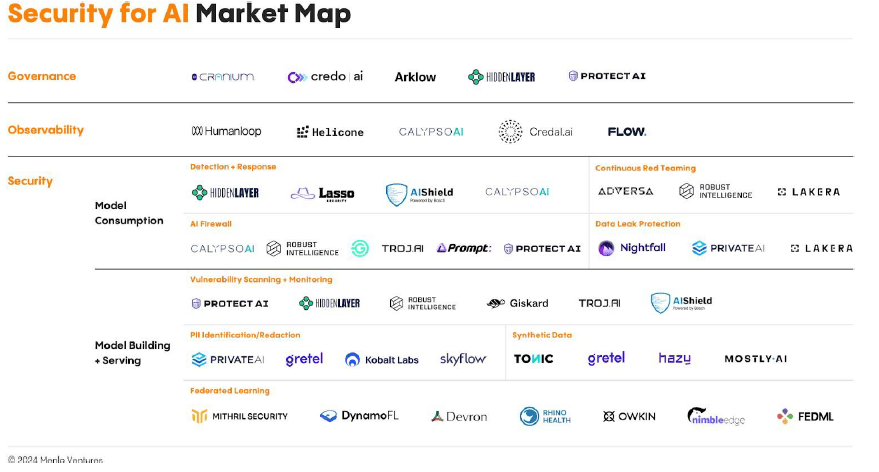

Menlo Ventures has gone all-in on the belief that governance, observability and security are the foundations security for AI needs in place to scale. They are the table stakes on which the firm’s market map is based.

Governance tools are seeing rapid growth today. VentureBeat has also seen exceptional growth among AI-based governance and compliance startups that are entirely cloud-based, giving them time-to-market and global-scale advantages. Menlo Ventures highlights governance tools, including Credo and Cranium, that help businesses keep track of AI services, tools and owners, whether they were built in-house or by outside companies. These tools perform risk assessments of safety and security measures, helping businesses identify the risks they face. Making sure everyone in an organization knows how AI is being used is the first and most important step in protecting and observing large language models (LLMs).

Menlo Ventures sees observability tools as critical for monitoring models while also giving enterprises the ability to aggregate logs on access, inputs and outputs. The goal of these tools is to detect misuse and provide full auditability. Menlo Ventures cites Helicone and, for security-specific use cases, CalypsoAI as examples of startups fulfilling these requirements as part of the solution stack.

Security solutions are centered on establishing trust boundaries, or guardrails. Sekhar and Haskaraman write that model consumption, for example, requires rigorous control over both internal and external models. Menlo Ventures is especially interested in AI firewall providers, including Robust Intelligence and Prompt Security, which moderate input and output validity, protect against prompt injections and detect personally identifiable information (PII) and other sensitive data. Other companies of interest include Private AI and Nightfall, which help organizations identify and redact PII from inputs and outputs, and Lakera and Adversa, which aim to automate red-teaming activities so organizations can test the robustness of their guardrails.

Threat detection and response solutions such as HiddenLayer and Lasso Security, which work to detect anomalous and potentially malicious behavior targeting LLMs, are also of interest. The Security for AI Market Map also includes DynamoFL and FedML for federated learning; Tonic and Gretel, which generate synthetic data so organizations don’t have to worry about feeding sensitive data into LLMs; and Private AI and Kobalt Labs, which help identify and redact sensitive information from LLM data stores.

Solving Security for AI first – in DevOps

Open-source code makes up a large percentage of any enterprise application, and securing software supply chains is another area where Menlo Ventures continues to look for opportunities to close enterprises’ trust gap.

Sekhar and Haskaraman believe that security for AI needs to be embedded into the DevOps process so thoroughly that it becomes innate to the structure of enterprise applications. In their interview with VentureBeat, they argued that security for AI must become so pervasive that the value and defense it delivers help close the trust gap standing in the way of gen AI adoption at scale.
