More than 90 researchers — including a Nobel laureate — have signed on to a call for the scientific community to follow a set of safety and security standards when using artificial intelligence to design synthetic proteins.

The community statement on the responsible development of AI for protein design is being unveiled today in Boston at Winter RosettaCon 2024, a conference focusing on biomolecular engineering. The statement follows up on an AI safety summit that was convened last October by the Institute for Protein Design at the University of Washington School of Medicine.

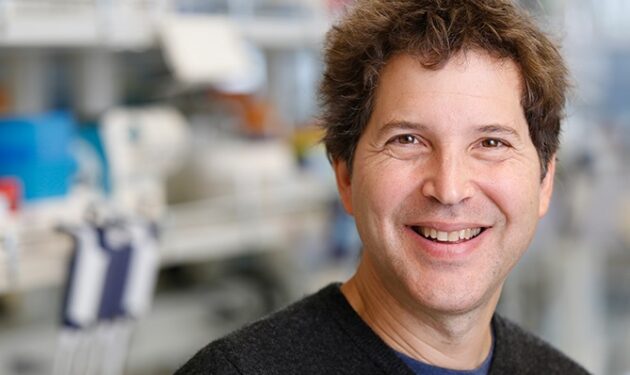

“I view this as a crucial step for the scientific community,” the institute’s director, David Baker, said in a news release. “The responsible use of AI for protein design will unlock new vaccines, medicines and sustainable materials that benefit the world. As scientists, we must ensure this happens while also minimizing the chance that our tools could ever be misused to cause harm.”

The community statement calls on researchers to conduct safety and security reviews of new AI models for protein design before their release, and to issue reports about their research practices.

It says AI-assisted technologies should be part of a rapid response to biological emergencies such as future pandemics. And it urges researchers to participate in security measures around DNA manufacturing — for example, checking the hazard potential of each synthetic gene sequence before it’s used in research.

The process behind the list of principles and voluntary commitments parallels the Asilomar conference that drew up guidelines for the safe use of recombinant DNA in 1975. “That was our explicit model for the summit we hosted in October,” said Ian Haydon, head of communications for the Institute for Protein Design and a member of the panel that prepared the statement.

Among the first researchers to sign on to the statement is Caltech biochemist Frances Arnold, who won a share of the Nobel Prize in chemistry in 2018 for pioneering the use of directed evolution to engineer enzymes. Arnold is also a co-chair of the President’s Council of Advisers on Science and Technology, or PCAST.

Other prominent signers include Eric Horvitz, Microsoft’s chief scientific officer and a member of PCAST; and Harvard geneticist George Church, who’s arguably best known for his efforts to revive the long-extinct woolly mammoth.

Haydon said other researchers who are active developers of AI technologies for biomolecular structure prediction or design are welcome to add their names to the online list of signatories. There’s a separate list of supporters that anyone can add their name to. (Among the first supporters to put their names on the list is Mark Dybul, who was U.S. Global AIDS Coordinator under President George W. Bush and is now CEO of Renovaro Biosciences.)

The campaign’s organizers say the fact that a person has signed the statement shouldn’t be read as implying any endorsement by the signer’s institution — and there’s no intent to enforce the voluntary commitments laid out in the statement. That raises a follow-up question: Will the statement have any impact?

“Implementation is the next and most important step,” Haydon told GeekWire in an email. “This new pledge shows that many scientists want to lead the way on this, in consultation with other experts. We anticipate close collaboration between researchers and science funders, publishers and policymakers.”

The Institute for Protein Design is among the world’s leaders in biomolecular engineering: For more than a decade, Baker and his colleagues at the institute have been using computational tools — including a video game — to test out ways in which protein molecules can be folded into “keys” to unlock therapeutic benefits or lock out harmful viruses. In 2019, the institute won a five-year, $45 million grant from the Audacious Project at TED to support its work.

Another leader in the field is Google DeepMind, which used its AlphaFold AI program to predict the 3-D structures of more than 200 million proteins in 2022. DeepMind’s researchers weren’t among the early signers of the community statement; however, Google AI has published its own set of commitments relating to AI research.

“In line with Google’s AI principles, we’re committed to ensuring safe and responsible AI development and deployment,” a Google DeepMind spokesperson told GeekWire in an email. “This includes conducting a comprehensive consultation prior to releasing our protein-folding model AlphaFold, involving a number of external experts.”

Efforts to manage the potential risks of artificial intelligence and maximize the potential benefits have been gathering momentum over the past year:

- Last July, a group of seven tech companies — Amazon, Anthropic, Google, Inflection, Meta, Microsoft and OpenAI — made a series of voluntary commitments with the White House to develop AI responsibly.

- Last month, the University of Washington announced the appointment of a Task Force on Artificial Intelligence to address the ethical and safety issues surrounding AI and develop a university-wide AI strategy.

- This week, the Washington State Legislature approved a plan to create an Artificial Intelligence Task Force that would help lawmakers better understand the benefits and risks of AI.

Haydon sees the campaign to promote the responsible use of AI in biomolecular design as part of that bigger trend.

“This is far from the only community grappling with the benefits and risks of AI,” he said. “We welcome input from others who are thinking through similar issues. But each niche of AI has its own benefits, risks and complexities. What works for large language models or AI trained on hospital data won’t necessarily apply in domains like chemistry or molecular biology. The challenge will be to create the right solutions for each context.”