Annapurna Labs co-founder Nafea Bshara knows semiconductors and appreciates a good red wine. Amazon distinguished engineer James Hamilton has an affinity for industry-changing ideas, and likes meeting with smart entrepreneurs.

That’s how they ended up at the historic Virginia Inn restaurant and bar at Seattle’s Pike Place Market 10 years ago, in the fall of 2013, engaged in a conversation that would ultimately change the course of Amazon’s cloud business.

Their meeting, and Amazon’s eventual acquisition of Annapurna, accelerated the tech giant’s initiative to create its own processors, laying the foundation for a key component of its current artificial intelligence strategy.

Amazon’s custom silicon, including its chips for advanced artificial intelligence, will be in the spotlight this week as Amazon Web Services tries to stake its claim in the new era of AI at its re:Invent conference in Las Vegas.

The event comes two weeks after Microsoft announced its own pair of custom chips, including the Maia AI Accelerator, designed with help from OpenAI, before the ChatGPT maker’s recent turmoil. Microsoft describes its custom silicon as a final “puzzle piece” to improve the performance of its cloud infrastructure.

In AI applications, ChatGPT has put Amazon on its heels, especially when OpenAI’s chatbot is compared to the conversational abilities of the Alexa voice assistant.

In AI’s “middle layer,” as Amazon CEO Andy Jassy calls it, Amazon is looking to make its mark with AWS Bedrock, offering access to multiple large language models.

But the foundation of Amazon’s strategy is its custom AI chips, Trainium and Inferentia, for training and running large AI models.

They’re part of the trend of big cloud platforms making their own silicon, optimized to run at higher performance and lower cost in their data centers around the world. Although Microsoft just went public with its plans, Google has developed multiple generations of its Tensor Processing Units, used by Google Cloud for machine learning workloads, and it’s reportedly working on its own Arm-based chips.

In the realm of AI, these chips supply an alternative to general-purpose silicon. For example, AWS customers have embraced Nvidia’s widely used H100 GPUs as part of Amazon’s EC2 P5 instances for deep learning and high-performance computing, Jassy said on the company’s quarterly earnings conference call in August.

“However, to date, there’s only been one viable option in the market for everybody, and supply has been scarce,” Jassy added at the time. “That, along with the chip expertise we’ve built over the last several years, prompted us to start working several years ago on our own custom AI chips.”

Amazon’s AI chips are part of a lineage of custom silicon that goes back to that conversation between Bshara and Hamilton in the corner booth a decade ago.

‘That’s the future.’

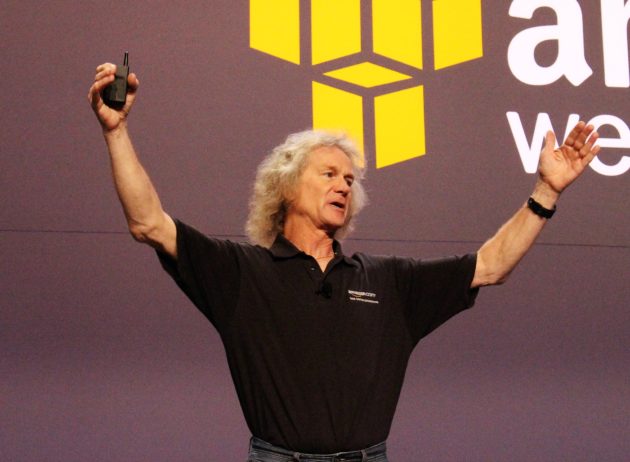

Hamilton is a widely respected engineer, an Amazon senior vice president who joined the cloud giant from Microsoft in 2010. He was named to Amazon’s senior leadership team in 2021 and continues to report directly to Jassy.

Returning to the Virginia Inn for a recent interview with GeekWire, Hamilton said he was originally drawn to Amazon after recognizing the potential of Amazon S3 (Simple Storage Service) for online services. Ironically, that realization came after Microsoft’s Bill Gates and Ray Ozzie asked him to experiment by writing an app against S3.

“I get this bill just before the meeting — it was $7.23. I spent $7.23 in computing, writing this app and testing the heck out of it,” he recalled. “It just changed my life. I just realized, that’s the future.”

It was an early indication of the price and performance advantages available to developers and businesses in the cloud. But after a few years at Amazon, Hamilton realized the company needed to make another leap.

Just weeks before his 2013 meeting with Bshara, Hamilton had written an internal paper for Jeff Bezos and then-AWS chief Jassy, a “six-pager” as Amazon calls them, making the case for AWS to start developing its own custom silicon.

“If we’re not building a chip, we lose control to create,” Hamilton recalls thinking at the time, describing the move as a natural next evolution for the company as servers transitioned to system-on-a-chip designs.

As he saw it, Amazon needed to create at the silicon level to preserve control over its infrastructure and costs; to avoid depending on other companies for critical server components; and to deliver more value to customers by building capabilities like security and workload optimization directly into the hardware.

As Arm processors were reaching huge volumes in mobile and Internet of Things devices, Hamilton believed this would lead to better server processors with more research-and-development investment.

An early riser for work, Hamilton often meets in the evenings at local pubs and restaurants with startups, customers and suppliers to hear what they’re working on. Known at the time for traveling the world and working from his boat, he would pick spots where he could park his bike between the office and the marina.

Bshara had started Israel-based Annapurna Labs in 2011 with partners including Hrvoye (Billy) Bilic and Avigdor Willenz, founder of chip-design company Galileo Technologies Ltd.

He was introduced to Hamilton by a mutual friend, and they agreed to meet for happy hour, in line with Hamilton’s tradition. Bshara remembers printing out a series of slides at the local UPS Store, and positioning himself in the booth so as not to disclose the contents to the rest of the restaurant as he showed them to Hamilton.

Hamilton recalls being quickly impressed with what the Israeli startup was doing, recognizing the potential for its designs to form the basis for the second generation of Amazon’s workhorse Nitro server chips, the first version of which had been adapted from an existing design from the Cavium semiconductor company.

Bshara remembers Hamilton, in that initial meeting, asking if Annapurna could go even further, and develop an Arm-based server processor. The Annapurna co-founder held firm at the time: the market wasn’t ready yet.

It was a sign that he was being realistic, and not just saying whatever he thought the senior Amazon engineer wanted to hear. Bshara followed up after the meeting with an email detailing his reasoning at the time.

That was the spark of their initial partnership on Nitro, which ultimately led to Amazon acquiring Annapurna in 2015 for a reported $350 million. Amazon says there are now more than 20 million Nitro chips in use.

AWS launched its Arm-based CPU, Graviton, developed by Annapurna, in 2018. When they decided to make the chip, Hamilton reminded Bshara about what he said about Arm servers when they had originally met.

“I told him, you’re right,” Bshara recalled, explaining that the market was now ready.

Amazon’s advantages and challenges

Annapurna gave Amazon an early edge in a field that can feel like a high-wire act.

Designing chips is “extremely hard — it’s unlike software,” Bshara explained. “There’s zero room for errors. Because if you have a bug, and you spin a chip, you’ve lost nine months. With software, if you have a bug, you release a new version. Here, you have to go print a new version.”

One reason Amazon is eager to talk about this history is to counter the widely held perception that it was caught flat-footed by the rise of generative artificial intelligence. This will be a recurring theme at re:Invent in Las Vegas this week, as AWS CEO Adam Selipsky and team show their latest products and features.

“We absolutely want to be the best place to run generative AI,” said Dave Brown, the Amazon vice president who runs AWS EC2 (Elastic Compute Cloud), the core service at the heart of the company’s cloud computing platform. “That is such a broad area, when you think about what customers are trying to do.”

Even without using Amazon’s AI chips, he said, the company’s Nitro processor plays a key role in significantly increasing network throughput in the Nvidia-powered EC2 P5 instances commonly used for AI training.

But the custom AI chips give it an even more granular level of control.

“Because we own the entire thing with Trainium and Inferentia, there’s no issue that we can’t debug all the way down to the hardware,” he said. “We’re able to build incredibly stable systems at massive, massive scale with custom silicon.”

Custom chips are essential for the major cloud platforms like AWS, Azure, and Google Cloud due to the massive scale of the workloads involved, said James Sanders, principal analyst at CCS Insight.

“From a data center planning standpoint, you can start to run into a lot of trouble just throwing as many GPUs into server racks as possible,” he said. “That becomes a heat-dissipation issue, it becomes a power consumption issue.”

Custom chips allow for better optimization of workloads, lower power consumption, and improved security compared to commodity chips. Power-hungry GPUs also have some unnecessary features for AI workloads. Amazon was early to recognize this fact, and it has a head start in custom AI chips with Trainium and Inferentia.

However, Sanders said, the software side of the equation is a key challenge.

Nvidia has a strong position in AI due to CUDA, its software platform for general-purpose computing on GPUs. This gave Nvidia a moat. One of Amazon’s hurdles will be getting AI workloads ported over from CUDA on Nvidia GPUs to run on Amazon chips, he said. This requires significant developer effort and outreach from Amazon.

If developers are locked into CUDA as their programming language, it can be difficult to move existing workloads off of Nvidia GPUs, agreed Patrick Moorhead, CEO and chief analyst at Moor Insights & Strategy, a former AMD vice president of strategy. He described this prospect as “a very heavy lift.”

Amazon’s software abstraction layers and integrated development tools ease this transition when starting new workloads, he said.

Bshara, the Annapurna co-founder, said Amazon recognizes the importance of software familiarity to long-term growth, and the company is putting significant resources into building the software tool chain for its AI chips.

“Many customers see Trainium enablement as a strategic advantage,” Bshara said via email. “We’re excited by how quickly customers are embracing these chips and confident that tooling and support will soon be at least as accessible and familiar to customers as any chip architecture they’ve worked with before.”

The company’s AI chips are used by companies including Airbnb, Snap, and Sprinklr at scale with clear performance and cost benefits, he said.

Anthropic will also use Amazon’s AI chips under their recently announced partnership, in which Amazon will invest up to $4 billion in the startup as a counterpoint to the duo of Microsoft and OpenAI.

Going forward, Moorhead said, Amazon’s biggest challenges will include staying ahead technically with the latest chip architectures as the needs of AI models continue to grow exponentially, and continuing to invest heavily in R&D to compete against dedicated chip companies like Nvidia and AMD.

Amazon took a big risk in developing its own chips, Moorhead said, but it has paid off by resetting the semiconductor industry and sparking new competition across the major cloud platforms. “They went for it, and they did it,” he said. “And they inspired literally everybody else to follow.”