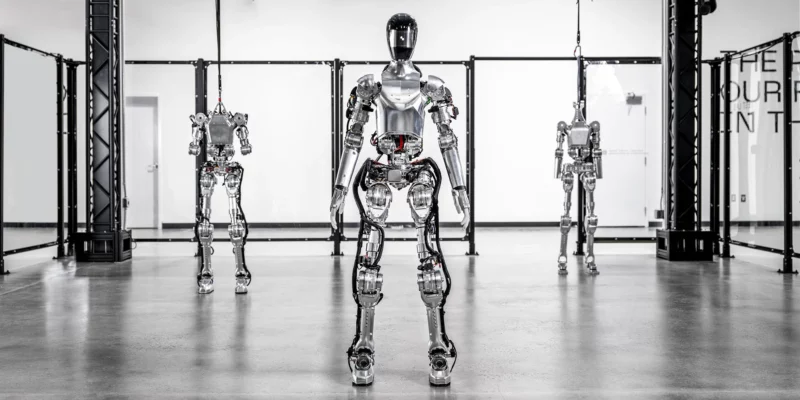

Figure

Humanoid robotics company Figure AI announced it raised an astounding $675 million in a funding round from an all-star cast of Big Tech investors. The company, which aims to commercialize a humanoid robot, now has a $2.6 billion valuation. Participants in the latest funding round include Microsoft, the OpenAI Startup Fund, NVIDIA, Jeff Bezos’ Bezos Expeditions, Parkway Venture Capital, Intel Capital, Align Ventures, and ARK Invest. With all these big-name investors, Figure is officially Big Tech’s favorite humanoid robotics company. The manufacturing industry is taking notice, too. In January, Figure even announced a commercial agreement with BMW to have robots work on its production line.

“In conjunction with this investment,” the press release reads, “Figure and OpenAI have entered into a collaboration agreement to develop next generation AI models for humanoid robots, combining OpenAI’s research with Figure’s deep understanding of robotics hardware and software. The collaboration aims to help accelerate Figure’s commercial timeline by enhancing the capabilities of humanoid robots to process and reason from language.”

With all this hype and funding, the actual robot must be incredible, right? Well, the company is new and only unveiled its first humanoid “prototype,” the “Figure 01,” in October. At that time the company said it represented about 12 months of work. With veterans from “Boston Dynamics, Tesla, Google DeepMind, and Archer Aviation,” the company has a strong starting point.


OK, it’s time to pick up a box, so get out your oversized hands and grab hold.


Those extra-big hands seem to be the focus of the robot. They are just incredibly complex and look to be aiming at a 1:1 build of a human hand.


Just look at everything inside those fingers. It looks like there are tendons of some kind.


Not impressed with this “pooped your pants” walk cycle, which doesn’t really use the knees or ankles.


A lot of the hardware appears to be waiting for software to use it, like the screen that serves as the robot’s face. It only seems to run a screen saver.


The robot appears to be built from solid aluminum and electrically actuated, with a design aiming for an exact 1:1 match for a human. The website says the goal is a 5-foot 6-inch, 130-lb humanoid that can lift 44 pounds. That’s a very small form-over-function package to try to fit all these robot parts into. For alternative humanoid designs, you’ve got Boston Dynamics’ Atlas, which is more of a hulking beast thanks to its function-over-form design. There’s also the more purpose-built “Digit” from Agility Robotics, which has backward-bending bird legs for warehouse work, allowing it to bend down in front of a shelf without having to worry about the knees colliding with anything.

The best insight into the company’s progress is the official YouTube channel, which shows the Figure 01 robot doing a few tasks. The most recent video, from a few days ago, showed a robot doing a “fully autonomous” box-moving task at “16.7 percent” of normal human speed. For a bipedal robot, I have to say the walking is not impressive. Figure has a slow, timid shuffle that only lets it wobble forward at a snail’s pace. The walk cycle is almost entirely driven by the hips. The knees are bent the entire time and always out in front of the robot. The ankles barely move at all. It seems to only be able to walk in a straight line, and turning is a slow stop-and-spin-in-place motion that has the feet pedaling in place the entire time. The feet seem to move in a constant up-and-down motion even when the robot isn’t moving forward, almost as if foot planning just runs on a set timer for balance. It can walk, but it walks about as slowly and awkwardly as a robot can. A lot of the hardware seems built for software that isn’t ready yet.

Figure seems more focused on the hands than anything. The 01 has giant oversized hands that are a close match for a human’s, with five fingers, each with three joints. In January, Figure posted a video of the robot working a Keurig coffee maker. That means flipping up the lid with a fingertip, delicately picking up an easily crushable plastic cup with two fingers, dropping it into the coffee maker, casually pushing the lid down with about three different fingers, and pressing the “go” button with a single finger. It’s impressive to not destroy the coffee maker or the K-cup, but that Keurig is still living a rough life: a few of the robot interactions incidentally lift one side or the other of the coffee maker off the table thanks to way too much force.


For some very delicate hand work, here’s the Figure 01 making coffee. They went and sourced a silver Keurig machine so this image only contains two colors, black and silver.


Time to press the “go” button. Also, is that a wrist-mounted lidar puck for vision? Occasionally, flashes of light shoot out of it in the video.


These hand close-ups are just incredible. I really do think they are tendon-actuated. You can also see all sorts of pads on the inside of the hand.


I love the ridiculous T-pose it assumes while it waits for coffee.


The video says the coffee task was performed via an “end-to-end neural network” using 10 hours of training time. Unlike walking, the hands really feel like they have a human influence when it comes to their movement. When the robot picks up the K-cup via a pinch of its thumb and index finger or goes to push a button, it also closes the other three fingers into a fist. There isn’t a real reason to move the three fingers that aren’t doing anything, but that’s what a human would do, so presumably it’s in the training data. Closing the lid is interesting because I don’t think you could credit a single finger with the task—it’s just kind of a casual push using whatever fingers connect with the lid. The last clip of the video even shows the Figure 01 correcting a mistake—the K-cup doesn’t sit in the coffee maker correctly, and the robot recognizes this and can poke it around until it falls into place.

A lot of assembly line jobs are done at a station or sitting down, so the focus on hand dexterity makes sense. Boston Dynamics’ Atlas is way more impressive as a walking robot, but that’s also a multimillion-dollar research bot that will never see the market. Figure’s goal, according to the press release, is to “bring humanoid robots into commercial operations as soon as possible.” The company openly posts a “master plan” on its website, which reads “1) Build a feature-complete electromechanical humanoid. 2) Perform human-like manipulation. 3) Integrate humanoids into the labor force.” The robots are coming for our jobs.