Omeda Studios had a problem in its triple-A multiplayer online battle arena game Predecessor. And so it turned to AI-powered chat moderation from startup GGWP to curb toxic behavior.

And it worked. Omeda Studios and GGWP are sharing compelling results of how effective GGWP’s AI-based chat moderation tool has been in combating toxic behavior within the gaming community.

The impact has been profound, showcasing a significant reduction in offensive chat messages and repeat infractions. In an industry grappling with the challenge of toxic player behavior, Omeda Studios’ approach represents a pivotal step towards fostering a more inclusive and respectful gaming environment, the companies said.

“What’s cool is the Predecessor guys are very transparent with their communication with their community,” Fong said. “We have some customers who don’t want their community to know who is helping them. They want everything to be behind the scenes. It was really cool and refreshing.”

How it started

Dennis Fong, CEO of GGWP, said in an interview with GamesBeat that the Predecessor team independently wrote a blog post sharing the results that GGWP’s AI filtering had on the community. The two companies talked about the results and came up with a case study.

Robbie Singh, CEO of Omeda Studios, said in an interview with GamesBeat that it all started after the company took on Predecessor, a game created by fans who picked up the project after Epic Games decided to shut down its MOBA, Paragon. Omeda Studios built out the product and launched it in December 2022.

“When we launched Predecessor in early access, the surge of player numbers and sales was beyond our wildest expectations,” Singh said. “The unforeseen success highlighted the absence of tools that we needed to mitigate toxicity that we all know is pretty common in a lot of online games.”

Singh reached out to an investor who connected the team with Fong at GGWP. They integrated the GGWP solution and saw significant improvements to the game and community interactions.

“Everything was super positive,” Singh said. “It’s been instrumental in shouldering the burden of moderating the in-game experience, and it has allowed us to maintain that commitment to our community of building a really positive player experience.”

The basics of moderating bad behavior

Jon Sredl, senior manager of live production and game operations at Omeda Studios, said in an interview that moderation is often handled in one of two ways. The first is reactive: you wait for players to say bad things, then wait for other players to report them. Then you wait for a human to review what was said and decide, after the fact, whether it should be punished. That doesn’t stop anything from happening in the moment. The offending player often isn’t even told which message broke the rules.

“You don’t have a means of guiding players to understand what is acceptable and what’s not acceptable or what your standards are,” Sredl said.

The other method of moderation is simple word filters. You say players are not allowed to say this word. The company will censor any attempt to say it, using a big long list of slurs, hate speech and other bad things, Sredl said.

“That works because it stops things in the moment. But because it’s a static filter, you’re either constantly having to update it manually yourself, or you’re waiting for players to figure out what doesn’t actually get censored and the ways that they can substitute numbers in for letters,” Sredl said. “We were looking for a low overhead tool that could get us a huge amount of value in terms of saving human review time and was able to stop the act in the moment and keep things from escalating.”
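The weakness Sredl describes is easy to see in a small sketch. The Python below is an illustration only, not GGWP's or Omeda's code: the blocklist uses harmless placeholder words, and the substitution table is an assumed, incomplete mapping of common number-for-letter swaps.

```python
import re

# Hypothetical blocklist; harmless placeholder words stand in for real slurs.
BLOCKLIST = {"noob", "trash"}

# Assumed (and incomplete) table of digit/symbol substitutions players use.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def naive_filter(message: str) -> str:
    """Mask only words that match the blocklist exactly (case-insensitive)."""
    return " ".join(
        "*" * len(word) if word.lower() in BLOCKLIST else word
        for word in message.split()
    )


def normalized_filter(message: str) -> str:
    """Undo common substitutions and strip punctuation before matching."""
    masked = []
    for word in message.split():
        key = re.sub(r"[^a-z]", "", word.lower().translate(LEET_MAP))
        masked.append("*" * len(word) if key in BLOCKLIST else word)
    return " ".join(masked)


print(naive_filter("you are tr4sh"))       # the substitution slips through
print(normalized_filter("you are tr4sh"))  # the normalized form is caught
```

Even the normalized version is still a static list that someone has to maintain, which is the overhead the team wanted to avoid.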

The team started working with the AI-powered tool for moderating text chat. It required tweaking and tuning based on the game’s own standards.

“The great thing about a tool is that it’s a neural network,” he said. “You can train it about what we care about. That’s allowed us to continuously improve and find cases in which maybe we’re being a little bit harsh, or the model was being a little bit harsh on things that we didn’t care as much about. But there are other things that we care more about that the model was initially letting through. And then we work with the team. They make adjustments to the model. And we’ve continued to see progressively better results, progressively better communities, as we are shaping the model towards the combination of what our community expects, and the standards that we’re looking at.”

Reputation management

Sredl said that GGWP’s additional reputation management feature was also valuable. It could track the offenses committed by a given account. If a player continued to violate community standards, it could progressively escalate punishments and draw conclusions based on a reputation score, without requiring human moderation.

“For the players that have a history that’s far more positive, or at least neutral, we’re able to be more lenient, which allows us to both have quicker reaction to players that we think are higher risk or inducing negativity,” Sredl said. “Now if someone says something heinous, regardless of their reputation score, we’re going to shut that down. And we don’t give anyone free passes because they’ve been positive in the past. But it’s a huge part of allowing us to be a lot more flexible, in how strict we are with players, depending on what their history has indicated.”
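A minimal sketch of how reputation-aware escalation could work, under stated assumptions: the integer reputation scale, the thresholds, and the sanction names below are all hypothetical, and GGWP's actual scoring is not described in the interviews.

```python
from dataclasses import dataclass


@dataclass
class PlayerRecord:
    # Hypothetical scale: 0 is neutral, positive is good standing,
    # and each offense pushes the score lower.
    reputation: int = 0


def sanction_for(record: PlayerRecord, severe: bool) -> str:
    """Pick a sanction from reputation and severity, then update the record."""
    if severe:
        # Heinous messages are shut down regardless of reputation.
        action = "session_mute" if record.reputation >= 0 else "extended_mute"
    elif record.reputation >= 0:
        action = "warning"          # lenient with neutral or positive history
    elif record.reputation >= -2:
        action = "session_mute"     # quicker reaction for higher-risk players
    else:
        action = "extended_mute"    # repeat offenders get longer penalties
    record.reputation -= 2 if severe else 1
    return action


player = PlayerRecord()
history = [sanction_for(player, severe=False) for _ in range(4)]
print(history, player.reputation)
```

The point of the design is that the same message can draw a different response depending on who sent it, while the severe branch guarantees that a good history never buys a free pass.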

Shifting the paradigm in addressing toxicity

Toxic behavior in online gaming isn’t merely an inconvenience; it’s a pervasive issue affecting a staggering 81% of adults who play online multiplayer games, according to a report by the Anti-Defamation League. Harassment, often targeted at specific groups based on identity factors, poses a substantial threat to player retention and satisfaction. The sheer volume of daily messages makes manual moderation impractical. Something has to be done.

Traditionally, games leaned towards expulsion as a remedy, but research reveals that only around 5% of players consistently exhibit disruptive behavior. Recognizing this, Omeda Studios embraced a new paradigm, focusing on education to deter toxic behavior.

Omeda Studios’ chat moderation tool employs a comprehensive three-fold approach:

Live filtering: Offensive words and slurs are filtered out in real-time, serving as the initial line of defense against offensive messages.

Advanced detection models: Custom language models flag various types of toxic messages, including identity hate, threats, and verbal abuse, within seconds.

Behavior-based sanctions: Analyzing players’ historical patterns and current session behavior allows for tailored sanctions, from session mutes for heated moments to longer, more stringent penalties for repeat offenders.
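The three stages above can be sketched as a single pass over each chat message. This is an illustrative outline, not the production system: the keyword detector is a stand-in for the custom language models, and the word list, category names, and thresholds are assumptions.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

FILTERED_WORDS = {"trash"}  # placeholder for the real filter list


def live_filter(message: str) -> str:
    """Stage 1: mask filtered words before the message is displayed."""
    def mask(match: re.Match) -> str:
        word = match.group()
        return "*" * len(word) if word.lower() in FILTERED_WORDS else word
    return re.sub(r"\w+", mask, message)


def keyword_detector(message: str) -> Optional[str]:
    """Stage 2 stand-in: a real system would call a trained model here."""
    lowered = message.lower()
    if "kill you" in lowered:
        return "threat"
    if "uninstall" in lowered:
        return "verbal_abuse"
    return None


@dataclass
class ChatSession:
    prior_offenses: int = 0  # historical pattern across sessions
    session_flags: int = 0   # flags raised in the current session


def moderate(
    message: str,
    session: ChatSession,
    detector: Callable[[str], Optional[str]] = keyword_detector,
) -> Tuple[str, Optional[str], Optional[str]]:
    """Run all three stages; return (displayed text, category, sanction)."""
    shown = live_filter(message)
    category = detector(message)
    sanction = None
    if category is not None:
        session.session_flags += 1
        if session.prior_offenses >= 3:      # assumed repeat-offender cutoff
            sanction = "extended_penalty"
        elif session.session_flags >= 2:     # heated moment in this session
            sanction = "session_mute"
    return shown, category, sanction


session = ChatSession()
print(moderate("you are trash, uninstall", session))
print(moderate("uninstall now", session))
```

In this sketch a first flag in a clean session is recorded without a sanction, a second flag in the same session triggers a session mute, and a player with a long history goes straight to a longer penalty.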

The Outcome: Tangible reductions in toxicity

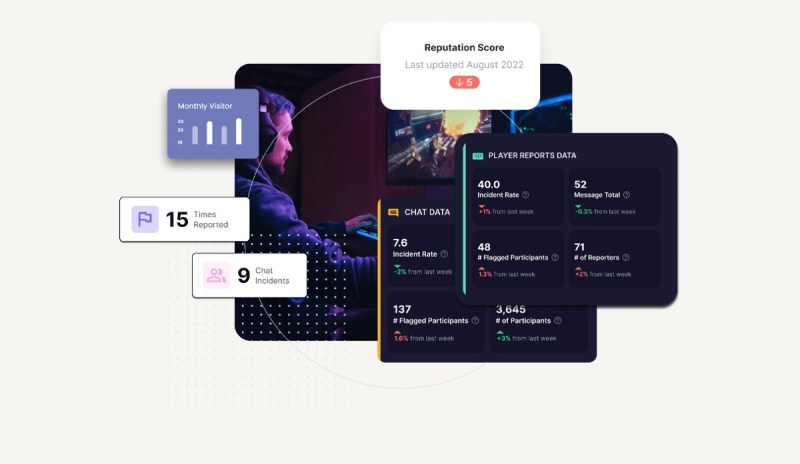

Omeda Studios has been using GGWP to collect data and train the model since January 2022. The company turned on the automatic sanction process in June 2023, and it has been cleaning up the chat ever since.

“It’s just a dramatically healthier conversation space than I am used to with other MOBAs,” Sredl said. “Players are noticing that there’s less toxicity than they’re used to in other games, and they are more likely to start talking.”

Since the integration of the moderation tool, Predecessor has witnessed a notable decrease in offensive chat messages, with a 35.6% reduction per game session.

Actively chatting players have sent 56.3% fewer offensive messages. Specific reductions include a 58% drop in identity-related incidents, a 55.8% decrease in violence-related incidents, and a 58.6% decrease in harassment incidents.

Fong said recidivism rates fell significantly. The tool doesn’t just reduce toxicity; it stops it from recurring. George Ng, cofounder at GGWP, said in an interview that warnings stop recurrences in 55% to 75% of cases. Players are unlikely to have another offense after a warning.

Simple warnings, such as session mutes, which were designed to de-escalate occasional heated moments, have been effective in deterring future misconduct in a majority of players. In fact, around 65% of players who received such warnings refrained from further, more serious chat infractions and avoided consistent toxic behavior warranting harsher sanctions.

Players who consistently exhibited toxicity and therefore ended up reaching more severe sanctions frequently incurred additional sanctions. Imposing these repeated sanctions on players with more long-term patterns of bad behavior has been instrumental in preventing others from falling victim to their toxicity.

Sredl said the company has saved a lot of money on customer service hours that would otherwise be spent dealing with toxic people.

“A tool like this scales with the audience literally and you get huge economies of scale,” he said. “You also have the intangible of happier players who come back. One of the easiest ways for someone to churn out of a brand new game is to walk in and see a really hostile community.”

Complexities of moderation

This is not to say it’s easy. Standards differ across countries; in places like Australia, for example, more colorful language is acceptable. And the model can draw conclusions from context. If two people are bantering in Discord, the odds are high they are friends. But if they’re using text chat, they’re a lot less likely to be associated and so they may be complete strangers.

In the instances where a human decides the AI made a mistake, they can rectify it and tell GGWP, which can tweak the model and make it better, Sredl said.

“Most of the community is extraordinarily happy that this exists,” he said. “It has done a lot of the job for us in shutting up the players who are destined to be horrifically toxic, removing them from our ecosystem from the chat perspective.”

Conclusion: Proactive solutions for a positive gaming environment

Sredl said there is still opportunity for manual intervention by humans.

“But because it’s a model-based system, it scales linearly with our player base,” Sredl said.

So far, the company hasn’t had to hire a huge department of customer service people. And it can focus its resources on making the game.

Omeda Studios’ results underscore the effectiveness of proactive moderation in reducing toxicity. The GGWP tool not only blocks offensive chat in real-time but also acts as a powerful deterrent, prompting players to reconsider before sending offensive messages.

This commitment to fostering a more inclusive and respectful gaming environment sets a new standard for the industry, showcasing what’s possible when developers prioritize player well-being.

As the gaming community continues to evolve, Omeda Studios and GGWP’s approach could serve as a blueprint for creating safer, more enjoyable online spaces for players worldwide.

The company is now gearing up for its PlayStation closed beta later this year. And it’s good that it doesn’t have to deal with a huge toxicity problem at the same time.
