At Google I/O 2023, the company had much to say about generative AI. For example, we saw Google’s chatbot, Bard, roll out to more countries and languages, and learned how the tool can now integrate with other Google services, such as Maps and Sheets. Google also announced a new “Help me write” tool in Gmail, which does exactly what you’d expect. One of the more controversial announcements, though, was Magic Editor, a new system that can create generative AI images using photos you’ve already captured.

Only a few days after I/O, we published an opinion piece about the problems we saw with Magic Editor. Essentially, the tool raises the question: What even is a photo? If a photograph is a captured moment of reality, isn’t manipulating that moment to reflect a wholly different reality a substantial ethical problem? In other words, if you take a photo of your family on a cloudy day and alter it with Magic Editor to add a sunny sky instead, doesn’t that change a core aspect of the memory that photo reflects? Where is the line for this manipulation?

Generative AI is changing how we capture memories. We need a way to know an image has been altered.

This is a significant dilemma we face in the developing AI age. Thankfully, Google appears to be taking the issue seriously. On stage, Google emphasized its focus on keeping generative AI tools safe and ethical. More recently, it’s incorporated guardrails into Magic Editor that prevent you from editing faces and people, for example.

The company has also, on numerous occasions, committed to watermarking media that includes generative AI elements so anyone can see if it’s wholly real or not. A few weeks ago, we went looking for this watermark — but we couldn’t find it. This led us to message Google directly.

What we asked Google and how it responded


At the beginning of November, we contacted a Google PR representative working within the Google Photos division. We asked them for explicit instructions on finding the watermark associated with photos manipulated using Magic Editor, Magic Eraser, and similar Google tools.

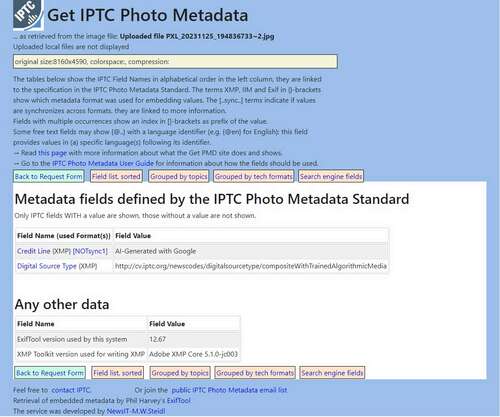

In a reply only a few hours later, the PR rep told us that the metadata is based on standards from the International Press Telecommunications Council (IPTC). To see this information, you need to use a web-based tool provided by the IPTC. The free service requires you to provide an image’s URL, upload a photo, or provide a website’s URL to pull a list of images located there. We tried this by uploading a photo captured by a Pixel 8 Pro and then manipulated by Magic Editor. Sure enough, we could see that information listed.

For the record, we also uploaded the original image and found it lacked the “AI-Generated with Google” tag. This shows the IPTC metadata can distinguish a photo that incorporates Magic Editor-style AI-generated elements from one with just AI-based editing tweaks, such as those made by the Pixel 8 Pro for clarity, color, focus, etc.
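For readers who want to run the same kind of check locally, here is a minimal sketch in Python. It is not Google's or the IPTC's tool. It assumes the marker lives in an XMP metadata packet embedded in the image file, and it looks for two things: the “AI-Generated with Google” text we saw in the IPTC tool, and the two IPTC Digital Source Type values that denote algorithmically generated or composited media. Which fields Google actually writes is an assumption on our part, and the function name `find_ai_markers` is hypothetical.

```python
import re

# Markers to look for. The first string is the tag text reported by the
# IPTC tool in this article; the two URIs are IPTC NewsCodes
# "Digital Source Type" values for AI-generated and AI-composited media.
# Whether a given Google Photos export contains any of them is an assumption.
AI_MARKERS = (
    "AI-Generated with Google",
    "http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia",
    "http://cv.iptc.org/newscodes/digitalsourcetype/compositeWithTrainedAlgorithmicMedia",
)

# XMP metadata is stored as an XML packet wrapped in <x:xmpmeta> ... </x:xmpmeta>.
XMP_PACKET = re.compile(rb"<x:xmpmeta.*?</x:xmpmeta>", re.DOTALL)


def find_ai_markers(data: bytes) -> list[str]:
    """Return the AI markers found in any XMP packet embedded in the image bytes."""
    found = []
    for packet in XMP_PACKET.findall(data):
        text = packet.decode("utf-8", errors="replace")
        for marker in AI_MARKERS:
            if marker in text and marker not in found:
                found.append(marker)
    return found


if __name__ == "__main__":
    import sys

    # Usage: python check_ai_metadata.py photo.jpg
    with open(sys.argv[1], "rb") as handle:
        markers = find_ai_markers(handle.read())
    print(markers if markers else "No AI markers found in XMP metadata")
```

An empty result only means none of these markers were present in the file's XMP data. It does not prove an image is unedited.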

While this system works, it’s not very convenient. How many people know this site even exists? Shouldn’t this information be clearly listed inside Google Photos itself? Why can’t I just right-click on a photo in Chrome and see this information? Keep these questions in mind as we proceed to the second question we asked Google.

Google does not have a dead-simple system for watermarking AI images or checking the watermark of an existing image.

In our original email, we also asked Google about its policies related to generative AI images from its partners. For example, will an Android manufacturer need to abide by specific rules for its own AI systems to continue using Google Play Services? Will these partners also be required to provide a similar watermark for AI media? Rumors suggest Samsung has AI systems in the works for the Galaxy S24 series, so this will become important quite soon.

Google’s response to this question was to link us to its AI-generated content policy and a blog post from October about AI. You can feel free to read both articles, but I’ll save you some time: There is nothing on either page about mandating watermarks for AI-generated imagery and AI-altered photography created by Google’s partners. However, Google does have a different page outlining its policies on deceptive behavior, which includes a section about manipulated media (note: not specifically AI-generated). Here’s a relevant bit:

Apps that handle or alter media, beyond conventional and editorially acceptable adjustments for clarity or quality, must prominently disclose or watermark altered media when it may not be clear to the average person that the media has been altered.

The interesting bit there is “…prominently disclose or watermark altered media.” I’m not a lawyer, but it would seem to me that Google is violating its own policy by burying its watermark behind the IPTC Photo Metadata tool.

Unanswered follow-up

After receiving these answers from Google, we issued a follow-up email with a new question. Here it is, verbatim:

Will there be updates to existing Google products to allow users to see the relevant AI metadata without needing to visit a third-party site? This seems like a lot of extra steps to find out if an image is AI-generated, and I expect most users won’t do it, which will give more room for the spread of misinformation. As an example of what I mean, will there eventually be relevant metadata shown in Google Photos directly? A right-click within Chrome?

The Google PR rep did not reply to this email. We followed up with a reminder but still heard nothing back. Since then, we haven’t seen any new information about Google’s watermarking policies for AI content, nor any updates to Google services that would make it easier to see the company’s own watermark.

Generative AI images: What we think Google should do


The way we see it, there are three things Google needs to change here.

The first is obvious. Google needs to make it easier for the average person to find out whether or not an image has been created or manipulated using generative AI. There shouldn’t be any need for a third-party tool, especially one like the IPTC’s that so few people would know about. Furthermore, this watermark needs to be secure. I have no expertise in the matter, but it would seem that it wouldn’t be too difficult for someone to wipe the “AI-Generated with Google” tag from a piece of media to fake out the verification. This could, theoretically, lead to a spread of misinformation.

Google needs a strict watermarking policy for itself and its partners, and it needs to enforce those policies rigidly.

Secondly, Google must force its partners to follow these same watermarking policies. With how massive Google is in multiple industries, it is uniquely situated to police thousands of other companies and millions of developers by simply mandating they follow watermarking rules.

Finally, Google must enforce these hypothetical policies with an iron fist. Considering it has no publicly available mandate specifically related to AI-generated media watermarking — and it is very likely in violation of its own existing policies for manipulated media — it would seem we have a Wild West situation on our hands at the moment. If Google is lenient now, it will be much harder to be strict and rein everyone in later.

Google can start right now with Android and Chrome


Because of how prominent and influential Google is, it has a responsibility to do whatever it can to prevent AI-generated media from getting out of control. As the saying goes, getting the toothpaste back into the tube is difficult. There are no better places to start with preventative measures than Android and Chrome, the world’s largest mobile operating system and the world’s most popular internet browser, respectively. With over three billion active Android devices globally and a similar number of Chrome users, no other company holds as much sway as Google in this regard.

We are not the only ones who think this, either. Judd Heape, a VP at Qualcomm, had this to say when we asked about Truepic, a Qualcomm partner company working to bring transparency to the world of AI:

Qualcomm as a whole isn’t saying ‘OEMs, you must [use Truepic]’, but this is something that will protect the end-user. And you know who’d be good at doing this is Google. As a part of Android, they’ve mandated things. They can mandate in their testing. They’ve mandated things in the past for teens…doing beautification of photos, you can turn that feature off. Something like that can be done here too with C2PA. But let’s see, I think in the next few years we’ll see how that plays out.

We agree with Mr. Heape’s thoughts here, but we feel the idea of waiting for “the next few years” is not at all feasible. Things are moving way too fast for that. Just look at ChatGPT — it is only one year old. In one year, it has dramatically altered not just the tech industry but the entire world. We don’t have the luxury of time to suss out what to do about generative AI — we need action now.

Still, we are encouraged by Google’s start here. Even the small gesture of adding a watermark to Magic Editor/Eraser photos is more than a lot of other companies would do without regulations forcing them to. But a mostly hidden watermark isn’t nearly enough, and Google can’t sit idle if its own partners don’t follow similar rules.
