Boston-based Enkrypt AI, a startup that offers a control layer for the safe use of generative AI, today announced it has raised $2.35 million in a seed round of funding, led by Boldcap.

While the amount isn’t as massive as AI companies raise these days, the product offered by Enkrypt is quite interesting as it helps ensure private, secure and compliant deployment of generative AI models. The company, founded by Yale PhDs Sahil Agarwal and Prashanth Harshangi, claims that its tech can handle these roadblocks with ease, accelerating gen AI adoption for enterprises by up to 10 times.

“We’re championing a paradigm where trust and innovation coalesce, enabling the deployment of AI technologies with the confidence that they are as secure and reliable as they are revolutionary,” Harshangi said in a statement.

Along with Boldcap, Berkeley SkyDeck, Kubera VC, Arka VC, Veredas Partners, Builders Fund and multiple other angels in the AI, healthcare and enterprise space also participated in the seed round.

VB Event

The AI Impact Tour – NYC

We’ll be in New York on February 29 in partnership with Microsoft to discuss how to balance risks and rewards of AI applications. Request an invite to the exclusive event below.

What does Enkrypt AI has on offer?

The adoption of generative AI is surging, with almost every company looking at the technology to streamline its workflows and drive efficiencies. However, when it comes to fine-tuning and implementing foundation models across applications, multiple safety hurdles tag along. One has to make sure that the data remains private at all stages, there are no security threats and the model stays reliable and compliant (with internal and external regulations) even after deployment.

Most companies today tackle these hurdles manually with the help of internal teams or third-party consultants. The approach does the job but takes a lot of time, delaying AI projects by as much as two years. This can easily translate into missed opportunities for the business.

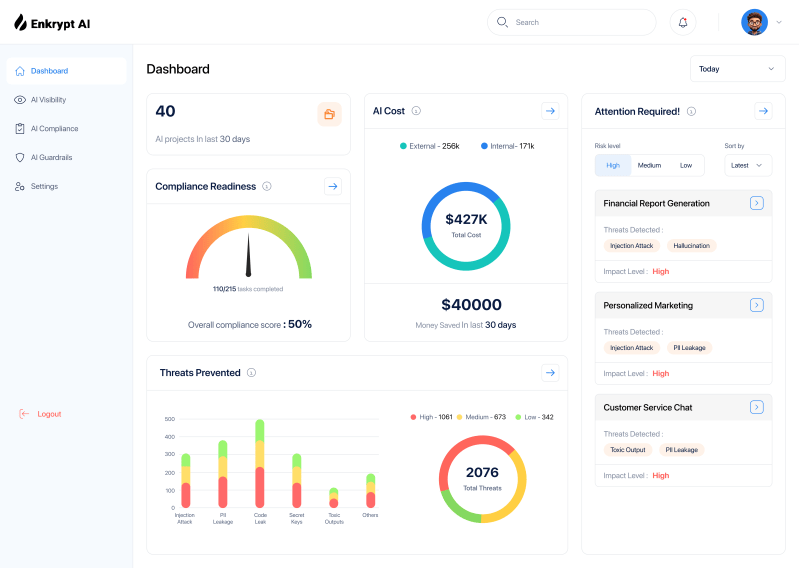

Founded in 2023, Enkrypt is addressing this gap with Sentry, a comprehensive all-in-one solution that delivers visibility and oversight of LLM usage and performance across business functions, protects sensitive information while guarding against security threats, and manages compliance with automated monitoring and strict access controls.

“Sentry is a secure enterprise gateway deployed between users and models, that enables model access controls, data privacy and model security. The gateway routes any LLM interaction (from external models, or internally hosted models) through our proprietary guardrails for data privacy and LLM security, as well as controls and tasks for regulations to ensure no breach occurs,” Agarwal, who is the CEO of the company, told VentureBeat.

To ensure security, for instance, Sentry guardrails, which are driven by the company’s proprietary models, can prevent prompt injection attacks that can hijack apps or prevent jailbreaking. For privacy, it can sanitize model data and even anonymize sensitive personal information. In other cases, the solution can test generative AI APIs for corner cases and run continuous moderation to detect and filter out toxic topics and harmful and off-topic content.

“Chief Information Security Officers (CISOs) and product leaders get complete visibility of all the generative AI projects, from executives to individual developers. This granularity enables the enterprise to use our proprietary guardrails to make the use of LLMs safe, secure and trustworthy. This ultimately results in reduced regulatory, financial and reputation risk,” the CEO added.

Significant reduction in generative AI vulnerabilities

While the company is still at the pre-revenue stage with no major growth figures to share, it does note that the Sentry technology is being tested by mid to large-sized enterprises in regulated industries such as finance and life sciences.

In the case of one Fortune 500 enterprise using Meta’s Llama2-7B, Sentry found that the model was subject to jailbreak vulnerabilities 6% of the time and brought that down ten-fold to 0.6%. This enabled faster adoption of LLMs – in weeks instead of years – for even more use cases across departments at the company.

“The specific request (from enterprises) was to get a comprehensive solution, instead of multiple point-solutions for sensitive data leak, PII leaks, prompt-injection attacks, hallucinations, manage different compliance requirements and finally make the use-cases ethical and responsible,” Agarwal said while emphasizing the comprehensive nature of the solution makes it unique in the market.

Now, as the next step, Enkrypt plans to build this solution and take it to more enterprises, demonstrating its capability and viability across different models and environments. Doing this successfully will be the defining point for the startup as safety has become a necessity, rather than a best practice, for every company developing or deploying generative AI.

“We are currently working with design partners to mature the product. Our biggest competitor is Protect AI. With their recent acquisition of Laiyer AI, they plan to build a comprehensive security and compliance product as well,” the CEO said.

Earlier this month, the U.S. government’s NIST standards body also established an AI safety consortium with over 200 firms to “focus on establishing the foundations for a new measurement science in AI safety.”

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Discover our Briefings.