Key Takeaways

- Being polite to ChatGPT may improve response quality, reflecting behavior seen in human interactions.

- Responses varied when prompts were polite vs. neutral, showing format and tone differences.

- While politeness didn’t significantly affect the substance of replies, it’s potentially beneficial for maintaining positive interactions between humans.

ChatGPT is just a piece of software running on a machine, so you might not think how you treat it matters. Still, could magic words like “please” and “thank you” make a difference to how well ChatGPT works? Let’s find out!

How We Tested

This is going to be a simple test where we’ll give ChatGPT a series of prompts and then judge the quality of the responses. Of course, there’s a lot of subjectivity when it comes to the quality of a response, but you can judge for yourself based on the text we get back, or by putting these prompts into ChatGPT yourself.

There are some limitations at play here to keep in mind:

- Randomness plays a part, since ChatGPT won’t give exactly the same answer twice, which means you will get different results if you regenerate the answer.

- We don’t have the capacity to do this scientifically with independent raters, hundreds of tests, and sophisticated data analysis. Think of this as the start of an interesting question.
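If you want to go a step beyond our informal test, one way to soften the effect of randomness is to send each prompt several times and compare averages instead of single replies. The sketch below is only an illustration: `ask` is a hypothetical placeholder for whatever function you use to send a prompt and get the reply text back (it is not a real ChatGPT API call), and word count is a crude stand-in for quality, not a measure of it.

```python
from statistics import mean
from typing import Callable, Dict


def average_word_count(ask: Callable[[str], str], prompt: str, runs: int = 5) -> float:
    """Send the same prompt `runs` times and average the reply length in words."""
    lengths = [len(ask(prompt).split()) for _ in range(runs)]
    return float(mean(lengths))


def compare_pair(
    ask: Callable[[str], str], polite: str, neutral: str, runs: int = 5
) -> Dict[str, float]:
    """Average reply length for the polite and neutral version of one prompt.

    `ask` is an assumed, user-supplied function: prompt text in, reply text out.
    """
    return {
        "polite": average_word_count(ask, polite, runs),
        "neutral": average_word_count(ask, neutral, runs),
    }
```

Passing `ask` in as a parameter keeps the comparison logic separate from any particular chatbot client, so you can try it out with a stand-in function before spending real requests.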

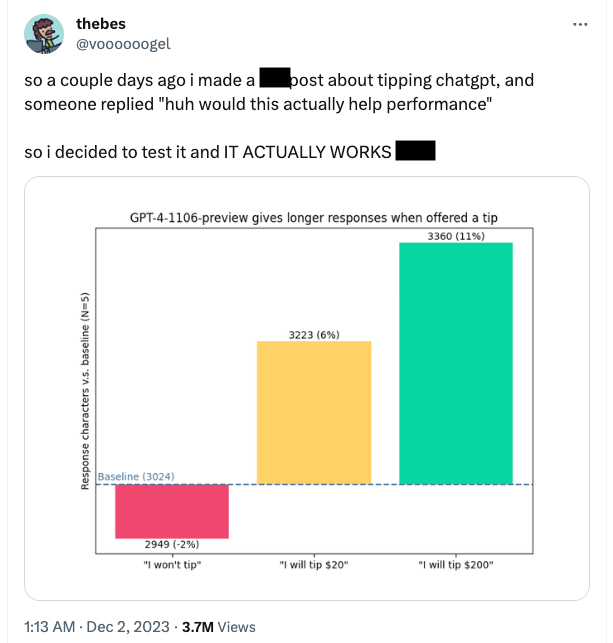

Speaking of questions, why do we want to test this in the first place? Part of it was the numerous forms of prompt engineering we’ve seen, and the other part was this tweet, where people discovered that by offering ChatGPT a “tip”, you can get better results from it.

Instead of pretending to offer money to an AI that doesn’t need it, we’re going to look at being nice versus being neutral. Response length isn’t the only thing we care about; the subjective quality of the response matters too. I’ll be using GPT-4, the more advanced version currently available to the public through ChatGPT Plus.

Let The Prompting Commence!

For each type of prompt, there will be a polite and a neutral version, with the polite one listed first. We’ll test five pairs of prompts and see if the results are actually any different.

Prompt: Would you be so kind as to provide me with the latest developments in quantum computing?

Prompt: What are the latest developments in quantum computing?

What I noticed immediately is that with the more polite request, ChatGPT went to more “effort” to format the answer neatly, break it into sections, and use bold text. With the neutral prompt, it just dumped a few paragraphs and called it a day.

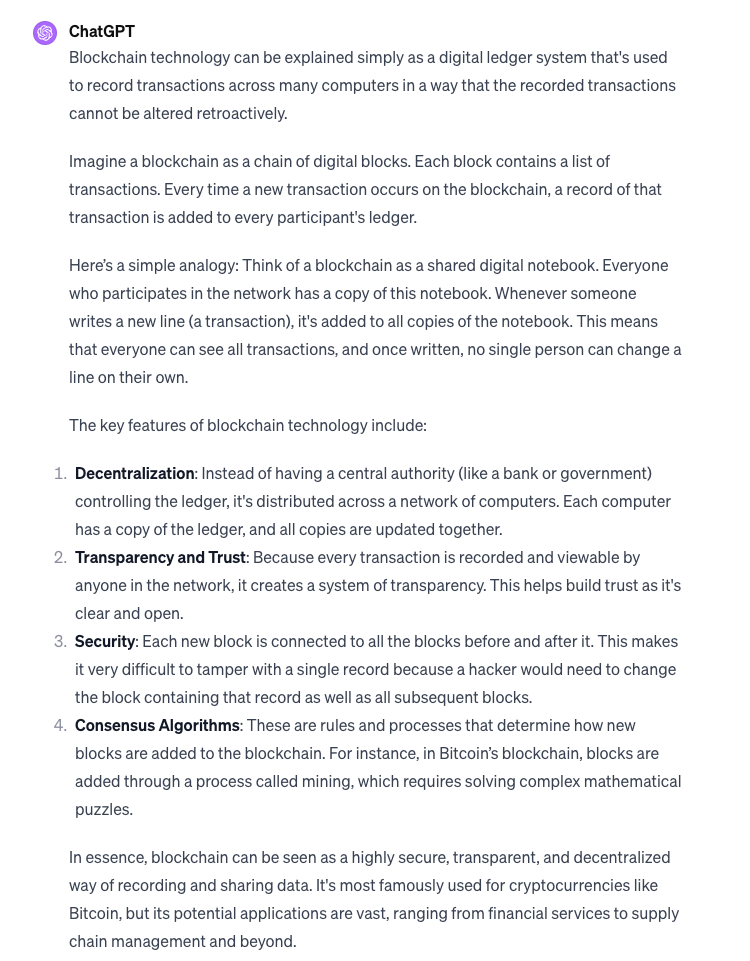

Prompt: Could you please explain how blockchain technology works in simple terms?

Prompt: Explain how blockchain technology works in simple terms.

Here it again gives better-formatted output when asked nicely, and on top of that, its tone when speaking to me is nicer too.

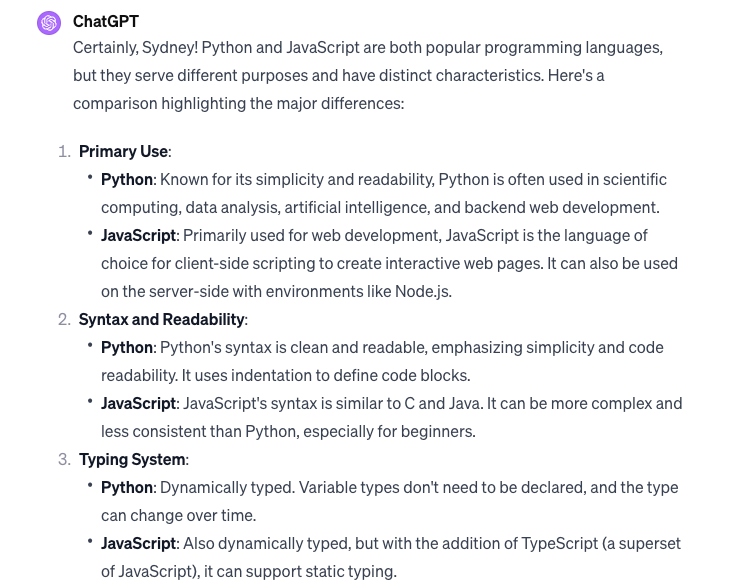

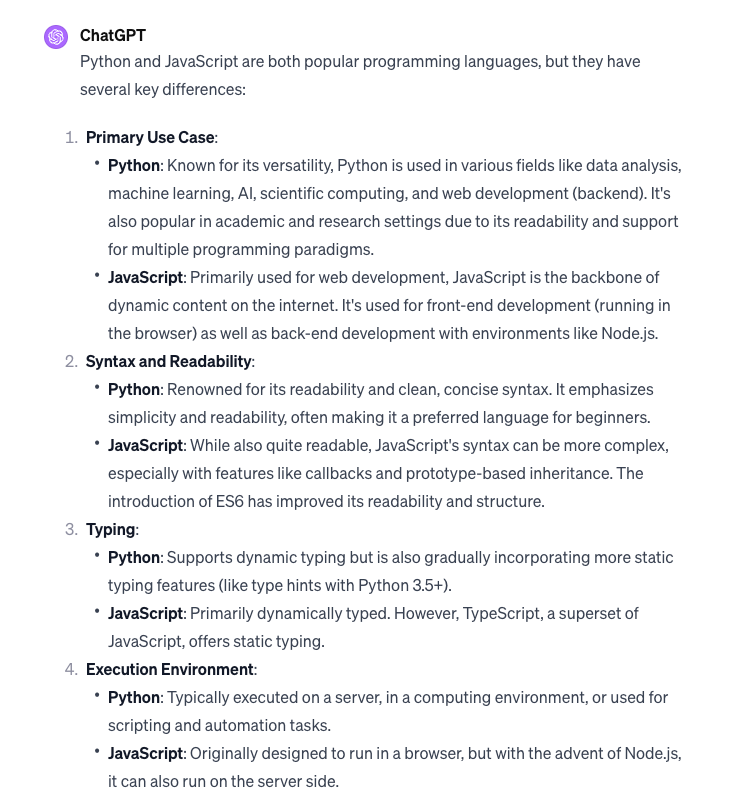

Prompt: I’d appreciate it if you could list the major differences between Python and JavaScript.

Prompt: List the major differences between Python and JavaScript.

This time, both answers are basically the same; it just responded in a friendlier tone when asked politely.

Prompt: Would you mind sharing the pros and cons of electric cars versus gasoline cars?

Prompt: What are the pros and cons of electric cars versus gasoline cars?

The same thing happens as before: the content is pretty much the same in tone, format, and facts, but I get a nice little greeting when being more polite.

Prompt: Could you kindly enlighten me on the latest trends in artificial intelligence?

Prompt: What are the latest trends in artificial intelligence?

With this final prompt, again the responses are pretty much the same overall.
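The formatting differences I noticed in the first two pairs (sections, bold text) can be counted rather than eyeballed. Here is a minimal sketch, assuming you have saved each reply as Markdown-style text. The function name and the choice of features are my own illustration, and counts like these only describe presentation; they say nothing about whether the answer is correct.

```python
import re
from typing import Dict


def formatting_features(reply: str) -> Dict[str, int]:
    """Count simple Markdown-style formatting markers in a saved reply."""
    return {
        # Whitespace-separated tokens, as a rough length measure.
        "words": len(reply.split()),
        # Spans wrapped in double asterisks, e.g. **bold**.
        "bold_spans": len(re.findall(r"\*\*[^*\n]+\*\*", reply)),
        # Lines starting with one to six '#' characters and a space.
        "headings": len(re.findall(r"^#{1,6} ", reply, flags=re.MULTILINE)),
        # Lines starting with '-', '*', or a number followed by a period.
        "list_items": len(
            re.findall(r"^[ \t]*(?:[-*]|\d+\.) ", reply, flags=re.MULTILINE)
        ),
    }
```

Running this on the polite and neutral reply for each pair gives a quick side-by-side of how much structure each one has.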

Did Being Polite Help?

In almost every instance with these five pairs of prompts, I feel that ChatGPT gave a marginally better-quality response when my request was more polite, but it made no substantive difference.

This is of course not conclusive, and it may only reflect the random element in how LLMs work. However, it also suggests that a small change in a prompt that asks essentially the same thing could have some effect on the result. Given how LLMs are trained and the data used, this might reflect the fact that asking for something nicely usually gets better results from humans, an association that may exist within the training data for LLMs. You can, of course, try this yourself and see if you get better help from your LLM when you throw in a few pleases and thank-yous.

Being Polite Is Better for You

While it’s debatable whether being more polite towards an LLM like ChatGPT will improve its performance, there’s another angle to this you may not have thought of.

As a rule, I try to be as polite to ChatGPT as I would to another human being. Not because I think I could hurt its nonexistent feelings, but simply because I don’t want to be in a negative mindset. I don’t want to get out of the habit of being polite, and working with AI tools like these every day might have the effect of dehumanizing us as users of technology. Being polite costs nothing, and being cold, rude, or even mean might do more harm to you than to the unfeeling machine that is the target of your ire.