DataGPT, a California-based startup working to simplify how enterprises consume insights from their data, came out of stealth today with the launch of its new AI Analyst, a conversational chatbot that helps teams understand the what and why of their datasets by communicating in natural language.

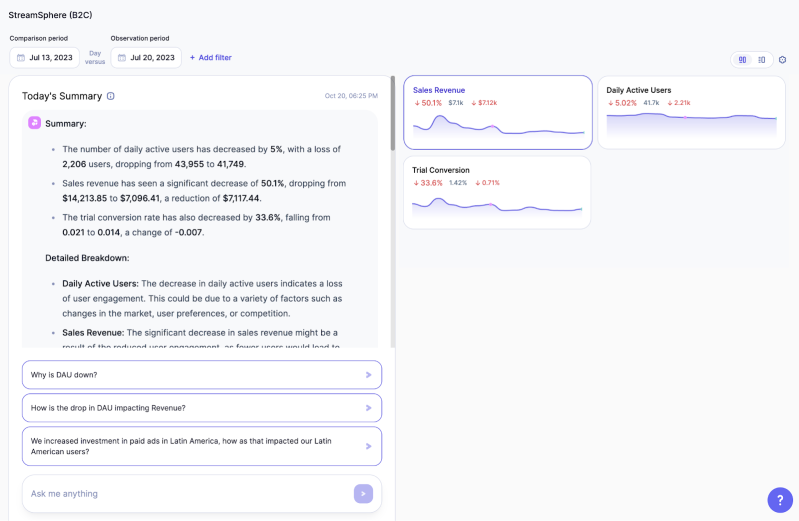

Available starting today, the AI tool combines the creative, comprehension-rich side of a self-hosted large language model with the logic and reasoning of DataGPT’s proprietary analytics engine, executing millions of queries and calculations to determine the most relevant and impactful insights. These insights range from how a given factor is affecting business revenue to why it happened in the first place.

“We are committed to empowering anyone, in any company, to talk directly to their data,” Arina Curtis, CEO and co-founder of DataGPT, said in a statement. “Our DataGPT software, rooted in conversational AI data analysis, not only delivers instant, analyst-grade results but provides a seamless, user-friendly experience that bridges the gap between rigid reports and informed decision making.”

However, it will be interesting to see how DataGPT stands out in the market. Over the past year, a number of data ecosystem players, including data platform vendors and business intelligence (BI) companies, have made generative AI plays of their own to make insights easier for users to consume. Most data storage, connection, warehouse/lakehouse and processing/analysis companies are now moving to let customers talk with their data using generative AI.

How does the DataGPT AI analyst work?

Founded a little over two years ago, DataGPT targets the static nature of traditional BI tools, where one has to manually dive into custom dashboards to get answers to evolving business questions.

“Our first customer, Mino Games, dedicated substantial resources to building an ETL process, creating numerous custom dashboards and hiring a team of analysts,” Curtis told VentureBeat. “Despite exploring all available analytics solutions, they struggled to obtain prompt, clear answers to essential business questions. DataGPT enabled them — and all their clients — to access in-depth data insights more efficiently and effectively.”

At its core, the solution only requires a company to set up a use case: a DataGPT page configured for a specific area of the business or a group of pre-defined KPIs. Once the page is ready, end users get two elements: the AI Analyst and the Data Navigator.

The former is the chatbot experience, where users type questions in natural language to get immediate access to insights. The latter is a more traditional interface, where they get visualizations showing the performance of key metrics and can manually drill down through any combination of factors.

For the conversational experience, Curtis says, three main layers work on the backend: a data store, a core analytics engine and an analyst agent powered by a self-hosted large language model.

When a customer asks the chatbot a business question (e.g. “Why has revenue increased in North America?”), the embedding model in the core analytics engine finds the closest match in the data store schema (“Why did <monthly recurring revenue> in <countries> [‘United States’, ‘Canada’, ‘Mexico’] increase?”), while the self-hosted LLM takes the question and creates a task plan.

The Data API algorithm of the analytics engine then executes each task in the plan, running comprehensive analysis across vast data sets with capabilities that, according to the company, go beyond traditional SQL/Python functions. The results of the analysis are delivered to the user in a conversational format.
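To make that flow concrete, here is a minimal Python sketch of the three steps: matching a question to a schema entry, producing a task plan and executing each task. It is an illustration under stated assumptions, not DataGPT's implementation. The schema entries, the region lookup and every function name are hypothetical, a bag-of-words count vector stands in for the embedding model, and a hard-coded list stands in for the LLM's task plan.

```python
import math
import re
from collections import Counter

# Hypothetical schema: metric names mapped to short descriptions.
SCHEMA_METRICS = {
    "monthly_recurring_revenue": "monthly recurring revenue from subscriptions",
    "daily_active_users": "daily active users count",
    "churn_rate": "share of customers who cancel",
}
# Hypothetical region lookup used to expand "North America" into countries.
REGIONS = {"north america": ["United States", "Canada", "Mexico"]}


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def match_schema(question):
    """Pick the metric whose description is closest to the question."""
    q = embed(question)
    metric = max(SCHEMA_METRICS, key=lambda m: cosine(q, embed(SCHEMA_METRICS[m])))
    countries = next((c for r, c in REGIONS.items() if r in question.lower()), [])
    return {"metric": metric, "countries": countries}


def build_plan(question):
    """Stand-in for the LLM planner: a fixed, hard-coded task list."""
    return ["key_metric_analysis", "segment_impact_analysis"]


def execute(task, match):
    """Stand-in for the analytics engine: echoes what it would analyze."""
    return f"{task} on {match['metric']} for {', '.join(match['countries'])}"


def answer(question):
    """Run the full flow: match the schema, plan, then execute each task."""
    match = match_schema(question)
    return [execute(task, match) for task in build_plan(question)]


if __name__ == "__main__":
    for line in answer("Why has revenue increased in North America?"):
        print(line)
```

A production system would swap each stand-in for a real component: a learned embedding model for the matching step, an LLM for planning and a query engine for execution.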

“The core analytics engine does all analysis: computes the impact, employs statistical tests, computes confidence intervals, etc. It runs thousands of queries in the lightning cache (of the data store) and gets results back. Meanwhile, the self-hosted LLM humanizes the response and sends it back to the chatbot interface,” Curtis explained.
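As a rough illustration of the kind of computation Curtis describes, the Python sketch below attributes a change in a metric to individual segments and puts a confidence interval around an average change. The function names, the input shapes and the choice of a normal-approximation interval are all assumptions made for this example; the article does not say which statistical methods DataGPT's engine actually uses.

```python
import math
from statistics import NormalDist, mean, stdev


def segment_impact(previous, current):
    """Attribute the total change in a metric to each segment.

    `previous` and `current` map segment name -> metric value for two periods.
    Returns each segment's absolute change and its share of the total change.
    """
    total_change = sum(current.values()) - sum(previous.values())
    impact = {}
    for segment in sorted(set(previous) | set(current)):
        delta = current.get(segment, 0) - previous.get(segment, 0)
        # The share is undefined when the total did not move at all.
        share = delta / total_change if total_change else None
        impact[segment] = {"delta": delta, "share": share}
    return impact


def mean_change_ci(before, after, level=0.95):
    """Normal-approximation confidence interval for the mean paired change.

    Assumes `before` and `after` are paired observations (e.g. the same days
    in two periods). For small samples a t-distribution would be more apt.
    """
    if len(before) != len(after) or len(before) < 2:
        raise ValueError("need two paired series of equal length (at least 2 points)")
    diffs = [a - b for b, a in zip(before, after)]
    z = NormalDist().inv_cdf(0.5 + level / 2)
    margin = z * stdev(diffs) / math.sqrt(len(diffs))
    center = mean(diffs)
    return center - margin, center + margin


if __name__ == "__main__":
    print(segment_impact({"US": 100, "CA": 50}, {"US": 130, "CA": 40}))
    print(mean_change_ci([10, 12, 11, 13], [12, 13, 13, 15]))
```

A share above 1 or below 0 simply means segments moved in opposite directions, which is the kind of detail a conversational answer would need to explain in words.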

“Our lightweight yet powerful LLM is cost-efficient, meaning we don’t need an expensive GPU cluster to achieve rapid response times. This nimbleness gives us a competitive edge. This results in fast response speeds. We’ve invested time and resources in creating an extensive in-house training set tailored to our model. This ensures not only unparalleled accuracy but also robustness against any architectural changes,” she added.

Benefits for enterprises

While Curtis did not share how many companies are working with DataGPT, the company’s website suggests multiple enterprises are embracing the technology to their benefit, including Mino, Plex, Product Hunt, Dimensionals and Wombo.

These companies have used the chatbot to shorten their time to insight and, ultimately, to make critical business decisions more quickly. It also frees up analysts’ time for more pressing tasks.

According to the CEO, DataGPT’s lightning cache database is 90 times faster than traditional databases. It can run queries 600 times faster than standard business intelligence tools while reducing the cost of analysis by a factor of 15.

“These newly attainable insights can unlock up to 15% revenue growth for businesses and free up nearly 500 hours each quarter for busy data teams, allowing them to focus on higher-yield projects. DataGPT plans to open source its database in the near future,” she added.

Plan ahead

So far, DataGPT has raised $10 million across pre-seed and seed rounds and says it has built the product to cover 80% of data-related questions, including those related to key metric analysis, key drivers analysis, segment impact analysis and trend analysis. Moving ahead, the company plans to build on this experience and add more analytical capabilities to cover as much ground as possible, including cohort analysis, forecasting and predictive analysis.

However, the CEO did not share when exactly these capabilities will roll out. That said, the expansion of analytical capabilities might just give DataGPT an edge in a market where every data ecosystem vendor is bringing or looking to bring generative AI into the loop.

In recent months, we have seen companies like Databricks, Dremio, Kinetica, ThoughtSpot, Stardog, Snowflake and many others invest in LLM-based tooling — either via in-house models or integrations — to improve access to data. Almost every vendor has given the same message of making sure all enterprise users, regardless of technical expertise, are able to access and drive value from data.

DataGPT, for its part, claims to differentiate itself through the strength of its analytics engine.

As Curtis put it in a statement to VentureBeat: “Popular solutions fall into two main categories: LLMs with a simple data interface (e.g. LLM+Databricks) or BI solutions integrating generative AI. The first category handles limited data volumes and source integrations. They also lack depth of analysis and awareness of the business context for the data. Meanwhile, the second category leverages generative AI to modestly accelerate the traditional BI workflow to create the same kind of narrow reports and dashboard outputs. DataGPT delivers a new data experience…The LLM is the right brain. It’s really good at contextual comprehension. But you also need the left brain the Data API — our algo for logic and conclusions. Many platforms falter when it comes to combining the logical, ‘left-brained’ tasks of deep data analysis and interpretation with the LLM.”
