
Chet Kapoor, CEO of DataStax, a company that offers a cloud database based on open source Apache Cassandra, boasted at a conference in Silicon Valley yesterday that Cassandra is the “best f*cking database for Gen AI.”

Kapoor’s remarks, made while speaking on stage before 700 attendees at the Linux Foundation event Dev.AI, come at a time when there’s a full-on race by new startups and incumbents to grab the mantle of leadership in the fast-growing area of Gen AI. It is also a time when many enterprise brands using the technology are deciding which technology providers they will use.

While much attention has gone to the competition between large language model providers like OpenAI, Anthropic, Google (Gemini) and Meta (Llama), another highly competitive area is the databases that end-user companies will use to store and retrieve data for LLM applications.

During his keynote, Kapoor gave several reasons why DataStax’s Cassandra database is doing well against others. Cassandra is already one of the most reliable operational databases and is widely used by enterprise companies, it boasts some early cases of customers actually deploying generative AI at scale, and its technical prowess in key areas relevant to generative AI continues to give it a leg up against key rivals like MongoDB and Pinecone, Kapoor said.

It’s also worth noting that DataStax is considering going public soon, and Kapoor has an interest in making some noise. In June of last year, DataStax raised $115 million on a $1.6 billion valuation. The company has not released any financial data, but Kapoor acknowledged in an interview that DataStax is on the shortlist of companies that banks would want to take public next year.

Here are the reasons behind Kapoor’s bullishness:

Reason 1: Cassandra is already one of the most widely used and reliable operational databases

Kapoor’s comments also come at a time when large cloud companies like Microsoft and Amazon have been asserting that their cloud offerings, which include integrations with their own databases, are best positioned to perform generative AI tasks. They have been encouraging users to consolidate on their platforms, and aggressively removing obstacles that have prevented users from doing so in the past, including the complex extract, transform and load (ETL) work that has kept data siloed.

However, those cloud companies have offered users too many individual databases over the past decade, in an effort to offer a specialized solution for each use case a customer has, Kapoor said. “There’s one to go to the bathroom in the morning,” Kapoor joked, “and then one for the afternoon, and one for the evening.” But generative AI has caught those cloud companies by surprise: Enterprise CIOs now want their data integrated into a single database so that Gen AI apps can query it more easily and efficiently, Kapoor said.

And here Cassandra has an advantage because it is one of the more popular “operational” databases, while most of Microsoft’s and Amazon’s databases have been focused on analytical workloads, primarily for business intelligence applications. They can be used for operational workloads in generative AI applications, but doing so would become very expensive because they aren’t optimized for that.

DataStax has spent a lot of time focused on price for performance, for example, Kapoor and Chief Product Officer Ed Anuff explained in a follow-up interview with VentureBeat after Kapoor’s remarks. As a result, Cassandra is the most popular database among Fortune 500 companies that deliver data at scale. Cassandra counts 90 percent of those companies as customers, Anuff said. For example, Netflix uses it for its movie metadata, FedEx uses it for tracking packages, Apple uses it for its iTunes, iMessage and iCloud app data, and retailers like Home Depot use it for their websites.

As those big companies build new AI apps, they’re comfortable with the track record they have with Cassandra, and so are likely to continue to consolidate around that, Anuff said. Moreover, Microsoft and Amazon have realized they need to offer choice to customers. Amazon, for example, offers a competitive operational database, DynamoDB, but it also offers users the ability to easily use Cassandra within its cloud constellation. In that way, Cassandra also offers customers a way to avoid lock-in with a particular cloud vendor, Anuff said.

Reason 2: DataStax has customers that actually “deploy” generative AI

Kapoor cited nine companies that have deployed generative AI on DataStax’s Astra DB, the cloud database-as-a-service based on Cassandra. While many enterprise companies are experimenting like crazy with generative AI, few have moved to actual production at scale, out of concerns around things like safety and reliability. Indeed, the tension in the industry has risen markedly: The potential of generative AI may be huge, but most vendors of the technology agree they are still waiting for customers to start spending real money, which they expect to come next year when companies move to production in a serious way.

The DataStax customers with deployed LLMs include:

- Physics Wallah, an Indian online education platform, which serves 6 million users with a multimodal (text, images and audio) bot driven by a large language model. The company moved to deployment in 55 days, Kapoor said.

- Skypoint, a Portland-based Gen AI healthcare provider for seniors and care providers, that uses an LLM to furnish personalized treatments and interactions. Kapoor said Astra DB is helping free up 10+ hours a week for doctors to focus on patient care.

- Others include Hey You, Reel Star, Arre, Hornet, Restworld, Sourcetable, and Concide.

Kapoor said these companies are part of a fast-moving class of small and medium-sized businesses (SMBs) that are able to move more quickly, whereas enterprise companies are slowed by having to follow more regulations and to avoid safety issues in generative AI, including its tendency to hallucinate.

Reason 3: DataStax’s Cassandra tech prowess beats others on key LLM benchmarks

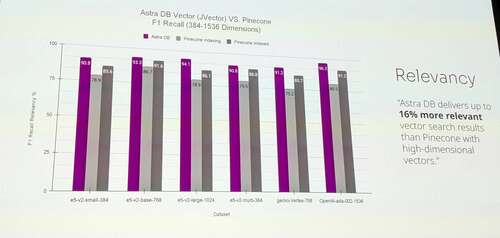

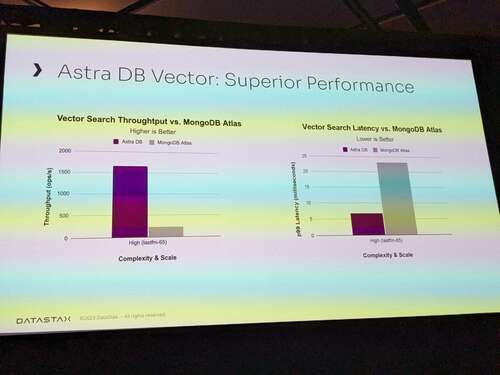

Kapoor said the vector search offerings in DataStax’s Astra DB perform better and return more relevant results than those of competitors. Vector search is a key requirement for generative AI databases, since it is how an AI application takes a user’s natural-language query and searches a company’s database for text or other data relevant to that query. DataStax benchmarked its JVector vector search technology against a leading vector database competitor, Pinecone, and found that JVector’s results are 16 percent more relevant than Pinecone’s. Kapoor said that’s a huge difference, considering how important it is to get the right answer. A third-party vendor will release the full performance benchmarking report in a few days, Kapoor said, but he showed a slide of some of the results (below). The benchmarking also showed DataStax having superior throughput, or the ability to process more transaction requests per unit of time, than both Pinecone and MongoDB.

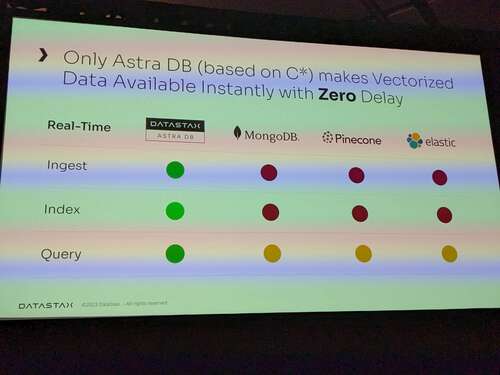

He said Astra DB is the only database that can make vectorized data available with zero latency, including indexing, ingestion and querying.
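For readers unfamiliar with vector search, the idea Kapoor described can be sketched in a few lines of Python. This is a minimal illustration, not DataStax’s or JVector’s implementation: the document names and the three-number “embeddings” are made up, whereas real applications get much longer vectors from an embedding model. The sketch scores every stored vector by cosine similarity to the query vector and returns the closest matches.

```python
import math

# Made-up 3-dimensional "embeddings" for illustration only; a real system
# would produce much longer vectors with an embedding model.
DOCUMENTS = {
    "package tracking FAQ": [0.9, 0.1, 0.0],
    "movie catalog page": [0.1, 0.9, 0.2],
    "cloud backup help": [0.2, 0.3, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vector, documents, k=2):
    """Score every stored vector (an exhaustive scan) and return the k closest."""
    scored = [
        (cosine_similarity(query_vector, vec), name)
        for name, vec in documents.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Hypothetical embedding of a query such as "where is my parcel?"
query = [0.8, 0.2, 0.1]
print(top_k(query, DOCUMENTS, k=1))  # prints ['package tracking FAQ']
```

Scanning every vector like this is fine for a toy example, but production vector databases typically rely on approximate nearest-neighbor indexes so that queries stay fast across millions of vectors; how well such an index balances speed against relevance is what benchmarks like the one Kapoor cited try to measure.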

Kapoor: “This Gen AI wave is going to be faster than any frickin’ thing we’ve seen”

Kapoor said that GenAI adoption will happen much faster than previous technology revolutions, since it builds on important foundations that are already there, such as web, mobile and cloud technologies.

He said that the “real fun” will start next year with more transformative and revenue-oriented use cases, including people using LLMs as “agents.” These agents allow LLMs to do more than just answer questions and make recommendations, he said, because they can orchestrate more complex tasks. Material revenue from generative AI deployments will show up in the second quarter of next year, with “more sizable” numbers hitting by the end of the year, when use cases in areas like retail and travel ramp up, Anuff said.

While Kapoor and Anuff were eager to point out the advantages of Cassandra, they conceded that the wider database sector is going to see a lift from generative AI. The vector database searches that Gen AI apps perform use 8 times the storage and about 10 times the compute of other database workloads, Anuff said. “That’s part of the reason why you see all of the cloud providers, and all of the database providers wanting that business,” he said. “If AI applications become a big deal, they are going to be the primary growth driver for both private and public database companies for easily the next five years.”