Atomontage is finally unveiling its Virtual Matter streaming platform that uses microvoxels for 3D graphics instead of polygons.

The long-anticipated 2024 edition of Atomontage’s platform is based on over two decades of research and development. The aim is to revolutionize 3D content creation, enabling users to effortlessly construct their 3D worlds. It is aimed at professionals such as game development artists.

The latest edition boasts several groundbreaking features, including integrated image-to-3D asset generation and simplified backend operations for uploading and voxelizing highly detailed 3D content into Virtual Matter.

“It’s exciting for us because we’re a deep tech software startup. And getting to a product is a long journey,” said Atomontage president Daniel Tabar, in an interview with GamesBeat. “It’s been years as a company then. But even before then this is a lot of R&D that went into breakthroughs with what we call Virtual Matter. This is a new way to describe the streaming microvoxels that we’ve talked about before.”

The platform has enhanced collaborative editing tools for real-time manipulation with multiple users. Alongside these advancements, the 2024 Edition debuts free native applications for Android, Windows, MacOS, and Meta Quest. The native iOS client will be available through a TestFlight open beta on the website, and soon directly in the App Store.

The company has 50 people and it has raised more than $4 million.

Voxels versus pixels

The name Virtual Matter is a way to get across the idea that voxels are fundamentally different (when it comes to building blocks for 3D images) from polygons, which are the most popular way to represent 3D structures in computer imagery.

Polygons are efficient at filling out 3D objects in a homogenous way, while voxels excel at representing spaces that are non-homogenously filled. A good example is the contrast between Fortnite and Minecraft. No Man’s Sky is also a good example of using voxels. Voxels are short for “volumetric pixels,” which are more like cubes. In the case of Virtual Matter, or microvoxels, we’re talking very small cubes.

In terms of the difference in what players will see, you can tell a game uses polygons when you zoom in on something and it becomes very pixelated. With voxels, that doesn’t happen as easily, so they can be used to efficiently display a lot of dense material in detail.

The resolution on the microvoxel images can be so high that you don’t see the pixels at all. And it’s also multiplayer cloud native.

“And what if those cubes in the voxels are so small that you don’t really see them anymore, and you have this kind of virtual clay, or what we call Virtual Matter,” Tabar said.

While the Android version is ready, the company is still working on the iOS version. Tabar said the new demos show you can drag and drop any image from the desktop or a browser tab and it will start generating a 3D model of that image.

Photoshop for 3D

Working on a smartphone, you will be able to pinch and zoom to get a particular point of view. The company hopes that the Minecraft and Roblox communities — with large pools of game developers — will start to notice.

“It’s like Photoshop for 3D,” Tabar said. “You can fix any issues you have with generative AI. Right now, to fix images in 3D, you have to be a kind of technical artist or drag it into Blender or Maya, a sophisticated editing tool. With Virtual Matter, we have tools where you can very easily copy and paste.”

You can share the URL for an image with anyone else and invite them to join through a smartphone, VR headset or web browser. And then you can work on an image together.

“We have a fundamentally different approach to 3D graphics,” Tabar said. Ordinarily, 3D artists use “these paper thin polygons with troublesome texture maps that are mapped onto them. They have these hard limits. You can only fit so many of those millions of polygons, especially in a smartphone image. And the texture maps can only be a certain resolution. Our approach is, again, different. And we don’t have hard limits. I’m not gonna say we have no limits, but we have much less.”

In the image of the ancient sarcophagus in the video, the images are stored on the server, meaning the limit is more like how much storage space is available there, Tabar said.

Atomontage’s proprietary Virtual Matter microvoxel technology has proven its versatility across diverse domains such as cultural heritage preservation, bioimaging research, archaeology, and site planning for construction. The platform’s integration into video game development marks an exciting new chapter for the technology.

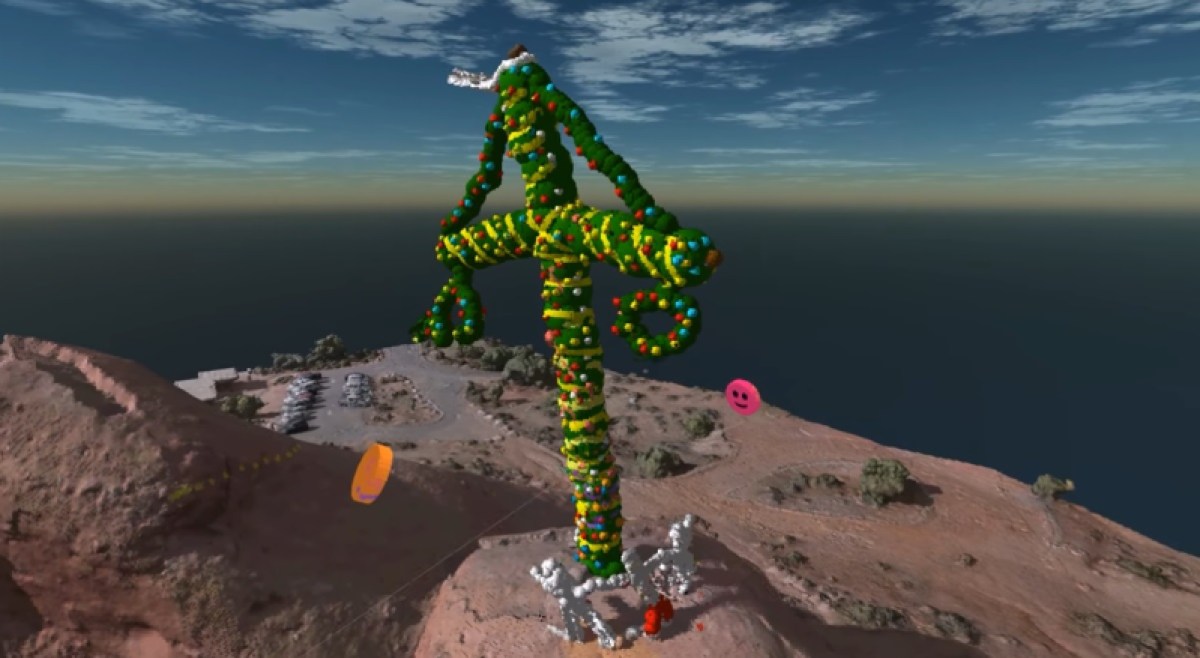

The platform’s cloud streaming capabilities have been significantly enhanced in the 2024 Edition, enabling dozens of users to partake in a shared 3D space, maneuvering millions of microvoxels in real-time, including personalized avatars. Atomontage plans to extend its support for concurrent users in subsequent updates.

The seamless collaboration with partner Common Sense Machines’ Cube AI platform empowers users to transform 2D images into editable Virtual Matter assets effortlessly. Additionally, the introduction of free desktop and mobile clients allows users to edit and experience their creations on-the-go. For Meta Quest 2 and Meta Quest 3 users, full VR immersion support amplifies the Virtual Matter experience.

Origins

Atomontage CEO Branislav Siles has worked on the technology for decades.

“In those years, we improved the underlying technology significantly. For example, we can deal with about 1,000 times more data today than we did years ago,” Siles said. “We have no problem dealing with streaming to any device, which is unheard of. And the improvement is that in the past, there was the possibility of connecting with like maybe five clients, but that was overloading the system already. Now we can throw dozens and dozens of clients into the same space that’s generated by a very cheap cloud server. That’s maybe two orders of magnitude of improvement.”

The more Siles studied the problem, the more he was convinced that the industry has to move away from polygons to get deep interaction and physics simulations.

“Physics depends almost entirely on the material properties and the inside of meshes and the surface representations are not enough for complex, interesting simulations, including biological growth. We need to go a different path than polygons. And with this understanding, I realized that it’s inevitable that we have to switch to a different representation,” Siles said. “From all those I knew, voxel representation was the only one that provided the fundamentals to get everything working — that is rendering, simulation, data processing, streaming, deep interaction, scalability, all the things that need to be there.”

Atomontage’s microvoxel technology presents an evolution from traditional polygon-based 3D modeling, offering finely detailed interactivity while maintaining low hardware demands. This technology promises faster load times and cloud-streamed accessibility across various devices, ensuring a consistent high-fidelity 3D experience for users across platforms.

“There’s a fundamental kind of difference in our approach that makes it possible to have this stuff be very finely detailed and also change when people edit it,” Tabar said. “This is really a big change because we’ve seen levels of detail techniques done for polygons for a long time.”

With Atomontage, the server only sends the details that are in your field of view. In Microsoft Flight Simulator, the servers can serve polygons in a stream. But the landscape served in a flight map is static. The same goes for the background in a Doom Eternal combat scene. You can fight and kill moving enemies, but the background of the level is very static, Tabar said.
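To make the idea of sending only what is in the field of view more concrete, here is a minimal Python sketch. It is not Atomontage's implementation, which the article does not describe. The function name `visible_chunks`, the chunk layout, and the `lod_distance` parameter are all hypothetical, and `forward` is assumed to be a unit vector.

```python
import math

def visible_chunks(chunks, eye, forward, fov_degrees, lod_distance):
    """Pick which chunks a server might send to one viewer (illustrative only).

    chunks: dict mapping a chunk id to the (x, y, z) center of that chunk.
    Returns (chunk_id, lod) pairs, where lod 0 is the finest detail and each
    higher lod is used once the distance doubles past lod_distance.
    """
    cos_half_fov = math.cos(math.radians(fov_degrees) / 2)
    selected = []
    for chunk_id, center in chunks.items():
        offset = [c - e for c, e in zip(center, eye)]
        distance = math.sqrt(sum(d * d for d in offset))
        if distance == 0:
            selected.append((chunk_id, 0))  # viewer is inside this chunk
            continue
        # Cosine of the angle between the view direction and the chunk.
        cos_angle = sum(d * f for d, f in zip(offset, forward)) / distance
        if cos_angle < cos_half_fov:
            continue  # outside the view cone: nothing is sent
        lod = int(math.log2(max(distance / lod_distance, 1.0)))
        selected.append((chunk_id, lod))
    return selected

# Hypothetical scene: one chunk nearby, one far ahead, one behind the viewer.
scene = {"near": (0, 0, 5), "far": (0, 0, 40), "behind": (0, 0, -5)}
result = visible_chunks(scene, eye=(0, 0, 0), forward=(0, 0, 1),
                        fov_degrees=90, lod_distance=10)
print(result)
```

In this toy scene the chunk behind the viewer is skipped entirely, the nearby chunk is requested at full detail, and the distant chunk is requested at a coarser level.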

“When we talk about this deep interactivity, like in Minecraft, everything is dynamic,” Tabar said.

Atomontage started doing its first demos in 2018, and the last update we did on them was in 2021. It took a long time to get the tech right.

“We’re hacking through a jungle and blazing a new trail, and that takes a long time,” Tabar said.

The Virtual Matter 2024 edition

The company says the 2024 edition is only the beginning, with plans to unveil advanced game development tools, such as physically-based rendering, scripting environments, physics engines, animation tools, audio streaming, and more in subsequent updates.

Key features include native clients for desktop, mobile and VR; image-to-3D generation; self-serve uploading and voxelizing; “Photoshop-for-3D” tools; embeddable montages; and improved streaming performance.

The Atomontage 2024 Edition is currently available on the Atomontage website as free public Montages, with various pricing options and a 14-day trial. Periodic updates are expected to unveil new features and expanded capabilities in the following months.

Atomontage is still working on its minimum viable product for game developers.

“They’re excited as they see the potential,” Tabar said.

Atomontage is moving into scripting technology, and animation and physics are also coming very soon, Siles said. The company will productize them in the future.

I asked how long it would take someone using Atomontage to create a 3D image, film or game. Tabar said it is hard to give concrete comparisons.

“We’re finding that the still-early tools we’re building for editing Virtual Matter are far simpler to understand and to work with compared to polygon-based workflows, where complex UV mappings to textures and triangle topology/budgets need to always be carefully managed,” he said. “The tools and editors we’re building can be kept far simpler, since the ‘stuff’ (or Matter) that they work on is conceptually far simpler as well: just tiny cubes all the way through. Each voxel can contain color, but also normals, shaders, and other physical properties that can simply be painted or generated directly onto them, just like you would in Photoshop on 2D pixels.”
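A rough Python sketch can show what "painting directly onto voxels" might look like in the simplest case. This is an assumption-laden illustration, not Atomontage's data format: the `Voxel` fields, the dictionary-based grid, and the `paint_sphere` brush are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Voxel:
    # Per-voxel properties of the kind Tabar lists; the field names are made up.
    color: tuple                      # (r, g, b), each 0-255
    normal: tuple = (0.0, 0.0, 1.0)   # surface direction
    roughness: float = 0.5            # stand-in for "other physical properties"

def paint_sphere(grid, center, radius, color):
    """Recolor every existing voxel within radius of center; return the count."""
    cx, cy, cz = center
    painted = 0
    for (x, y, z), voxel in grid.items():
        if (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= radius ** 2:
            voxel.color = color
            painted += 1
    return painted

# A solid 4x4x4 block of grey voxels, stored sparsely as position -> Voxel.
grid = {(x, y, z): Voxel(color=(128, 128, 128))
        for x in range(4) for y in range(4) for z in range(4)}

# Dab red paint on one corner with a brush of radius 1.
count = paint_sphere(grid, center=(0, 0, 0), radius=1, color=(255, 0, 0))
print(count, grid[(1, 0, 0)].color, grid[(1, 1, 0)].color)
```

There is no UV map or triangle budget in this picture: the brush simply visits cubes and overwrites a property, which is the 2D-pixel analogy Tabar draws.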

All this amounts to a workflow that not only is much faster to create in, but also has a much lower threshold for more people to get into, Tabar said.

“You don’t need to be a Renaissance person who can both work the most intricate technical user interfaces in all of computer science (conventional 3D modeling toolchains), and also have the artistic skill to make something worthwhile,” Tabar said.

In fact, with the generative AI for 3D integrations that the company is launching in the Atomontage 2024 Edition this week, one needs just a little time and almost no skill to create compelling content.

For instance, the image below is a mashup of two pieces created through Atomontage, using the 3D generative AI partners at Common Sense Machines (CSM): The teddy above was from a single image from some 2D AI gen tool like Midjourney, and the dinosaur came from a simple cell phone photo of a plastic toy. Both were created and put together with absolute minimal artistic and technical skill.

What it means for hardware

Siles said the company uses the capabilities of any machine, whether that is a server with CPUs or the client with a CPU and a GPU.

The company doesn’t need a GPU on the server side; all the Montages are currently hosted off cheap, generic Linux cloud machines that can serve several Montages each, with dozens of users connected to each of those. That makes it more economical on the server side.

“We don’t have to develop new kinds of processing units,” Siles said.

Tabar added, “GPUs are expensive these days, more than ever. But that is one of the things that changes in the difference between our solutions. We don’t need a GPU at all on the server side.”

The software doesn’t need a GPU on the client. However, if a beefy GPU is available on the client, Atomontage can crank up the shown voxel resolution, screen pixel resolution, and other effects like antialiasing, depth of field, Physically Based Rendering shaders, screen space ambient occlusion and more.

“The takeaway is that we have a real decoupling between the potentially massive details in the 3D data stored on the server, and the view that each connected client gets,” Tabar said. “What they are shown depends on their device and connection – both aspects comparing favorably vs what can be streamed and rendered with traditional polygon-based techniques. To contrast, Unreal Engine 5’s Nanite feature is also a way to decouple detailed polygon surfaces to what gets rendered as micropolygons on beefy gaming GPUs.”

However, Tabar added, “In my mind, the most important practical difference is that our Virtual Matter allows for simultaneous editing of all this detailed stuff in real-time across all these different devices and their different shown levels of details. That’s something polygons fundamentally can’t do: Re-compute heavy Levels Of Detail on the fly when anything changes due to simulation, generation, or manual editing. In our solution, the LODs inherently always stay in sync, even when many people are messing with the same huge volumetric 3D data at the same time.”
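One way to picture levels of detail that stay in sync under editing is a voxel pyramid in which each coarser cell stores a running sum of the fine voxels beneath it. The Python sketch below is a generic technique chosen for illustration, not a description of how Virtual Matter works internally. The class `VoxelPyramid` and its methods are hypothetical names.

```python
from collections import defaultdict

class VoxelPyramid:
    """Sparse voxel values with coarser levels kept as running sums."""

    def __init__(self, levels):
        self.levels = levels
        # Level 0 is the finest; each level up halves the resolution per axis.
        self.sums = [defaultdict(float) for _ in range(levels)]
        self.counts = [defaultdict(int) for _ in range(levels)]

    def set_voxel(self, pos, value):
        """Write one fine voxel and patch only its ancestor in each level."""
        old = self.sums[0].get(pos)
        is_new = old is None
        delta = value - (0.0 if is_new else old)
        x, y, z = pos
        for level in range(self.levels):
            key = (x >> level, y >> level, z >> level)
            self.sums[level][key] += delta
            if is_new:
                self.counts[level][key] += 1

    def sample(self, pos, level):
        """Average value of the occupied fine voxels under pos at a level."""
        key = tuple(c >> level for c in pos)
        n = self.counts[level].get(key, 0)
        return self.sums[level].get(key, 0.0) / n if n else None

pyramid = VoxelPyramid(levels=3)
pyramid.set_voxel((0, 0, 0), 1.0)
pyramid.set_voxel((1, 0, 0), 3.0)
before = pyramid.sample((0, 0, 0), level=1)   # coarse view of both voxels

pyramid.set_voxel((1, 0, 0), 5.0)             # one user edits a fine voxel
after = pyramid.sample((0, 0, 0), level=1)    # coarse view is already current
print(before, after)
```

Each edit touches one cell per level, so a client viewing a coarse level and a client viewing the finest level both see the change without any separate rebuild step. A polygon mesh has no equally direct counterpart, which is the contrast Tabar is drawing.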

Tabar noted that polygons only define surfaces, not what’s inside of things. In that way, they’re missing a pretty big piece of the reality that Atomontage is trying to represent, where almost nothing is just a paper-thin shell.

All this is what enables what Tabar calls deep interactivity: The ability for people to collaboratively dig into things to discover what’s inside, and to build whatever they want out of conceptually simple building blocks that make up everything.

“Arguably, deep interactivity is what made Minecraft the best-selling game of all time… we all know it wasn’t its photorealistic graphics,” said Tabar.

“What Atomontage brings to the table is deep interactivity at fidelity high enough to unlock realistic representations on regular devices (like phones or mobile XR headsets) – a nut so hard that it took us over 20 years to crack, where countless others have failed or given up,” Tabar said.
