OPINION: Apple Intelligence was, hands-down, the most exciting announcement at WWDC 2024 earlier this week.

It’s Apple’s long-awaited entry into the world of generative AI and it didn’t disappoint, with a whole host of AI-powered features that match – and in places, go much further than – the Android competition.

Take Siri for example; the virtual assistant will be able to interact with apps on your behalf, allowing you to say things like ‘play the podcast I was listening to last week’ or ‘show me the last document I opened in Files’, with support for third-party apps also in the works.

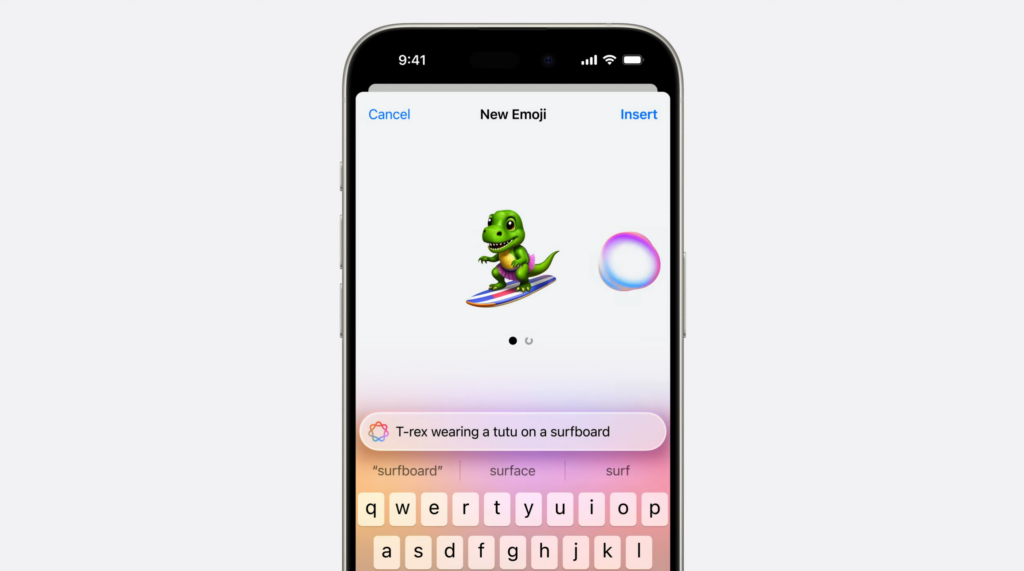

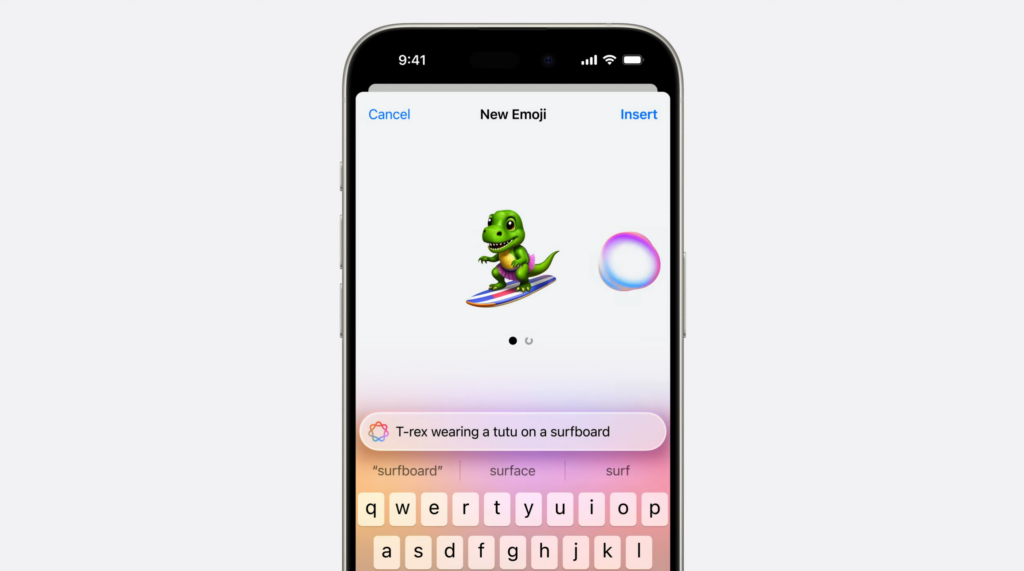

It also features integration with one of the most popular AI chatbots around, ChatGPT (powered by GPT-4o), which will analyse images and, for example, suggest a new colour to paint the walls in your living room. Throw in generative AI writing support, the ability to remove distractions from photos, Genmoji and a plethora of other AI-powered features and it’s easily the biggest feature that Apple has announced in years.

So, what’s the big problem? It all boils down to which models of iPhone will be able to take advantage of Apple Intelligence once it’s released with the big iOS 18 update due later this year. Reader, I’m afraid to say that it’s an exclusive list.

So exclusive, in fact, that only two models of iPhone can support it: the iPhone 15 Pro and iPhone 15 Pro Max. Yep, even if you bought an iPhone 15 or iPhone 15 Plus this year, you won’t be able to take advantage of the GenAI tech when it’s made available.

As for why, it’s a frustratingly simple answer: only the A17 Pro has been deemed powerful enough to handle the Apple Intelligence experience, along with M-series chips on the iPad and Mac fronts. That’s particularly important because of Apple’s decision to handle most of the AI processing entirely on-device.

It’s a move that’s sure to be massively appreciated by the more privacy-focused among us, continuing Apple’s years-long dedication to user data privacy, but does the average consumer really care? I’d wager that most people will care more that they can’t access this new snazzy set of features on their iPhone, even if it’s only a year old.

Now, don’t misunderstand me here; I completely agree that on-device generative AI processing is the right way to handle this. It stops user inputs from being stored in a cloud server somewhere, and also stops potentially sensitive data from being used for LLM training and served up to other users.

Instead, I feel the better approach would’ve been to introduce a handful of the less demanding Apple Intelligence features to older models of iPhone, bringing tens of millions of iPhone users into the fold instead of leaving them out in the cold completely.

Now I’m not an AI scientist and I don’t have intimate knowledge of just how Apple Intelligence works – shocking, I know – so I can’t say which of the features are less demanding than others, but I’ve got to assume that on-device generative fill is more demanding than transcribing voice recordings.

Even if the process takes slightly longer on older devices, I think that’d be an acceptable compromise, and one that gives consumers the choice of whether to upgrade to an iPhone 15 Pro or Pro Max (or one of the models of iPhone 16 later this year) rather than their hand being forced.

I think that’s the hardest pill to swallow for me; this is an obvious way for Apple to get existing iPhone owners to upgrade their devices early, even though the likes of the iPhone 14 Pro and 14 Pro Max and the iPhone 15 and 15 Plus are perfectly capable devices in practically every other regard – especially with Apple confirming recently that iPhone sales have slumped by 10% year-on-year.