A hot potato: AMD is pushing back against Nvidia’s claim that the H100 GPU accelerator is faster than the competition. Team Red says Nvidia didn’t tell the whole story, and has provided new benchmark results based on industry-standard inferencing workloads.

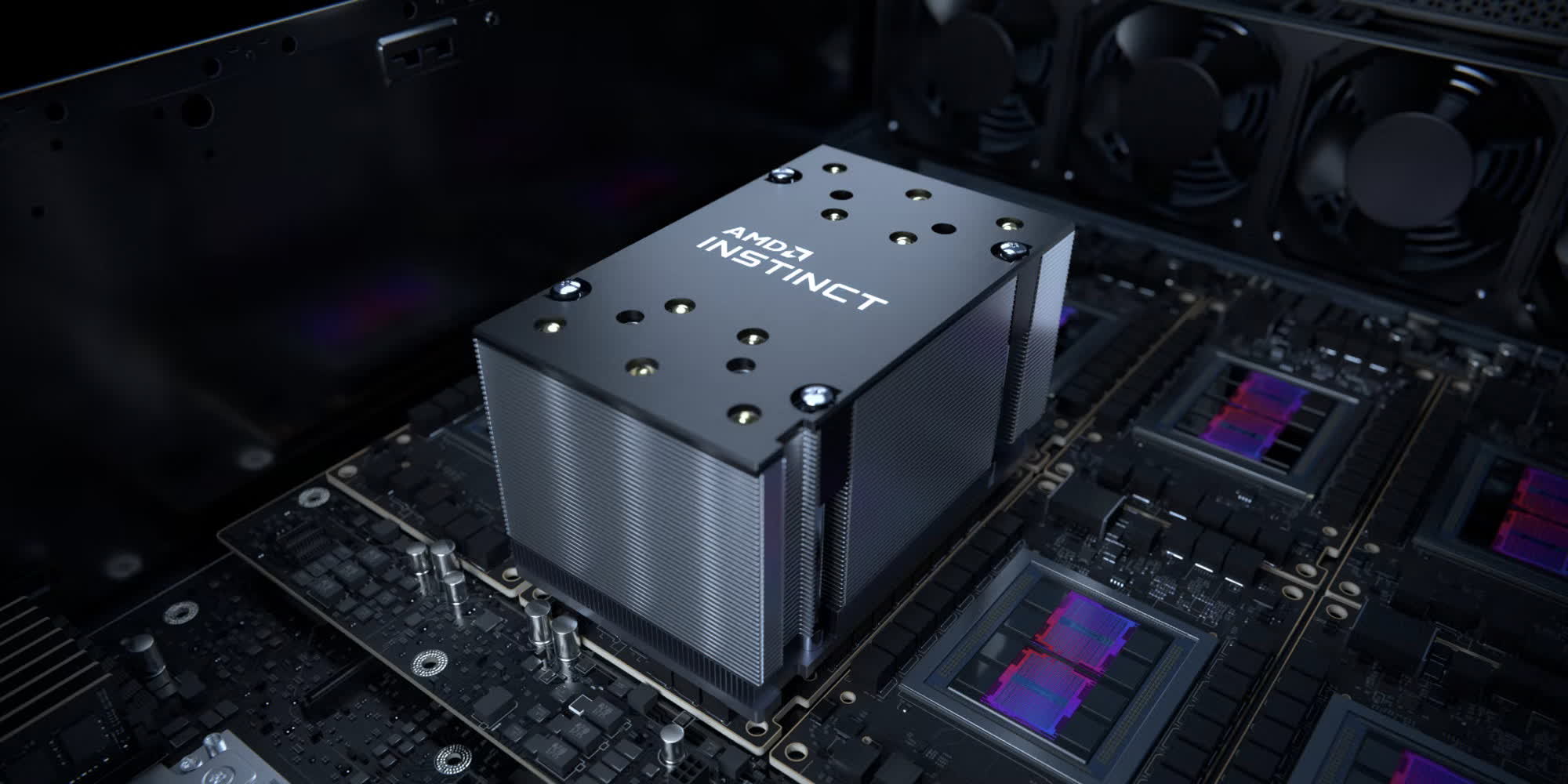

AMD has finally launched its Instinct MI300X accelerators, a new generation of server GPUs designed to deliver compelling performance for generative AI workloads and other high-performance computing (HPC) applications. MI300X is faster than H100, AMD said earlier this month, but Nvidia tried to refute its competitor’s statements with new benchmarks released a couple of days ago.

Nvidia tested its H100 accelerators with TensorRT-LLM, an open-source library and SDK designed to efficiently accelerate generative AI algorithms. According to the GPU company, an H100 running TensorRT-LLM with proper optimizations was 2x faster than AMD’s MI300X.

AMD is now providing its own version of the story, refuting Nvidia’s statements about H100 superiority. According to AMD, Nvidia used TensorRT-LLM on H100 rather than the vLLM library used in AMD’s benchmarks, and compared FP16 performance on the Instinct MI300X against FP8 performance on H100. Furthermore, Team Green converted AMD’s published performance data from relative latency numbers into absolute throughput.
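To see why the choice between relative latency and absolute throughput matters, here is a minimal Python sketch. Every number and function name in it is hypothetical and chosen only for illustration; none of it comes from AMD's or Nvidia's published data. It assumes a simple model in which throughput is the number of tokens generated divided by the time the batch takes to finish.

```python
def relative_latency(latency_s: float, baseline_latency_s: float) -> float:
    """Latency as a multiple of a baseline run (lower is better)."""
    if baseline_latency_s <= 0:
        raise ValueError("baseline latency must be positive")
    return latency_s / baseline_latency_s


def throughput_tokens_per_s(latency_s: float, output_tokens: int,
                            batch_size: int = 1) -> float:
    """Tokens generated per second for one batch that finishes in latency_s."""
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return batch_size * output_tokens / latency_s


# Hypothetical measurements, NOT vendor data.
baseline_latency_s = 2.0    # accelerator A, batch size 1
candidate_latency_s = 1.0   # accelerator B, batch size 1
output_tokens = 128         # tokens generated per request

# Relative latency of B against A: 0.5, i.e. B takes half the time.
rel = relative_latency(candidate_latency_s, baseline_latency_s)

# The same runs expressed as absolute throughput (64 vs 128 tokens/s).
base_tput = throughput_tokens_per_s(baseline_latency_s, output_tokens)
cand_tput = throughput_tokens_per_s(candidate_latency_s, output_tokens)

# A batch-size-8 run at the baseline's latency yields 512 tokens/s, so the
# throughput ranking can flip even though per-request latency did not improve.
batched_tput = throughput_tokens_per_s(baseline_latency_s, output_tokens,
                                       batch_size=8)
```

At a fixed batch size, the two views carry the same information, since a relative latency of 0.5 corresponds to twice the throughput. Once batch size, datatype or software stack differ between the runs, however, the converted figures are no longer a like-for-like comparison.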

AMD suggests that Nvidia tried to rig the game, while AMD itself is still busy finding new ways to unlock performance and raw power on Instinct MI300 accelerators. The company provided the latest performance levels achieved by the Llama 70B large language model on MI300X, showing an even larger lead over Nvidia’s H100.

With the vLLM inference library running on both accelerators, MI300X achieved 2.1x the performance of H100 thanks to the latest optimizations in AMD’s software stack (ROCm). Earlier in December, the company had highlighted a 1.4x performance advantage over H100 with an equivalent datatype and library setup. vLLM was chosen because of its broad adoption within the community and its ability to run on both GPU architectures.

Even with TensorRT-LLM on H100 and vLLM on MI300X, AMD was still able to deliver a 1.3x improvement in latency. And with lower-precision FP8 and TensorRT-LLM on H100 against higher-precision FP16 and vLLM on MI300X, AMD’s accelerator still appeared to show a performance advantage in absolute latency.

vLLM doesn’t support FP8, AMD explained, and the FP16 datatype was chosen for its popularity. AMD said its results show that MI300X using FP16 is comparable to H100 even when the Nvidia accelerator uses its best-performing settings, namely the FP8 datatype and TensorRT-LLM.