Thomson Reuters (TR) recently released new artificial intelligence (AI) solutions that help legal professionals draft contracts and briefs and research complex legal topics more efficiently.

David Wong, chief product officer at Thomson Reuters, told FOX Business in an interview that the two main areas where the company’s tools help customers are finding information in specific professional databases and generating content that’s tailored to the task at hand.

“As we’ve seen, generative AI is really good at information retrieval, so almost all the really kind of mind-blowing use cases we’ve seen with ChatGPT and things like that are about synthesizing and pulling information out from these databases, often the web — but for us it’s the proprietary content and databases that we have,” Wong said.

“A lot of our software tools are built around either creating a draft of a contract, a brief, a tax return,” he added. “They’re all essentially written work product and, of course, generative AI is also very good at producing written work, so that’s why we’re excited about the application of large language models and gen AI to the product problems that we solve at TR.”

“I like to think of it as like a SparkNotes or Wikipedia of the law,” says Thomson Reuters chief product officer David Wong of the Practical Law tool. (Eduardo MunozAlvarez/VIEWpress via Getty Images / Getty Images)

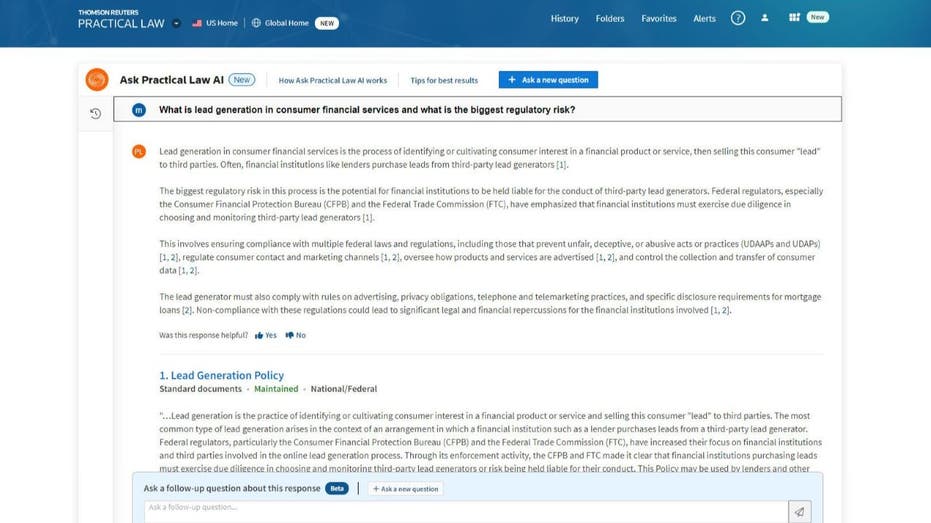

Thomson Reuters launched AI capabilities in its Practical Law tool, which uses generative AI to quickly provide legal professionals with the right answers to their questions along with tools and insights to improve their workflow.

“Practical Law is a product used by lawyers across the industry — so lawyers at big corporations, lawyers within law firms, independent, individual lawyers — to provide practical guidance on how to get their work done,” Wong explained. “I like to think of it as like a SparkNotes or Wikipedia of the law, but really helping you to do your work as a contrast to Westlaw, which is a deep research system for the details of the law.”

Thomson Reuters Ask Practical Law tool with an AI-generated answer. (Thomson Reuters / Fox News)

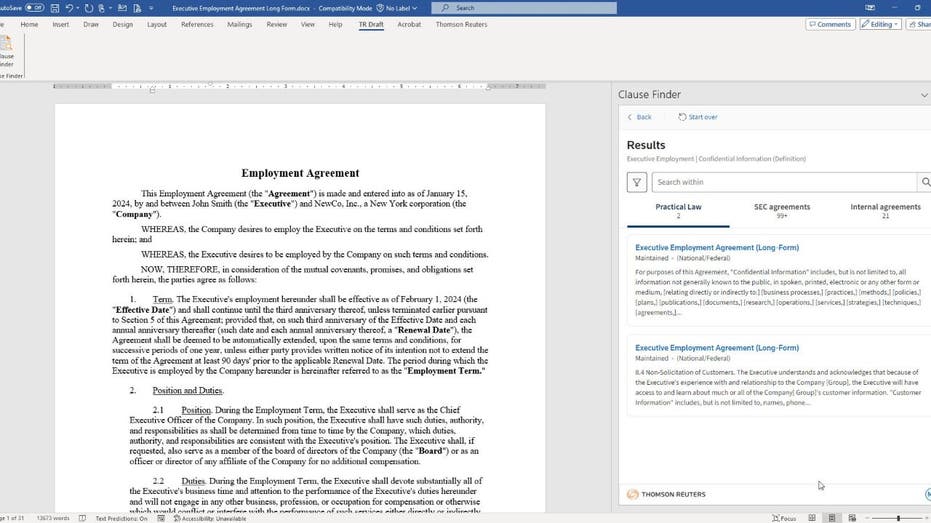

The company is also building out new AI capabilities within its Practical Law platform, including Ask Practical Law AI, which gives users summaries of platform content curated by a team of more than 650 lawyers. It has also rolled out a tool that lets legal professionals find specific clauses from the platform, from Securities and Exchange Commission agreements and from their own internal documents while working in Microsoft Word.

“Ask Practical Law AI… is a question-and-answer capability to get you to the right answer to the question as well as resources within our Practical Law product,” Wong explained. “We’ve launched Practical Law Clause Finder, which is a clause database used by lawyers to draft. So you have this massive database of already curated clauses for any type of contract drafting you’re trying to do, and it’s tied in with Microsoft Word.”

| Ticker | Security | Last | Change | Change % |
|---|---|---|---|---|
| TRI | THOMSON REUTERS CORP. | 159.29 | +1.50 | +0.95% |

Thomson Reuters is also bringing its AI-powered CoCounsel tool, which launched in the U.S. last year, to international markets: it is now formally available in Australia and Canada and is in development for the U.K. and Europe.

Thomson Reuters Clause Finder. (Thomson Reuters / Fox News)

The CoCounsel tool uses generative AI to help customers with tasks such as preparing for depositions, drafting correspondence, searching databases or documents, summarizing documents and extracting data from contracts.

Wong explained that Thomson Reuters’ AI systems are built on commercially available large language models, with the company training the tools to “furnish the right law and parse the right cases” so that the models have less irrelevant information to filter out when answering a question.

Answers supplied to users are footnoted, and Wong added that Thomson Reuters’ AI systems have been trained to tell users when the tool hasn’t been able to retrieve the sought-after result, which he says helps prevent AI hallucinations and builds trust with customers.

“We obviously don’t want the system to generate ‘I don’t know’ 50% of the time — but if we do show that we weren’t able to answer a question and we’re transparent that we can’t, it creates incredible trust because that’s one of our most powerful ways of trying to limit hallucination,” Wong explained. “It’s much better to be able to say ‘I don’t know’ than to make something up.”