BING-JHEN HONG

Introduction & Investment Thesis

Nvidia (NASDAQ:NVDA)(NEOE:NVDA:CA) helps its customers accelerate workloads across thousands of compute nodes by providing a full-stack platform that involves designing and manufacturing graphics processing units (GPUs), chipsets, and software. The stock has massively outperformed the S&P 500 and Nasdaq 100 YTD.

The company reported its Q1 FY25 earnings, where revenue and earnings grew 262% and 690% YoY, respectively, beating estimates. During the quarter, Data Center revenue grew 427% YoY and contributed 87% of total revenue, as demand remained strong across large cloud providers, enterprises, and consumer internet companies. Inferencing contributed 40% of total Data Center revenue. I believe that Nvidia is positioning itself to drive growth in this market with its full-stack platform, which is designed to optimize and deliver value to its customers in real time with a higher return on investment, especially as demand for its H200 and Blackwell chips is still ahead of supply. Nvidia is also gaining market share in the public sector as governments around the world prepare to invest in Sovereign AI.

The stock could see short-term volatility. Investor optimism is elevated amid an AI capex boom that could decelerate in a macroeconomic slowdown, and competitors are slowly catching up, especially hyperscalers building custom chips to meet their customers’ AI needs. Even so, I believe that Nvidia is well positioned to lead AI-enabled accelerated computing, where enterprises will likely increasingly adopt Nvidia’s AI infrastructure for faster training, inferencing, and networking across use cases and workloads. I also believe that Nvidia’s penetration of the public sector will further diversify its revenues, giving it more protection from large-scale cyclicality.

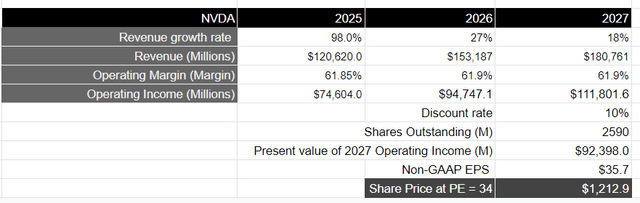

Assuming that Nvidia grows its revenue in line with consensus estimates, coupled with earnings growing in line with revenue growth until FY27, I believe there is still room for upside of 16–17% in the stock, making it a “buy”.

The good: Inference contributing 40% to Data Center Revenue with the rise of AI factories, Sovereign AI to become a billion-dollar business, Blackwell GPUs in production, and Margins continuing to expand.

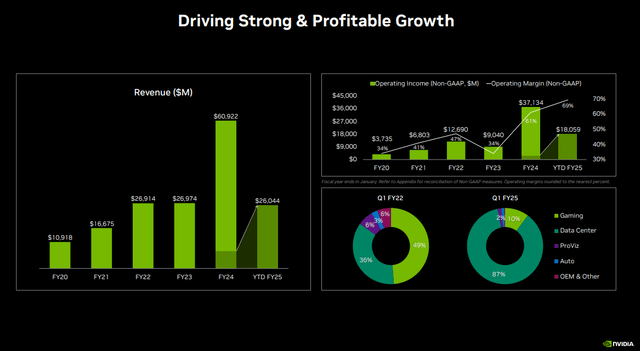

Nvidia reported its Q1 FY25 earnings, where it saw its revenue grow 262% YoY to $26.0B. Out of the $26B in Revenue, Data Center revenue contributed close to 87%, growing 427% YoY to $22.6B, while the remaining 13% was derived from a combination of Gaming, Professional Visualization, Auto, OEM and others. Given the magnitude of growth in the Data Center, I am going to focus this post on understanding the drivers, innovation levers, and future prospects pertaining to the Data Center business in order to build my investment thesis for Nvidia.
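The segment split quoted above can be checked with a few lines of arithmetic. This is only a rough sketch using the rounded figures from the text; the variable and function names are my own, and the year-ago values are backed out from the growth rates rather than taken from the company's filings.

```python
# Figures quoted in the text (USD billions, Q1 FY25), rounded as reported there.
total_revenue = 26.0
data_center_revenue = 22.6
total_growth_yoy = 2.62        # +262% YoY
data_center_growth_yoy = 4.27  # +427% YoY


def implied_prior(current: float, growth: float) -> float:
    """Back out the year-ago figure from a current value and its YoY growth rate."""
    return current / (1.0 + growth)


data_center_share = data_center_revenue / total_revenue
other_revenue = total_revenue - data_center_revenue
prior_total = implied_prior(total_revenue, total_growth_yoy)
prior_data_center = implied_prior(data_center_revenue, data_center_growth_yoy)

print(f"Data Center share of revenue: {data_center_share:.1%}")
print(f"All other segments: ${other_revenue:.1f}B")
print(f"Implied year-ago total revenue: ${prior_total:.2f}B")
print(f"Implied year-ago Data Center revenue: ${prior_data_center:.2f}B")
```

Because the inputs are rounded, the implied year-ago values are approximations and should not be read as reported numbers.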

Q1 FY25 Earnings Slides: Strong revenue coupled with expanding profitability

To start, the growth in Data Center revenue was driven by customers of all types, with large cloud providers contributing a mid-40s percentage of Data Center revenue, while enterprises and consumer internet companies contributed the remainder, a mid-50s percentage. I believe the strong demand for Nvidia’s GenAI infrastructure indicates that Nvidia remains materially ahead of its competitors in its ability to deliver training and efficiency, thus leading to a strong return on investment (ROI) for cloud providers as companies transition from general-purpose to accelerated computing. During the earnings call, Colette Kress, CFO at Nvidia, outlined that for every $1 spent on Nvidia’s AI infrastructure, cloud providers have an opportunity to earn $5 in GPU instance hosting revenue over a span of four years.

Meanwhile, enterprises also drove strong sequential growth in Data Center, with Nvidia management projecting automotive to be their largest enterprise vertical within the Data Center segment in FY25, as Nvidia AI infrastructure is positioned uniquely to enable autonomous driving capabilities across the industry.

Finally, the management also expects to see growth opportunities in consumer internet companies as demand for AI compute continues to grow with inference scaling from increasing model complexities, the number of users, and the number of queries per user.

This brings me to the distribution of training vs. inference revenue for Nvidia’s Data Center business. In Q1, inference drove about 40%, and I expect inference as a share of total Data Center revenue to grow as LLMs become an important part of daily workloads, such as with the rise of copilots, digital assistants, and others. During the earnings call, management discussed how Nvidia is planning to stay ahead by integrating its hardware and software advancements into one full stack in order to power the “next industrial revolution” of accelerated computing by building “AI factories,” which are optimized for refining data, training, inference, and generating AI. For instance, CUDA, which is the software layer, enabled Nvidia to boost inference capabilities on its H100 by 3x. I believe that because Nvidia controls every piece of its infrastructure, it is positioned to drive faster improvements than others can, while its lack of dependency on third-party vendors lets it optimize and deliver value to its customers in real time.

Meanwhile, Nvidia continues to expand its Data Center customer base to governments around the world as they invest in “Sovereign AI,” which it defines as a “nation’s capability to produce artificial intelligence using their own infrastructure, data, workforce, and business networks.” The company expects that Sovereign AI revenue will approach the high single-digit billions in FY25. I believe that Nvidia is well positioned with its end-to-end compute to networking technologies, full-stack software, and AI expertise to guide its public sector clients better than others in training geo-specific models, with Italy, France, Singapore, and Japan choosing Nvidia as their partner.

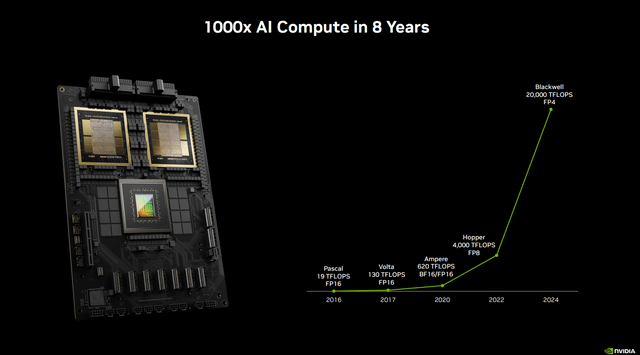

Turning our attention to product innovation, Nvidia delivered its first H200, which has double the inference performance of H100, to OpenAI in Q1 in order to power GPT-4o. Meanwhile, Meta’s Llama models are using Nvidia’s HGX H200 servers, which are Nvidia-built computer systems offering H200 chips, memory, storage, and networking to deliver 24,000 tokens per second per server, realizing an average ROI of $7 in revenue for every $1 spent on these servers over a period of four years. Management also mentioned that its newest Blackwell GPUs, which train models 4x faster than H100 with 30x more powerful inference, are in full production, with demand likely to exceed supply well into the next year.

Q1 FY25 Earnings Slides: The role of Blackwell in Accelerated Computing

Shifting gears to profitability, Nvidia saw its gross profits expand 339%, with a gross margin expansion of 1,400 basis points to 78.3%, which was driven by lower inventory targets, favorable component costs, as well as cost and time-to-solution savings from the full-stack architecture. In terms of operating income, the company generated $16.9B in GAAP terms with a margin of 65%, which represents an improvement of 3,500 basis points from the previous year. This was primarily driven by the exponential increase in Data Center revenue: the massive capex boom in AI acceleration has created demand for ever greater GPU computing clusters, which has allowed the company to rapidly expand its operating leverage.

The bad: Macroeconomic slowdown can put brakes on the AI capex boom along with growing competition

While Nvidia’s Data Center performance has been nothing short of extraordinary with the boom in capex spending as companies race to build the most powerful LLMs, the key question is how long this capex phase will last. As we know, infrastructure investments are notoriously cyclical, which can often hurt semiconductor companies. Nvidia hasn’t yet seen any signs of weakness from its customers, with the demand for H200 and Blackwell well ahead of supply into next year. However, should we see a macroeconomic slowdown with inflation still above the Fed’s 2% target and interest rates kept higher for longer, I would expect Nvidia’s customers to scale back on their capex plans for AI training. While the growing inference market as well as revenue diversification into the public sector can cushion the impact of a capex slowdown to a certain extent, such a slowdown can certainly hurt investor optimism.

In terms of the competitive landscape, both AMD (NASDAQ:AMD)(AMD:CA) and Intel (NASDAQ:INTC)(INTC:CA) are small compared to Nvidia in this market, and I believe that will likely remain the case for the coming two to three years. Long term, as Intel and AMD build out their software stack along with versatile hardware, they should be able to take some market share in the data center GPU market. However, with its stack and scale, Nvidia is extremely well positioned to move fast and keep up with the pace of innovation in AI. On the other hand, hyperscalers such as Google (NASDAQ:GOOG)(GOOG:CA), Amazon (NASDAQ:AMZN)(AMZN:CA), Microsoft (NASDAQ:MSFT)(MSFT:CA) and other deep-pocketed players should be able to build custom chips to meet their customers’ AI needs through attractive pricing, which could pose competition for Nvidia.

Tying it together: Although short-term volatility may arise, Nvidia is a long-term “buy”.

Looking forward, Nvidia expects to generate $28B in revenue in Q2, which would represent a sequential and annual increase of 8% and 107%, respectively. On a sequential basis, it will be growing at a slower rate than in the prior quarter, where revenue grew 18% QoQ. With a GAAP gross margin of 74.8%, coupled with operating expenses of $4.0B, Nvidia should generate a GAAP operating income of $16.9B in Q2 with a margin of 60.5%, down approximately 450 basis points sequentially.
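The Q2 guidance arithmetic can be reproduced as follows. This is a minimal sketch that uses only the figures quoted in the paragraph above plus the 65% Q1 GAAP operating margin cited earlier; the variable names are my own.

```python
# Inputs quoted in the text (USD billions unless noted).
q1_revenue = 26.0
q1_operating_margin = 0.65     # Q1 FY25 GAAP operating margin
q2_revenue_guide = 28.0
q2_gross_margin = 0.748        # guided GAAP gross margin
q2_operating_expenses = 4.0    # guided GAAP operating expenses

# Operating income = gross profit minus operating expenses.
q2_gross_profit = q2_revenue_guide * q2_gross_margin
q2_operating_income = q2_gross_profit - q2_operating_expenses
q2_operating_margin = q2_operating_income / q2_revenue_guide

# Sequential revenue growth and the change in operating margin in basis points.
sequential_growth = q2_revenue_guide / q1_revenue - 1.0
margin_change_bps = (q2_operating_margin - q1_operating_margin) * 10_000

print(f"Q2 gross profit: ${q2_gross_profit:.1f}B")
print(f"Q2 operating income: ${q2_operating_income:.1f}B")
print(f"Q2 operating margin: {q2_operating_margin:.1%}")
print(f"Sequential revenue growth: {sequential_growth:.1%}")
print(f"Operating margin change: {margin_change_bps:.0f} bps")
```

The margin change comes out slightly under 450 basis points because the 65% Q1 margin is itself a rounded figure.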

Although it is difficult to predict when the capex boom in AI training will likely hit a peak, it is important to note that Nvidia is going to be facing tougher comparisons moving forward, where we will likely see the rate of acceleration in Data Center revenue peak. However, given the competitive positioning of Nvidia as LLMs become prevalent in daily workloads around the globe, I believe that it should be able to grow its revenue as per consensus estimates up until FY27 as it rolls out its Blackwell chips with faster training and inference speed, where demand is estimated to outpace supply into the next year, thus enabling its customers to build their AI factories. This would translate to Nvidia generating approximately $180B in revenue by FY27.

In terms of profitability, management has projected gross margins to be in the mid-70% range, with operating expenses growing in the low-40% range. This would translate to an operating income of approximately $74.6B, assuming revenue grows in line with the consensus estimate of 98% to $120B. Assuming that operating income continues to grow in line with revenue until FY27, that would translate to Nvidia generating a total operating income of $111.8B, which is equivalent to a present value of $92B when discounted at 10%.
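The projection above can be sketched in a few lines. I am assuming here that "operating income grows in line with revenue" means a constant operating margin, and that the present value discounts the FY27 figure back two years at 10%; neither assumption is spelled out in the text, but together they land within rounding of the $111.8B and $92B figures. The revenue inputs are the rounded $120B and $180B consensus values quoted in this article.

```python
# Rounded inputs from the text (USD billions).
base_revenue = 120.0           # consensus revenue after 98% growth
base_operating_income = 74.6   # operating income implied by management's margin guide
fy27_revenue = 180.0           # approximate consensus revenue for FY27
discount_rate = 0.10
years_to_discount = 2          # assumption: FY27 discounted back two years

# Assumption: constant operating margin, so operating income scales with revenue.
operating_margin = base_operating_income / base_revenue
fy27_operating_income = fy27_revenue * operating_margin

# Standard present value: PV = FV / (1 + r)^n.
present_value = fy27_operating_income / (1.0 + discount_rate) ** years_to_discount

print(f"Operating margin: {operating_margin:.1%}")
print(f"FY27 operating income: ${fy27_operating_income:.1f}B")
print(f"Present value at {discount_rate:.0%}: ${present_value:.1f}B")
```

The small gap versus the article's $111.8B most likely comes from using rounded revenue inputs rather than the exact consensus numbers.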

Taking the S&P 500 as a proxy, where its companies grow their earnings on average by 8% over a 10-year period with a price-to-earnings ratio of 15–18, I believe that Nvidia should trade at no less than twice that multiple, given the growth rate of its earnings. This would translate to a PE ratio of 34, or a price target of $1,212, which represents an upside of 17% from its current levels.

Nvidia’s stock could be subject to short-term volatility, especially given the level of investor optimism and how far the PE of the S&P 500 has extended from its 5- and 10-year averages of 19.1 and 17.7, respectively. Still, I believe that Nvidia is a long-term beneficiary of AI-enabled accelerated computing. We are likely to see growing adoption from enterprises across use cases and workloads such as autonomous driving, industrial digitalization, and more, where they leverage Nvidia’s AI infrastructure for faster training, inference, and networking, coupled with a growing number of nations building out their Sovereign AI capabilities with Nvidia.

Please note that the calculations are based on the current share count. The company has announced a 10-for-1 split of its shares, which will take effect on June 10th. The split will not change the upside shown in this valuation.
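A quick way to see why the split leaves the upside unchanged: both the share price and the price target are divided by the same ratio. The sketch below uses the $1,212 target and 17% upside from the valuation; the pre-split share price is backed out from those two numbers rather than quoted from the article, so treat it as an approximation.

```python
# Figures from the valuation (pre-split, USD per share).
price_target = 1212.0
upside = 0.17
split_ratio = 10  # 10-for-1 split

# Assumption: the current price is implied by the target and the stated upside.
implied_price = price_target / (1.0 + upside)

# A split divides both the price and the target by the same ratio.
post_split_price = implied_price / split_ratio
post_split_target = price_target / split_ratio
post_split_upside = post_split_target / post_split_price - 1.0

print(f"Implied pre-split price: ${implied_price:.2f}")
print(f"Post-split price: ${post_split_price:.2f}")
print(f"Post-split target: ${post_split_target:.2f}")
print(f"Post-split upside: {post_split_upside:.1%}")
```

The ratio of target to price is unchanged by the split, so the percentage upside is the same before and after.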

Conclusions

Nvidia may see some short-term volatility, especially if a macroeconomic slowdown dampens the capex boom in AI infrastructure. Even so, I believe that Nvidia is a long-term beneficiary as companies worldwide transition from general-purpose computing to accelerated computing, where LLMs become a prevalent part of daily workloads with the rise of copilots, digital assistants, and more. Its robust pace of innovation across both hardware and software also lets it better control every piece of its infrastructure. I believe that the stock still has room for expansion at its current levels, and therefore, I will rate it as a “buy”.