Any doubts about Nvidia’s (NASDAQ: NVDA) ability to sustain its red-hot stock market momentum were put to rest last week by the company’s fourth-quarter fiscal 2024 results (for the quarter ended Jan. 28). The semiconductor giant not only handily beat Wall Street’s expectations but also issued strong guidance for the current quarter, suggesting that its artificial intelligence (AI)-fueled growth is here to stay.

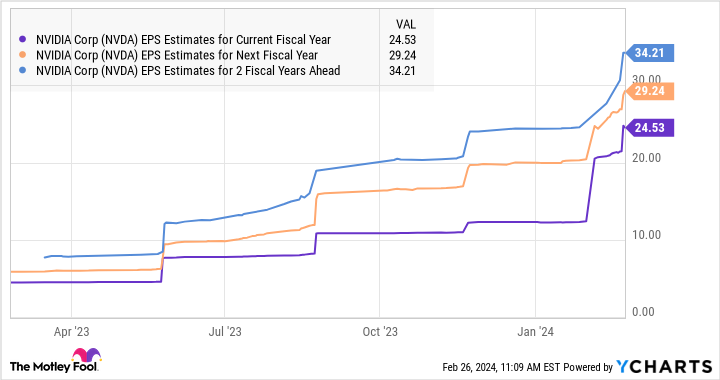

Not surprisingly, Nvidia stock soared after the earnings release. Analysts have also started raising their forecasts for the current and the next two fiscal years, a sign that they expect its outstanding growth to continue.

NVDA EPS Estimates for Current Fiscal Year data by YCharts

Moreover, a key statement from Nvidia CFO Colette Kress on the company’s earnings conference call suggests that it can sustain this terrific growth for much longer: “We estimate in the past year approximately 40% of data center revenue was for AI inference.”

Let’s dive into the details.

AI inferencing could help Nvidia maintain its supremacy in this lucrative market

Nvidia’s graphics processing units (GPUs) shot into the limelight once it became evident that they play a mission-critical role in training large language models (LLMs), which form the backbone of popular generative AI applications such as ChatGPT. Multiple cloud service providers have lined up to get their hands on Nvidia’s GPUs to train their AI models and bring generative AI services to market. As a result, Nvidia controls a whopping 90% share of the AI training chip market.

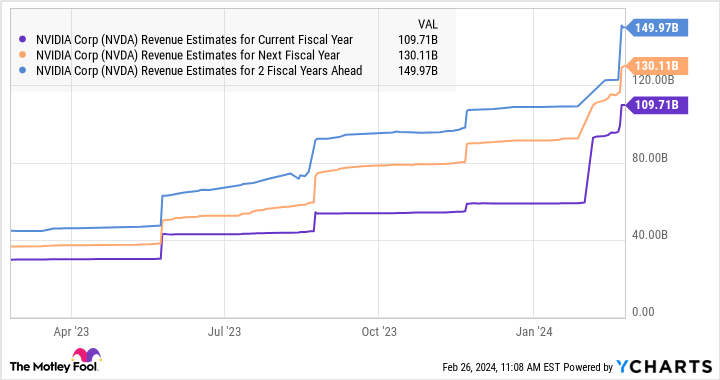

Demand for Nvidia’s flagship H100 AI GPU is so strong that customers are willing to wait 36 to 52 weeks to get their hands on it. Nvidia is shoring up its supply capacity to meet that demand, which explains why the company is expected to keep growing at a terrific pace and more than double its revenue by fiscal 2027 from fiscal 2024’s $60.9 billion.

NVDA Revenue Estimates for Current Fiscal Year data by YCharts

Kress’ comment that 40% of Nvidia’s data center revenue came from selling chips used for AI inference suggests that it could keep up its eye-popping growth for a very long time. That’s because AI inference chips are expected to account for a much larger share of the overall AI chip market than training chips. According to technology news and analysis provider TechSpot, inference is expected to account for 45% of the AI chip market in the future, compared with 15% for AI training chips.

That’s not surprising: once an AI model has been trained on general-purpose computing chips such as the graphics cards Nvidia sells, it is put to work generating results from new data. This process of generating results from an already trained AI model is known as inferencing. Verified Market Research estimates that demand for AI inference chips could jump from an estimated $16 billion in 2023 to almost $91 billion in 2030.
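To put that forecast in perspective, the short Python sketch below converts Verified Market Research’s two endpoints into an implied compound annual growth rate (CAGR). The function name `implied_cagr` is our own, and the calculation assumes smooth compounding over the seven years from 2023 to 2030, which real markets rarely follow.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Verified Market Research endpoints for AI inference chip demand, in billions of dollars.
start_2023 = 16.0
end_2030 = 91.0  # "almost $91 billion", rounded up for illustration

# Seven years of compounding between 2023 and 2030.
cagr = implied_cagr(start_2023, end_2030, years=2030 - 2023)
print(f"Implied annual growth: {cagr:.1%}")
```

On these figures, the inference chip market would need to grow at roughly 28% a year through 2030.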

Based on Kress’ statement, Nvidia sold roughly $19 billion worth of AI inference chips last year (40% of its fiscal 2024 data center revenue of $47.5 billion). That’s higher than Verified Market Research’s $16 billion estimate for the entire market in 2023, which suggests that Nvidia is already the dominant player in AI inference, and the size of the opportunity ahead bodes well for the company’s future.
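The arithmetic behind the $19 billion figure is easy to reproduce. This sketch assumes Kress’ “approximately 40%” applies to the full fiscal 2024 data center total of $47.5 billion, so the result is an estimate rather than a reported number; the variable names are our own.

```python
# Fiscal 2024 data center revenue, in billions of dollars.
data_center_revenue = 47.5
# Kress' approximate share of data center revenue attributed to AI inference.
inference_share = 0.40
# Verified Market Research's 2023 estimate for the whole AI inference chip market, in billions.
vmr_market_2023 = 16.0

# Estimated inference revenue and how far it exceeds the market-wide estimate.
inference_revenue = data_center_revenue * inference_share
print(f"Estimated inference revenue: ${inference_revenue:.1f} billion")
print(f"Exceeds market estimate by: ${inference_revenue - vmr_market_2023:.1f} billion")
```

Keep in mind that Nvidia’s fiscal 2024 ran through late January 2024, while the market estimate covers calendar 2023, so the comparison is approximate.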

If the AI inference chip market does reach almost $91 billion in revenue by 2030, as Verified Market Research estimates, Nvidia’s data center revenue could keep growing nicely through the end of the decade and supercharge its overall business.

What’s more, Nvidia is taking steps to ensure that it remains the leading player in AI inference with a potential move into custom chips, formally known as application-specific integrated circuits (ASICs). These chips are designed for a specific task, and their combination of computing power and low power consumption makes them well suited for AI inference. According to third-party estimates, the market for custom chips used for inferencing could jump from an estimated $30 billion in 2023 to $50 billion in 2025.

In all, it is easy to see why analysts are forecasting outstanding growth for Nvidia.

The valuation makes the stock an enticing buy

Nvidia’s earnings and revenue are set to increase rapidly in the coming years, and the market should reward the stock with handsome gains if the company delivers on that front.

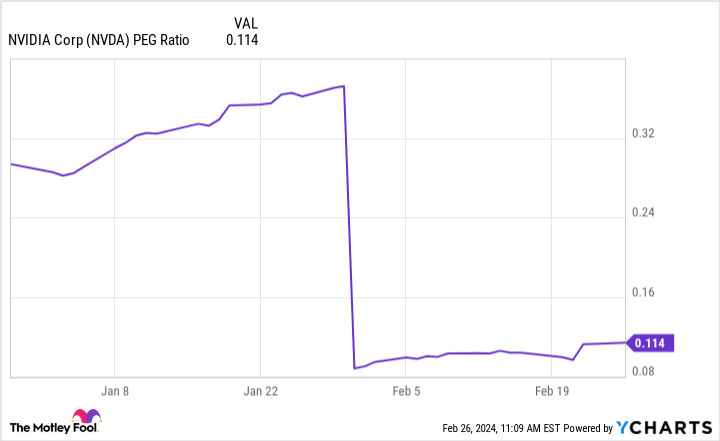

That’s why buying this AI stock right now looks like a no-brainer, especially considering that its forward earnings multiple of 33 is lower than its five-year average forward earnings multiple of 39. Nvidia also looks extremely cheap based on its price/earnings-to-growth ratio (PEG ratio).

The PEG ratio is calculated by dividing a company’s P/E ratio by its expected annual earnings growth rate, expressed as a percentage. As the following chart shows, Nvidia’s PEG ratio is well below 1, the threshold below which a stock is generally considered undervalued.
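To make the definition concrete, here is a minimal Python sketch of the PEG calculation. The forward P/E of 33 is the figure cited above, but the 50% growth rate is a purely hypothetical input chosen for illustration, not an analyst estimate; the function name `peg_ratio` is our own.

```python
def peg_ratio(pe_ratio: float, growth_rate_pct: float) -> float:
    """Divide a P/E ratio by expected annual earnings growth, expressed in percent."""
    if growth_rate_pct <= 0:
        raise ValueError("PEG is not meaningful for zero or negative growth")
    return pe_ratio / growth_rate_pct


forward_pe = 33.0
hypothetical_growth_pct = 50.0  # illustrative assumption, not a published forecast

peg = peg_ratio(forward_pe, hypothetical_growth_pct)
# Apply the rule of thumb that a PEG below 1 may signal undervaluation.
verdict = "below 1 (potentially undervalued)" if peg < 1 else "1 or above"
print(f"PEG = {peg:.2f}, {verdict}")
```

Note that the growth rate is entered as a whole-number percentage (50, not 0.50); using a decimal would inflate the ratio a hundredfold.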

NVDA PEG Ratio data by YCharts

All this means that investors are still getting a good deal on Nvidia despite the stock’s big surge over the past year, and given the moves the company is making to ensure solid growth, they should consider buying this AI stock hand over fist.

Harsh Chauhan has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Nvidia. The Motley Fool has a disclosure policy.