A hot potato: Nvidia has thus far dominated the AI accelerator business within the server and data center market. Now, the company is enhancing its software offerings to deliver an improved AI experience to users of GeForce and other RTX GPUs in desktop and workstation systems.

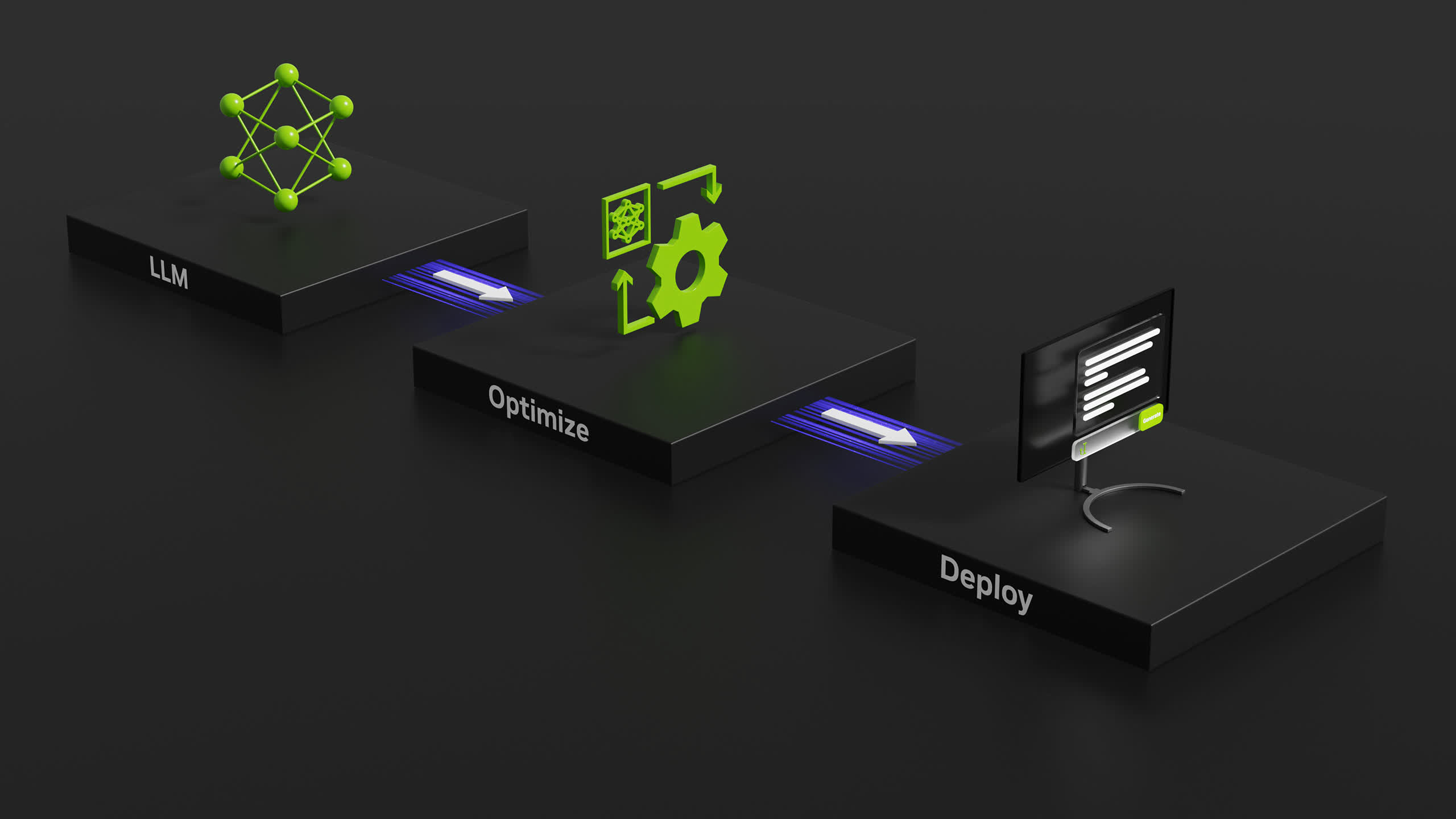

Nvidia will soon release TensorRT-LLM, a new open-source library designed to accelerate generative AI algorithms on GeForce RTX and professional RTX GPUs. The latest graphics chips from the Santa Clara corporation include dedicated AI processors called Tensor Cores, which are now providing native AI hardware acceleration to more than 100 million Windows PCs and workstations.

On an RTX-equipped system, TensorRT-LLM delivers up to 4x faster inference for the latest large language models (LLMs) such as Llama 2 and Code Llama, according to Nvidia. TensorRT-LLM was initially released for data center applications and is now coming to Windows PCs equipped with powerful RTX graphics chips.

Modern LLMs drive productivity and are central to AI software, Nvidia says. With TensorRT-LLM and an RTX GPU, LLMs can run more efficiently, which translates into a noticeably better user experience. Chatbots and code assistants, for example, can produce multiple unique auto-complete results simultaneously, letting users pick the best response from the output.

The new open-source library is also useful when integrating an LLM with other technologies, Nvidia adds, particularly in retrieval-augmented generation (RAG) scenarios where an LLM is paired with a vector library or vector database. RAG solutions let an LLM base its responses on a specific dataset (such as user emails or website articles), producing more targeted and relevant answers.
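To make the retrieval step concrete, here is a minimal sketch of the RAG idea in plain Python. It is not Nvidia's demo and does not use TensorRT-LLM: the word-count "embedding", the function names, and the sample documents are all assumptions made for illustration, and the final call to an LLM is left out.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy embedding (assumption): lowercase word counts.
    A real RAG system would use a learned embedding model instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, k=1):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=1):
    """Combine the retrieved context with the question.
    The resulting string is what would be passed to the LLM."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# Hypothetical dataset standing in for "user emails or website articles".
DOCUMENTS = [
    "RTX Video Super Resolution 1.5 ships in GeForce driver 545.84.",
    "TensorRT-LLM accelerates Llama 2 and Code Llama on RTX GPUs.",
    "Stable Diffusion image generation can be accelerated with TensorRT.",
]

if __name__ == "__main__":
    print(build_prompt("Which driver includes Video Super Resolution?", DOCUMENTS))
```

The vector library or database mentioned above plays the role of `retrieve` here: it stores the embeddings and returns the closest matches, so the model answers from the supplied dataset rather than from its training data alone.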

Nvidia says TensorRT-LLM will soon be available for download from the Nvidia Developer website. The company already provides optimized TensorRT models, along with a RAG demo that uses GeForce news as its dataset, on ngc.nvidia.com and GitHub.

While TensorRT is primarily designed for generative AI professionals and developers, Nvidia is also working on additional AI-based improvements for traditional GeForce RTX customers. TensorRT can now accelerate high-quality image generation using Stable Diffusion, thanks to features like layer fusion, precision calibration, and kernel auto-tuning.
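As a rough illustration of what layer fusion means, the sketch below folds a per-channel scale-and-shift step into the linear layer that precedes it, so that one operation replaces two. This is a generic, simplified example in plain Python with hypothetical function names; it does not use TensorRT and is not a description of how TensorRT implements fusion internally.

```python
import math

def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def scale_shift(y, scale, shift):
    """Per-channel scale and shift, as applied by a normalization layer at inference time."""
    return [s * yi + t for yi, s, t in zip(y, scale, shift)]

def fuse(weights, bias, scale, shift):
    """Fold the scale-and-shift into the linear layer's parameters.
    s * (w.x + b) + t  ==  (s * w).x + (s * b + t)"""
    fused_w = [[s * w for w in row] for row, s in zip(weights, scale)]
    fused_b = [s * b + t for b, s, t in zip(bias, scale, shift)]
    return fused_w, fused_b

# Illustrative values only.
x = [1.0, 2.0]
weights = [[0.5, -1.0], [2.0, 0.25]]
bias = [0.1, -0.2]
scale = [2.0, 0.5]
shift = [1.0, -1.0]

two_steps = scale_shift(linear(x, weights, bias), scale, shift)
one_step = linear(x, *fuse(weights, bias, scale, shift))

if __name__ == "__main__":
    print(two_steps, one_step)
```

Both paths produce the same numbers up to floating-point rounding, but the fused version makes a single pass over the data, which is the kind of saving an inference optimizer looks for.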

In addition, Tensor Cores within RTX GPUs are being used to improve poor-quality internet video streams. RTX Video Super Resolution version 1.5, included in the latest GeForce graphics driver release (version 545.84), improves video quality and reduces artifacts in content played at native resolution, thanks to advanced “AI pixel processing” technology.