Attackers are weaponizing AI to misdirect elections, defraud currency exchanges and nations of millions, and attack critical infrastructure.

These adversaries include nation-state attackers and cybercrime gangs that rely on AI to create and launch increasingly sophisticated identity attacks to finance their operations.

Weaponized AI attacks on identities are growing

Attackers’ generative AI tradecraft for identity-based attacks ranges from phishing and social engineering to the takeover of passwords and privileged access credentials. It extends to synthetic identity fraud attacks aimed at financial institutions, retailers and e-commerce merchants worldwide.

With identity theft being their revenue lifeline, nation-state attackers are doubling down on AI to scale their efforts. That’s making synthetic identity fraud one of the fastest-growing types of fraud, posting a 14.2% year-over-year increase.

Financial institutions face $3.1 billion in exposure to suspected synthetic identity fraud for U.S. auto loans, bank credit cards, retail credit cards and unsecured personal loans, the highest level ever. TransUnion found suspected digital fraud in nearly 14% of all newly created global digital accounts last year. Retail, travel, leisure and video games are the hardest-hit industries.

Deepfakes are the cutting edge of AI-driven identity attacks. There was an estimated 3,000% increase in the use of deepfakes last year alone. Deepfake incidents are expected to increase by 50 to 60% in 2024, reaching 140,000-150,000 cases globally.
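The projection can be sanity-checked by backing out the prior-year count it implies. This is simple arithmetic on the figures above, not a separately reported number: dividing the projected total by one plus the expected growth rate $g$ gives the baseline, and pairing the extremes of each range gives its bounds.

$$
N_{\text{2023}} = \frac{N_{\text{2024}}}{1+g}, \qquad
\frac{140{,}000}{1.60} = 87{,}500 \;\le\; N_{\text{2023}} \;\le\; \frac{150{,}000}{1.50} = 100{,}000
$$

In other words, the forecast implies roughly 87,500 to 100,000 deepfake incidents last year.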

Last year, deepfakes were involved in nearly 20% of synthetic identity fraud cases, and they are the fastest-growing category of weaponized AI. Attackers are relentless in improving their tradecraft, capitalizing on the latest AI apps, video editing and audio techniques. Deepfake-related identity fraud attempts are projected to reach 50,000 this year.

Deepfakes have become so commonplace that the Department of Homeland Security has issued the guide Increasing Threats of Deepfake Identities.

Most enterprises aren’t ready for AI-driven identity attacks

Today, one in three organizations does not have a documented strategy to address gen AI risks, according to Ivanti’s 2024 State of Cybersecurity Report. CISOs and IT leaders admit they’re not ready for AI-driven identity attacks.

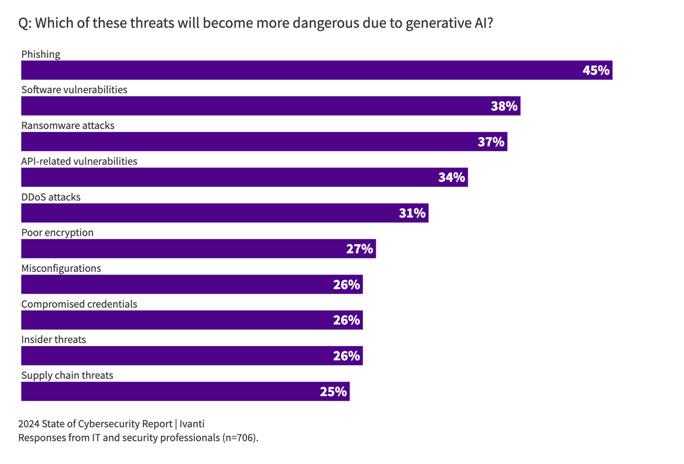

Ivanti’s report found that 74% of organizations are already seeing the impact of AI-powered threats, and 89% believe that AI-powered threats are just getting started. Sixty percent of the CISOs, CIOs and IT leaders interviewed fear their organizations are not prepared to defend against AI-powered threats and attacks. Phishing, software vulnerabilities, ransomware attacks and API-related vulnerabilities are the four threats CISOs, CIOs and IT leaders most expect to become more dangerous as attackers fine-tune their tradecraft with gen AI.

Ping Identity’s recent report, Fighting The Next Major Digital Threat: AI and Identity Fraud Protection Take Priority, reflects how unprepared most organizations are for the next wave of AI-powered identity attacks. “AI-powered cyber threats and identity attacks are about to explode, with over 40% of businesses saying they expect fraud to increase significantly next year,” writes Jamie Smith, one of the report’s authors and founder of Customer Futures. Ping Identity’s report found that 95% of organizations are expanding their budgets to fight AI-based threats.

Despite the fast growth of AI-based identity attacks, organizations aren’t taking advantage of the latest technologies to counter them. Just under half (49%) are using one-time passcode authentication, and 46% rely on digital credential issuance and verification. Just 45% are adopting two-factor or multifactor authentication (MFA), even though CISOs have told VentureBeat that MFA is a quick win, especially when it is part of a broader zero-trust framework strategy. Further, 44% of security leaders are using biometrics or behavioral biometrics.
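One-time passcodes are among the simplest of these controls to deploy. As an illustration only, here is a minimal sketch of a time-based one-time password (TOTP) generator and verifier following RFC 6238, built on the HOTP construction from RFC 4226 and using only Python’s standard library. The function names, the 30-second step and the one-step drift window are illustrative choices, not any vendor’s implementation.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    # The counter is encoded as an 8-byte big-endian integer.
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = digest[-1] & 0x0F
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over a time-step counter."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate modest clock drift."""
    now = time.time() if at is None else at
    for drift in range(-window, window + 1):
        expected = totp(secret, now + drift * step, step, digits)
        # Constant-time comparison avoids leaking partial matches via timing.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

A server would store one shared secret per user, and the user’s authenticator app computes the same code from that secret and the current time. Because a code expires within seconds, a phished passcode is far less useful to an attacker than a phished password, although real-time relay phishing can still defeat it, which is one reason the article points toward passwordless approaches.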

The goal: Fight back against identity fraud while improving user experience

The challenge for many organizations is hardening their identity and access management (IAM), privileged access management (PAM) and authentication systems without negatively impacting user experience. CISOs have long told VentureBeat that the best cybersecurity safeguards are invisible to users.

Momentum is shifting in favor of replacing passwords with authentication technologies that resist AI-driven attacks, making it more difficult for attackers to steal credentials. Gartner predicts that by next year, 50% of the workforce and 20% of customer authentication transactions will be passwordless. APIs, biometrics and passwordless technologies are all considered strong replacements for traditional passwords.

Leading passwordless authentication providers include Microsoft Azure Active Directory (Azure AD), OneLogin Workforce Identity, Thales SafeNet Trusted Access and Windows Hello for Business. Ivanti’s Zero Sign-On (ZSO) is another option: it uses the company’s unified endpoint management (UEM) platform to combine passwordless authentication with support for customers’ zero-trust frameworks, streamlining user experiences. Ivanti’s use of the FIDO2 protocols eliminates passwords and supports biometrics such as Apple’s Face ID, making credentials harder to compromise through AI-based identity attacks. Passwordless authentication and mobile integration are helping stop AI-driven identity threats.

Application programming interfaces (APIs) that consolidate omnichannel verification traffic into a single API and streamline transactions are also helping stop AI-based identity attacks and reduce fraud. Telesign began working with customers early on to consolidate verification channels through AI-enabled APIs. Its Verify API evolved from a customer-driven idea into a product within a matter of months. The omnichannel API integrates seven leading user verification channels into one unified API: SMS, silent verification, push, email, WhatsApp, Viber and RCS (rich communication services).
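To make the idea of consolidating channels behind one API more concrete, here is a hypothetical sketch of a single `verify` entry point that routes a verification request to the first channel a user can receive on and falls back down a preference list. The seven channels come from the article; the `verify` function, the `VerificationResult` type, the channel ordering and the fallback logic are all assumptions for illustration and do not describe Telesign’s actual Verify API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Optional, Sequence, Set


class Channel(Enum):
    """The seven verification channels named in the article."""
    SMS = "sms"
    SILENT = "silent_verification"
    PUSH = "push"
    EMAIL = "email"
    WHATSAPP = "whatsapp"
    VIBER = "viber"
    RCS = "rcs"


# Hypothetical preference order: lowest-friction channels are tried first.
DEFAULT_ORDER: Sequence[Channel] = (
    Channel.SILENT, Channel.PUSH, Channel.RCS, Channel.WHATSAPP,
    Channel.VIBER, Channel.SMS, Channel.EMAIL,
)

# A sender delivers a challenge to a user and reports whether delivery succeeded.
Sender = Callable[[str], bool]


@dataclass
class VerificationResult:
    channel: Optional[Channel]  # channel that delivered, or None if all failed
    attempted: List[Channel]    # every channel tried, in order


def verify(user_id: str, reachable: Set[Channel], senders: Dict[Channel, Sender],
           order: Sequence[Channel] = DEFAULT_ORDER) -> VerificationResult:
    """Single entry point (hypothetical): try each reachable channel until one delivers."""
    attempted: List[Channel] = []
    for channel in order:
        if channel not in reachable or channel not in senders:
            continue  # user can't receive here, or no integration is configured
        attempted.append(channel)
        if senders[channel](user_id):
            return VerificationResult(channel, attempted)
    return VerificationResult(None, attempted)
```

Keeping channel selection behind one function means adding, removing or reordering channels becomes a configuration change in one place rather than a separate integration for each channel, which is the kind of simplification the article attributes to a single API.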

Telesign CEO Christophe Van de Weyer told VentureBeat during a recent interview that “with the growing threat of synthetic identity fraud, businesses look to onboarding as the most effective place to stop fraud by ensuring their customers are who they say they are during registration. More than ever, it’s become crucial for companies to protect the identities, credentials and PII of their customers. Telesign’s onboarding model delivers a risk assessment score to help businesses block, flag and detect synthetic identities while introducing the appropriate amount of user friction.”
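Van de Weyer describes a risk assessment score that helps businesses block or flag suspected synthetic identities at onboarding. A hypothetical sketch of that final step, mapping a score to an onboarding decision, could look like the following. The 0-1000 scale, the direction (higher means riskier) and both thresholds are assumptions chosen for illustration, not Telesign’s published values; in practice they would be tuned to each business’s fraud tolerance and the amount of user friction it is willing to add.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    FLAG = "flag"    # add friction, e.g. step-up verification or manual review
    BLOCK = "block"


# Hypothetical thresholds on an assumed 0-1000 scale where higher means riskier.
FLAG_AT = 400
BLOCK_AT = 800


def onboarding_decision(risk_score: int, flag_at: int = FLAG_AT,
                        block_at: int = BLOCK_AT) -> Decision:
    """Map a (hypothetical) sign-up risk score to allow / flag / block."""
    if not 0 <= risk_score <= 1000:
        raise ValueError("risk_score must be between 0 and 1000")
    if not flag_at < block_at:
        raise ValueError("flag_at must be lower than block_at")
    if risk_score >= block_at:
        return Decision.BLOCK
    if risk_score >= flag_at:
        return Decision.FLAG
    return Decision.ALLOW
```

The middle "flag" band is where the trade-off the article describes plays out: instead of rejecting borderline sign-ups outright, a business can add friction only for those users and leave low-risk customers untouched.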

Telesign’s Verify API integrates multiple verification channels using AI and machine learning (ML) to improve security and reduce fraud. This approach improves customer identity protection across platforms by detecting and assessing fraud in real time.

Van de Weyer added, “Verifying customers is so important because one thing that many kinds of fraud have in common is that they can often be stopped at the ‘front door,’ so to speak. Our recently introduced Verify API solution takes an omnichannel approach to empower every company to seamlessly select the newest, most secure and customer-friendly verification channels for their specific use cases. With a single integration, Verify API enables businesses to effortlessly integrate seven commonly preferred authentication channels with minimal development resources to make it easier to verify end-users and to stabilize the price for verification.”

Whoever controls a company’s identities owns the company

Trafficking in stolen credentials and creating synthetic identities using AI are just two of the many ways nation-state and cybercrime organizations turn stolen identities into cash to fund their operations. With nation-state attackers turning to deepfakes to achieve their ideological and financial goals, the threat landscape organizations face is changing fast. Organizations need to identify the gaps and weaknesses in how they manage identities, or they put their teams at risk of losing the AI war.