
Today’s large language models (LLMs) are increasingly sophisticated, but the data they draw on to generate responses often doesn’t extend beyond their training, meaning it can be weeks or even months out of date.

This has made retrieval-augmented generation (RAG) essential for modern enterprises, as it enables up-to-date, company-specific outputs. Still, retrieval presents its own challenges in accuracy, scalability and security, because enterprise content is intricate and complex.
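To make the RAG idea concrete, here is a minimal Python sketch of the retrieve-then-prompt pattern. It is not Pryon’s implementation: simple word overlap stands in for a real retriever, and the `Passage`, `retrieve` and `build_prompt` names and the sample documents are hypothetical. The point is that current, company-specific text is fetched at query time and placed in the prompt, so the model is not limited to what it saw in training.

```python
import re
from dataclasses import dataclass

@dataclass
class Passage:
    source: str  # hypothetical document identifier, kept for attribution
    text: str

def tokens(text: str) -> set[str]:
    """Lowercase word tokens; a crude stand-in for a real retriever's scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, corpus: list[Passage], k: int = 2) -> list[Passage]:
    """Rank passages by word overlap with the question; keep up to k that overlap at all."""
    q = tokens(question)
    ranked = sorted(corpus, key=lambda p: len(q & tokens(p.text)), reverse=True)
    return [p for p in ranked[:k] if q & tokens(p.text)]

def build_prompt(question: str, passages: list[Passage]) -> str:
    """Put retrieved company text ahead of the question so the model is not limited to its training data."""
    context = "\n".join(f"[{p.source}] {p.text}" for p in passages)
    return f"Answer using only the context below.\n{context}\nQuestion: {question}"

corpus = [
    Passage("hr-policy-2024.pdf", "Remote work is allowed three days per week."),
    Passage("cafeteria.txt", "The cafeteria opens at 8am."),
]
question = "How many days of remote work are allowed?"
print(build_prompt(question, retrieve(question, corpus)))
```

In a production system the prompt would then be sent to an LLM; replacing word overlap with embeddings changes retrieval quality, not the overall shape of the pattern.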

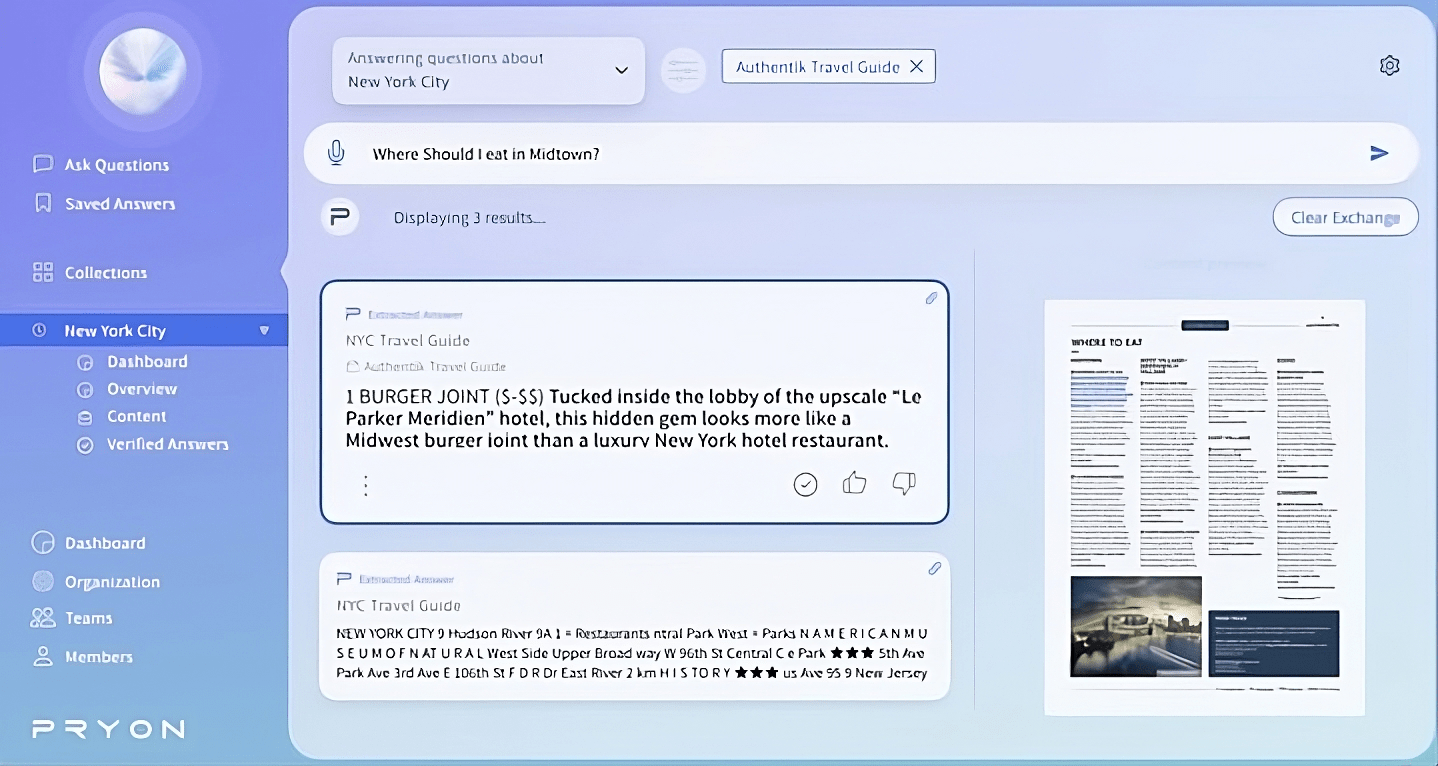

RAG platform Pryon says it has overcome many of these challenges and is today extending its capabilities with the Pryon Retrieval Engine. The platform securely extracts information from complex, sprawling content to help organizations get the most out of today’s sophisticated AI tools.

“The reliability of generated content is not there, bias in the results is a big problem,” Chris Mahl, Pryon’s president, COO and board member, told VentureBeat. “Information in some of these models is frozen in time. So, while I may be asking a question that we think is really smart, the answer is grounded in history, not in current events. Which is a big problem.”

Pulling together a ‘collection’ of company-specific data

Current ingestion methods often struggle to handle complex document-based content, and accuracy is difficult to maintain at scale, Mahl explained. Further compounding this, content is scattered across numerous systems and file types.

Pryon says its Retrieval Engine addresses these issues by blending millions of pieces of enterprise data into a single knowledge base, which the company calls a “collection.”

The system uses semantic neural networks, document analysis and proprietary optical character recognition (OCR) to pull text from images, graphics, tables, schematics and even handwritten notes. Video segmentation identifies and labels important components; the system also normalizes and filters content to remove unnecessary objects, and it uses visual semantic segmentation to group documents.
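The “collection” idea can be illustrated with a small Python sketch that merges already-extracted text from several systems into one store, splits it into fixed-size word windows and drops exact duplicates. This is a toy under stated assumptions: OCR, layout analysis and segmentation are out of scope (the text is assumed to be extracted already), and `Collection`, `Chunk` and the default chunk size are hypothetical names and parameters, not Pryon’s.

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    source: str  # where the text came from (file path, URL, ticket ID, ...)
    kind: str    # original content type, e.g. "pdf", "html", "ocr"
    text: str

@dataclass
class Collection:
    """Hypothetical single knowledge base that merges content from many sources."""
    chunks: list[Chunk] = field(default_factory=list)
    _seen: set[str] = field(default_factory=set)

    def add(self, source: str, kind: str, text: str, size: int = 40) -> int:
        """Normalize whitespace, split into windows of `size` words, skip case-insensitive duplicates."""
        words = text.split()
        added = 0
        for start in range(0, len(words), size):
            piece = " ".join(words[start:start + size])
            key = piece.lower()
            if key in self._seen:
                continue  # the same text already arrived from another system
            self._seen.add(key)
            self.chunks.append(Chunk(source, kind, piece))
            added += 1
        return added

c = Collection()
c.add("manual.pdf", "pdf", "Reset the pump before maintenance.")
c.add("wiki/pump", "html", "Reset  the pump   before maintenance.")  # duplicate, skipped
print(len(c.chunks))
```

Keeping `source` and `kind` on every chunk is what later makes it possible to cite where an answer came from.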

Users can ask questions in whatever form they want and receive an answer “in milliseconds,” said Mahl. He prefers to call this layered information a “knowledge fabric” rather than “retrieval,” as the latter term isn’t “intellectually strong enough” to capture its complexity.

To help maintain security, Pryon integrates access control lists (ACLs), which specify which users or systems have access to a given resource. The system is also portable and can be deployed on-premises, in public and private clouds and in air-gapped environments, said Mahl.
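A common way to enforce ACLs in a retrieval system is to filter candidate documents by the requesting user’s groups before any matching or generation happens. The Python sketch below shows that pattern under simple assumptions (group-based ACLs, substring matching); `Doc`, `visible_docs` and `search` are hypothetical names, and the article does not say how Pryon applies its ACLs internally.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # the document's access control list (hypothetical group model)

def visible_docs(docs: list[Doc], user_groups: set[str]) -> list[Doc]:
    """Keep only documents whose ACL shares at least one group with the user."""
    return [d for d in docs if d.allowed_groups & user_groups]

def search(query: str, docs: list[Doc], user_groups: set[str]) -> list[str]:
    """Apply the ACL filter first, so restricted text never reaches the matching or answer step."""
    q = query.lower()
    return [d.doc_id for d in visible_docs(docs, user_groups) if q in d.text.lower()]

docs = [
    Doc("pay-bands", "Salary bands for 2024", frozenset({"hr"})),
    Doc("handbook", "Salary is paid monthly", frozenset({"all"})),
]
print(search("salary", docs, {"all"}))        # an employee outside HR
print(search("salary", docs, {"all", "hr"}))  # an HR staff member
```

Filtering before retrieval, rather than redacting afterward, keeps restricted text out of the model’s context entirely.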

Pre-built components allow organizations to implement production-ready gen AI applications in as little as two weeks, and a no-code interface allows admins to update content in real time.

The engine is API-enabled to support custom deployments and is also available as a full end-to-end platform. Further, it integrates with a number of systems, including Microsoft SharePoint, Confluence, AWS S3, Google Drive, Zendesk, ServiceNow and Salesforce.
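Integrations like these are often built around a common connector interface, so each source system only has to yield documents in a shared shape. The Python sketch below assumes such a design; `Connector`, `InMemoryConnector` and `sync` are hypothetical, and the in-memory class stands in for real SharePoint, S3 or Salesforce clients, whose APIs are not shown here.

```python
from typing import Iterator, Protocol

class Connector(Protocol):
    """Hypothetical interface each source system (wiki, ticket desk, object store, ...) implements."""
    name: str

    def fetch(self) -> Iterator[tuple[str, str]]:
        """Yield (document_id, text) pairs from the source system."""
        ...

class InMemoryConnector:
    """Stand-in for a real integration; a production connector would call the source system's own API."""

    def __init__(self, name: str, docs: dict[str, str]):
        self.name = name
        self.docs = docs

    def fetch(self) -> Iterator[tuple[str, str]]:
        yield from self.docs.items()

def sync(connectors: list[Connector]) -> dict[str, str]:
    """Pull every source into one index keyed by 'source:document_id' so answers stay attributable."""
    index: dict[str, str] = {}
    for conn in connectors:
        for doc_id, text in conn.fetch():
            index[f"{conn.name}:{doc_id}"] = text
    return index

print(sync([
    InMemoryConnector("wiki", {"p1": "Setup guide"}),
    InMemoryConnector("tickets", {"t9": "Login fails after update"}),
]))
```

Because `Connector` is a structural `Protocol`, any class with a `name` and a matching `fetch` method fits, without inheriting from a shared base class.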

“All this information around a corporation that’s proprietary, in different formats — complicated schematics, reams of reports, it doesn’t matter — we lift it all up and put it into a model safely and securely that users can then interact with conversationally in milliseconds and get accurate and attributable answers,” said Mahl.

From gaming to insurance underwriting

One customer used Pryon on-premises to bring together 400,000 technical documents; 5,000 users, with 500 concurrent users per second, accessed them to get accurate answers in milliseconds, Mahl explained.

In another instance, Pryon supported a consumer gaming technology company with millions of customers who constantly ask sophisticated technical questions through a support site. Now, those customers can visit a portal and ask natural-language questions of tens of thousands of knowledge articles. That collection is refreshed many times a day and has been live 24/7 for the last two years.

Pryon also supported an engineering company with millions of documents that engineers need to maintain critical systems. The retrieval engine provides not only answers but also near-instant access to the original documents.

In other cases, insurance companies have incorporated Pryon to support their underwriting cycles, and companies dealing with sophisticated products have used the engine to ensure their salespeople have knowledge at the point of sale.

“The retrieval data layer in a company, the RAG-ready data layer, is the most important asset any company will have,” said Mahl. “Therefore, having a secure, scalable infrastructure that protects, manages and oversees that layer of information is one of the most important pieces of IP that you can manage.”

Data in modern enterprise is ‘fractured’

Data is the “original value,” but how well organizations understand it is “very, very low,” said Mahl. Data is “potentially everywhere” — video, long text, emails, finance documents and even microfiche.

Organizations have an “amazing amount of complex, rich, high-value information” that, arguably, can accelerate product development. Yet it’s often impossible to locate the most relevant data for a given project.

For instance, a chip engineering company may have four different research departments with a million documents in each, not to mention scientists repeating experiments all the time.

“It’s been an interesting journey for us to work with these prestigious, highly curated firms and realize that much of the information they have either internally or externally is just so fractured,” said Mahl. “Generative is now here, and it turns out that the biggest problem with generative is data inside companies.”

Mahl underscored the security element, saying that there is much excitement around gen AI, but also that “the privacy topics are profound; the data security concerns are profound.” Gen AI is expected to bring up to $4.4 trillion in economic benefits annually across the globe, but organizations have concerns about releasing their proprietary data to public LLMs and the cloud.

“I would drive home security, security, security,” said Mahl. “That level of security control is one of our true norths.”

Not just delivering answers; understanding the question

For humans to answer questions, we must first comprehend what’s being asked of us — and AI should be able to do the same, said Mahl.

Pryon’s systems are designed to understand the complexity of a question, “from the headers to the footers to the design layout to the subjects.” The engine uses query expansion, out-of-domain detection and query embedding to interpret natural-language queries, then applies three proprietary models to identify and rank matching content.
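The query-side steps named here can be sketched as a small pipeline: expand the query with synonyms, embed it, reject it as out-of-domain if nothing in the collection scores above a threshold, and otherwise rank passages. In this Python sketch, a bag-of-words vector with cosine similarity stands in for a learned embedding, and `SYNONYMS`, `min_score` and the function names are hypothetical; it does not reproduce Pryon’s three proprietary ranking models.

```python
import math
import re
from collections import Counter
from typing import Optional

SYNONYMS = {"revenue": ["sales"], "fix": ["repair"]}  # hypothetical expansion table

def embed(text: str) -> Counter:
    """Bag-of-words vector; a toy stand-in for a learned query/passage embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def expand(query: str) -> str:
    """Query expansion: append known synonyms so differently worded passages can still match."""
    extra = [s for w in re.findall(r"[a-z0-9]+", query.lower()) for s in SYNONYMS.get(w, [])]
    return " ".join([query, *extra])

def answer_candidates(query: str, passages: dict[str, str], min_score: float = 0.18) -> Optional[list[str]]:
    """Expand, embed, reject out-of-domain queries, then rank passage IDs by similarity."""
    q = embed(expand(query))
    scores = sorted(((cosine(q, embed(t)), pid) for pid, t in passages.items()), reverse=True)
    if not scores or scores[0][0] < min_score:
        return None  # out-of-domain: nothing in the collection is close enough
    return [pid for score, pid in scores if score >= min_score]

passages = {
    "q3": "Quarterly sales grew 12 percent",
    "hr": "Vacation requests go through the HR portal",
}
print(answer_candidates("How did revenue change?", passages))
print(answer_candidates("What is the weather on Mars?", passages))
```

Without the expansion step, the revenue question shares no words with the sales passage and would be rejected as out-of-domain, which is why expansion runs before scoring.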

Mahl pointed out that different people will ask questions about the same subject differently. For instance, ‘how much higher is revenue this year than last year?’ compared to ‘what was the revenue last year?’

“Once you have intelligence organized, how do you prepare it to have an answer that responds to the same question asked in 14 degrees of itself?” Mahl posited. This requires approaching questions in reverse: starting from the content and “bouqueting out all the possible questions” it could answer.
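One way to read “bouqueting out all the possible questions” is the technique sometimes called document expansion or doc2query: generate likely questions for each piece of content ahead of time, then match an incoming question against them. The Python sketch below is an illustration of that idea, not Pryon’s method; questions come from hypothetical hand-written templates rather than a model, and Jaccard word overlap does the matching.

```python
import re

def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def generate_questions(metric: str, periods: list[str]) -> list[str]:
    """Hypothetical template-based generator: enumerate phrasings a reader might use for one fact."""
    templates = ["what was {m} {p}", "how much {m} did we have {p}", "{m} for {p}"]
    return [t.format(m=metric, p=p) for t in templates for p in periods]

def build_index(passages: dict[str, tuple[str, list[str]]]) -> list[tuple[set[str], str]]:
    """Map each generated question (as a word set) back to the passage ID that answers it."""
    return [(words(q), pid) for pid, (metric, periods) in passages.items()
            for q in generate_questions(metric, periods)]

def lookup(question: str, index: list[tuple[set[str], str]]) -> str:
    """Return the passage whose generated question has the highest Jaccard overlap with the user's."""
    q = words(question)
    best = max(index, key=lambda item: len(q & item[0]) / len(q | item[0]))
    return best[1]

index = build_index({
    "fy23": ("revenue", ["last year", "in 2023"]),
    "headcount": ("headcount", ["last year"]),
})
print(lookup("What was the revenue last year?", index))
print(lookup("How much revenue did we have in 2023", index))
```

Doing the expansion at indexing time moves the cost of anticipating phrasings out of the query path, which matters when answers are expected in milliseconds.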

LLMs that attribute their answers

Attribution is also critical to ensure that models aren’t “hallucinating,” or offering up incorrect information.

“We all use ChatGPT and other new models,” said Mahl. “I’ve asked some pretty cool questions, but I don’t really know where the answer came from.”

He explained that the only answers Pryon generates are from the “information that is the canonical representation of truth.”

This means that, for instance, users can ask multi-part questions, and answers will be pulled from different sources with references included.
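The attribution behavior described here can be sketched as follows: split a multi-part question into parts, answer each part only from source text, attach the source ID, and decline when nothing supports a part. This Python sketch is illustrative only; splitting on “?” and “;” and scoring by shared words are simplifying assumptions, and the function names and sample sources are hypothetical.

```python
import re

def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def split_parts(question: str) -> list[str]:
    """Naively split a multi-part question on '?' and ';'."""
    return [p.strip() for p in re.split(r"[?;]", question) if p.strip()]

def answer_with_citations(question: str, sources: dict[str, str]) -> list[str]:
    """Answer each part only from source text and tag it with the source it came from."""
    answers = []
    for part in split_parts(question):
        q = tokens(part)
        sid = max(sources, key=lambda s: len(q & tokens(sources[s])))
        if q & tokens(sources[sid]):
            answers.append(f"{sources[sid]} [{sid}]")
        else:
            # No source supports this part, so decline instead of generating an unsupported answer.
            answers.append(f"No supported answer for: {part}")
    return answers

sources = {
    "warranty.pdf": "The warranty lasts two years.",
    "returns.html": "Returns are accepted within 30 days.",
}
for line in answer_with_citations("How long is the warranty? How many days do I have for returns?", sources):
    print(line)
```

Returning the source text itself, rather than a free-form paraphrase, is the simplest way to keep every answer traceable to a document.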

“Pryon’s platform is designed to be the advocate and the scaling partner for CIOs, CTOs and technicians,” said Mahl, “to give them the control of their unstructured and semi-structured information that puts it in its most performance state.”