WTF?! If you’re a gamer lending your GPU power to a cloud computing company called Salad, there’s a good chance it’s being used to create AI-generated adult content in exchange for video game currencies and items. Yep, you read that right.

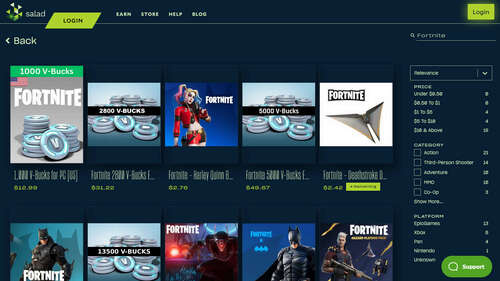

According to a report from 404 Media, Salad has found a clever way to massively scale up AI content generation, including images, videos, and text, by outsourcing the heavy computational work to the powerful GPUs found in gaming rigs. Gamers who sign up to “rent” out their idle GPUs get paid in digital goods like Fortnite cosmetics, Roblox credit, Minecraft skins, and other gaming-related rewards through the Salad store.

It’s easy money – powerful graphics cards are well suited to running AI models like Stable Diffusion to generate media. But here’s the kicker: part of the AI content Salad is generating using rented GPU power is adult content.

When signing up, users can choose to opt out of generating explicit adult content. However, the company’s Discord mods have reportedly claimed that by default, everyone is opted out, and some countries don’t allow opting in due to local laws. Salad’s website also suggests that users who don’t generate adult content could make less money.

“There might be times where you earn a little less if we have high demand for certain adult content workloads, but we don’t plan to make those companies the core of our business model,” reads an excerpt from their FAQs.

The real shadiness emerges with Salad’s involvement with Civitai, a platform where AI models are shared and images are generated. 404 Media notes that Civitai has previously allowed non-consensual porn generation, including one particularly disturbing incident where AI-generated images that “could be categorized as child pornography” slipped through from another company working with Civitai.

Neither Salad nor Civitai has been transparent about just how prevalent non-consensual and illegal content generation is among the reams of AI porn being cranked out. But given Civitai’s track record and the sheer scale of opaque processing power Salad is leveraging, it’s quite possible that some messed-up stuff is being created, unbeknownst to the gamers whose GPUs are being rented.

It’s a grim situation emblematic of the larger ethical pitfalls emerging in commercial AI development – companies rushing to stake claims and scale without adequate safeguards, transparency, or accountability measures in place.

404 Media speculated that Salad marks all Stable Diffusion image jobs as “adult content” by default because it can’t actually verify what individual users are generating.

So while you may just be hoping to score some free Fortnite goodies by lending your GPU to Salad’s cloud network, there’s a chance your hardware is churning out digital depravity behind the scenes.

Let’s just hope more thoughtful regulation emerges before non-consensual AI porn metastasizes and turns the entire sector into a dumpster fire.