Nvidia unveiled its Omniverse Cloud application programming interfaces (APIs), enabling a leap forward in digital twin software tools.

With industry giants like Ansys, Cadence, and Siemens on board, the APIs are set to power a new wave of digital twin applications that enhance design, simulation, and operation processes, Nvidia announced today at its GTC 2024 event in San Jose, California.

The launch of Omniverse Cloud APIs represents a leap for digital twin technology. By integrating core Omniverse capabilities directly into existing software applications, developers can now streamline workflows and accelerate the creation of digital twins for a wide range of industrial applications. I am moderating a panel on industrial digitalization and digital twins on Tuesday at 4 p.m. at GTC.

“We believe that everything manufactured will have digital twins,” said Jensen Huang, CEO of Nvidia, in a keynote talk today at Nvidia GTC 2024. “Omniverse is the operating system for building and operating physically realistic digital twins. With generative AI and Omniverse, we are digitalizing the heavy industries market, opening up boundless opportunities for creators.”

While the consumer metaverse is making slow progress, the industrial metaverse has moved into high gear. Nvidia has been investing in Omniverse for five years, using the foundation of Universal Scene Description (USD), a file format created by Pixar for universal data exchange for 3D simulations. Nvidia licenses Omniverse to enterprises for $4,500 per year. The new part of this GTC event is the Omniverse Cloud APIs.

“Omniverse is a platform for developing and deploying physically based industrial digitalization applications. It’s the feedback loop for AI to enter the physical world. Omniverse is not a tool. It’s a platform of technologies that supercharge other tools and let them connect to the world’s largest design, CAD and simulation industry ecosystems, such as Siemens, Autodesk, or Adobe,” said Rev Lebaredian, vice president of simulation at Nvidia, in a press briefing and interview with GamesBeat. “Our software partners like Siemens and Ansys use Omniverse to build new tools and services. Our end and industry customers like BMW, Amazon Robotics and Samsung use Omniverse to develop their own tools to connect their industrial processes.”

Both generative AI and digital twins are changing the way companies in multiple industries design, manufacture and operate their products.

Traditionally, companies have relied heavily on physical prototypes and costly modifications to complete large-scale industrial projects and build complex, connected products. That approach is expensive and error-prone, limits innovation and slows time to market, said Lebaredian.

The theory behind the digital twin is to build a factory in digital form first, simulating everything realistically so that the design can be iterated and perfected before anyone has to break ground on the physical factory. Once the factory is built, the digital twin can be used to reconfigure the factory quickly. And with sensors capturing data on the factory’s operation, the designers can modify the digital twin so that the simulation is more accurate. This feedback loop can save the enterprise a lot of money.

Lebaredian pointed out that the world’s “heavy industries” are facing labor shortages, supply constraints and a difficult geopolitical landscape. That’s why they’re racing to become autonomous and software defined.

“They’re creating digital twins to design, simulate, build, operate, and optimize their assets and processes,” he said. “This helps them increase operational efficiencies, and save billions in cost. Once built, these digital twins become the birthplace of robotic systems, and virtual training grounds for AI, like the need for reinforcement learning and human feedback for training large language models. This new era of digital twins needs physics feedback to ensure that AI learned in these virtual worlds can perform in the physical world.”

Omniverse Cloud APIs

The five new Omniverse Cloud APIs offer a comprehensive suite of tools for developers. They include USD Render, which generates fully ray-traced renders of OpenUSD data; USD Write, which allows users to modify and interact with OpenUSD data; USD Query, which enables scene queries and interactive scenarios; USD Notify, which tracks USD changes and provides updates; and Omniverse Channel, which facilitates collaboration across scenes, connecting users, tools, and worlds.
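To make the division of labor among three of those APIs concrete, here is a minimal toy sketch in plain Python. It is not Nvidia's API and does not use OpenUSD; the `ToyScene` class and its `write`, `query` and `subscribe` methods are hypothetical names invented for illustration. It only shows the general pattern the descriptions suggest: one shared scene that clients can modify, search and watch for changes.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical stand-in for a shared scene. This is NOT Nvidia's API
# and NOT OpenUSD; every name here is an assumption for illustration.
Listener = Callable[[str, str, Any], None]


@dataclass
class ToyScene:
    prims: Dict[str, Dict[str, Any]] = field(default_factory=dict)
    listeners: List[Listener] = field(default_factory=list)

    def write(self, path: str, attr: str, value: Any) -> None:
        """Rough analogue of 'USD Write': set an attribute on a scene object."""
        self.prims.setdefault(path, {})[attr] = value
        # Rough analogue of 'USD Notify': tell every subscriber what changed.
        for listener in self.listeners:
            listener(path, attr, value)

    def query(self, prefix: str) -> List[str]:
        """Rough analogue of 'USD Query': list object paths under a prefix."""
        return sorted(p for p in self.prims if p.startswith(prefix))

    def subscribe(self, listener: Listener) -> None:
        """Register a callback that runs on every write."""
        self.listeners.append(listener)


scene = ToyScene()
changes: List[Tuple[str, str, Any]] = []
scene.subscribe(lambda path, attr, value: changes.append((path, attr, value)))

scene.write("/Factory/Robot1", "position", (1.0, 2.0, 0.0))
scene.write("/Factory/Conveyor", "speed", 0.5)
scene.write("/Office/Desk", "color", "gray")

print(scene.query("/Factory"))  # paths under /Factory, sorted
print(len(changes))             # one notification per write
```

In this toy version, a design tool would call `write`, a dashboard would call `query`, and a monitoring service would `subscribe`. The real services presumably add rendering, authentication and multi-user sync on top, none of which is modeled here.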

“Omniverse Cloud APIs let developers integrate core Omniverse technologies for OpenUSD and RTX directly into their existing apps and workflows. This gives ISVs (independent software vendors) all the powers of Omniverse: interoperability across tools, physically based real-time rendering, and the ability to collaborate across users and devices,” Lebaredian said. “The APIs will be available on Microsoft Azure later this year, enabling developers to either sell enterprises or consumers managed services. The world’s largest industrial software companies are adopting these APIs and bringing hundreds of thousands of users. Our first partner is Siemens.”

Companies like Siemens and Ansys are already integrating Omniverse Cloud APIs into their platforms. Siemens, for example, is incorporating the APIs into its Xcelerator Platform, enhancing the capabilities of its cloud-based product lifecycle management software, Teamcenter X.

“Through the Nvidia Omniverse API, Siemens empowers customers with generative AI to make their physics-based digital twins even more immersive,” said Roland Busch, president and CEO of Siemens AG, in a statement. “This will help everybody to design, build, and test next-generation products, manufacturing processes, and factories virtually before they are built in the physical world.”

I interviewed Busch about digital twins at CES 2024 in January.

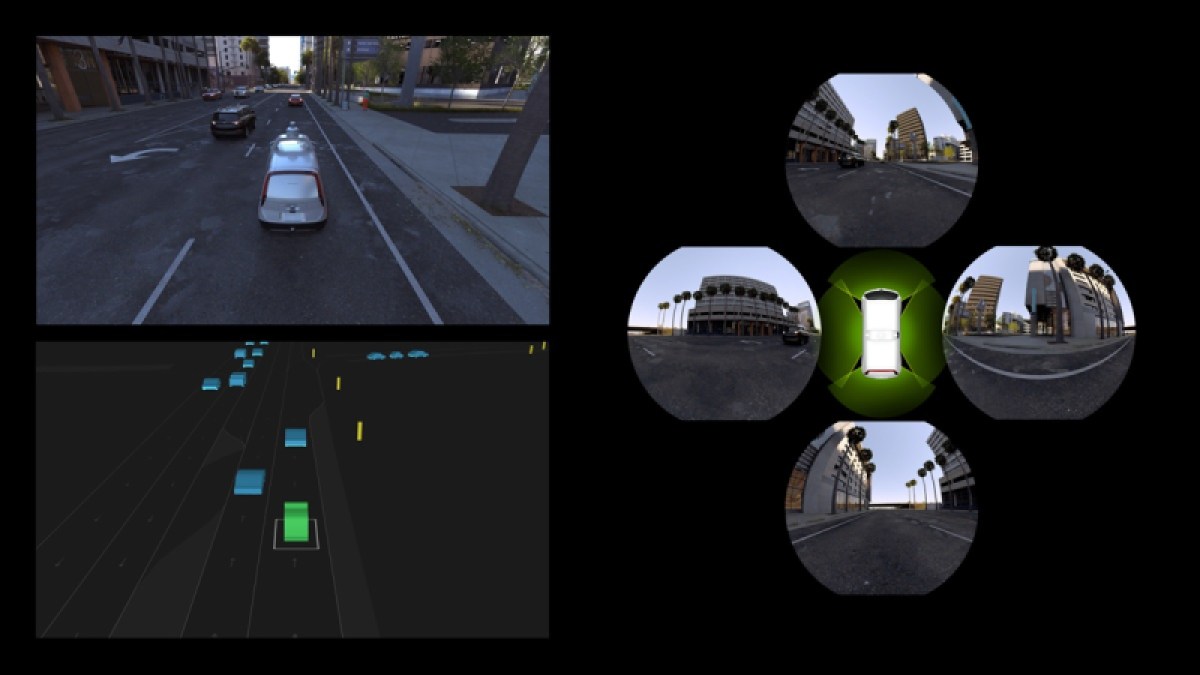

Ansys and Cadence are also leveraging Omniverse Cloud APIs to enable data interoperability and real-time visualization in their respective software solutions for autonomous vehicles and digital twin platforms.

With the demand for autonomous machines on the rise, the need for efficient end-to-end workflows has become paramount. Omniverse Cloud APIs connect simulation tools with sensor solutions, enabling developers to accelerate the development of robots, autonomous vehicles, and AI-based monitoring systems.

“The next era of industrial digitalization has arrived,” said Andy Pratt, corporate vice president at Microsoft Emerging Technologies, in a statement. “With Nvidia Omniverse APIs on Microsoft Azure, organizations across industries and around the world can connect, collaborate, and enhance their existing tools to create the next wave of AI-enabled digital twins.”

The launch of Omniverse Cloud APIs marks a pivotal moment in the digital transformation of industries worldwide. With the power of Nvidia’s AI-driven technology, companies can unlock new levels of innovation and efficiency, transforming the way they design, simulate, build, and operate in the digital age.

The industrial software makers using Omniverse Cloud APIs include Ansys, Cadence, Dassault Systèmes for its 3DExcite brand, Hexagon, Microsoft, Rockwell Automation, Siemens and Trimble.

Focus on Nvidia and Siemens

Siemens is one of the companies that is all-in on Omniverse and digital twins. The Siemens Xcelerator platform offers immersive visualization that enhances product lifecycle management. Siemens said it is adopting the new Nvidia APIs in its Siemens Xcelerator platform applications, starting with Teamcenter X.

Teamcenter X is Siemens’ cloud-based product lifecycle management (PLM) software. Enterprises of all sizes depend on Teamcenter software, part of the Siemens Xcelerator platform, to develop and deliver products at scale.

By connecting Nvidia Omniverse with Teamcenter X, Siemens will be able to provide engineering teams with the ability to make their physics-based digital twins more immersive and photorealistic, helping eliminate workflow waste and reduce errors.

Through the use of Omniverse APIs, workflows such as applying materials, lighting environments and other supporting scenery assets in physically based renderings will be dramatically accelerated using generative AI.

AI integrations will also allow engineering data to be contextualized as it would appear in the real world, allowing other stakeholders — from sales and marketing teams to decision-makers and customers — to benefit from deeper insight and understanding of real-world product appearance.

By connecting Omniverse Cloud APIs to the Xcelerator platform, Siemens will enable its customers to enhance their digital twins with physically based rendering, helping supercharge industrial-scale design and manufacturing projects. With the ability to connect generative AI APIs or agents, users can effortlessly generate 3D objects or high-dynamic range image backgrounds to view their assets in context.

This means that companies like HD Hyundai, a leader in sustainable ship manufacturing, can unify and visualize complex engineering projects directly within Teamcenter X. At Nvidia GTC, Siemens and Nvidia demonstrated how HD Hyundai could use the software to visualize digital twins of liquefied natural gas carriers, which can comprise over 7 million discrete parts, helping validate their product before moving to production.

Redesigning data centers

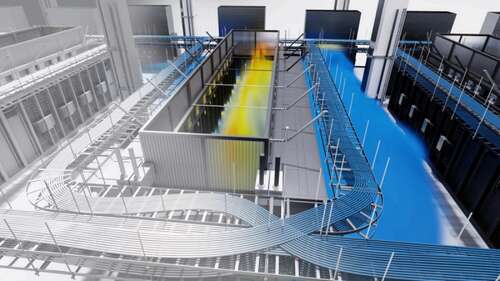

Nvidia also unveiled a digital blueprint for building next-generation data centers using Omniverse digital twins supported by Ansys, Cadence, Patch Manager, Schneider Electric, Vertiv and more.

Nvidia said that designing, simulating and bringing up modern data centers is incredibly complex, involving multiple considerations like performance, energy efficiency and scalability. It also requires bringing together a team of highly skilled engineers across compute and network design, computer-aided design (CAD) modeling, and mechanical, electrical and thermal design. I’m assuming these are the “sovereign AI” data centers that Huang has been talking about.

On the show floor, Nvidia is showing a demo of a fully operational data center, designed as a digital twin in the Nvidia Omniverse. The latest design shows advanced AI supercomputers consisting of a large cluster based on an Nvidia liquid-cooled system, with two racks, 18 Grace CPUs, and 36 Nvidia Blackwell GPUs, connected by fourth-generation Nvidia NVLink switches.

To bring up new data centers as fast as possible, Nvidia first built its digital twin with software tools connected by Omniverse. Engineers unified and visualized multiple CAD datasets in full physical accuracy and photorealism in Universal Scene Description (OpenUSD) using the Cadence Reality digital twin platform, powered by Nvidia Omniverse APIs.

The new GB200 cluster is replacing an existing cluster in one of Nvidia’s legacy data centers. To start the digital build-out, technology company Kinetic Vision scanned the facility using the NavVis VLX wearable lidar scanner to produce highly accurate point cloud data and panorama photos.

Then, Prevu3D software was used to remove the existing clusters and convert the point cloud to a 3D mesh. This provided a physically accurate 3D model of the facility, in which the new digital data center could be simulated.

Engineers combined and visualized multiple CAD datasets with enhanced precision and realism by using the Cadence Reality platform. The platform’s integration with Omniverse provided a powerful computing platform that enabled teams to develop OpenUSD-based 3D tools, workflows and applications.

Omniverse Cloud APIs also added interoperability with more tools, including Patch Manager and Nvidia Air. With Patch Manager, the team designed the physical layout of their cluster and networking infrastructure, ensuring that cabling lengths were accurate and routing was properly configured.

The demo showed how digital twins can allow users to fully test, optimize and validate data center designs before ever producing a physical system. By visualizing the performance of the data center in the digital twin, teams can better optimize their designs and plan for what-if scenarios.

Users can also enhance data center and cluster designs by balancing disparate sets of boundary conditions, such as cabling lengths, power, cooling and space, in an integrated manner — enabling engineers and design teams to bring clusters online much faster and with more efficiency and optimization than before.
