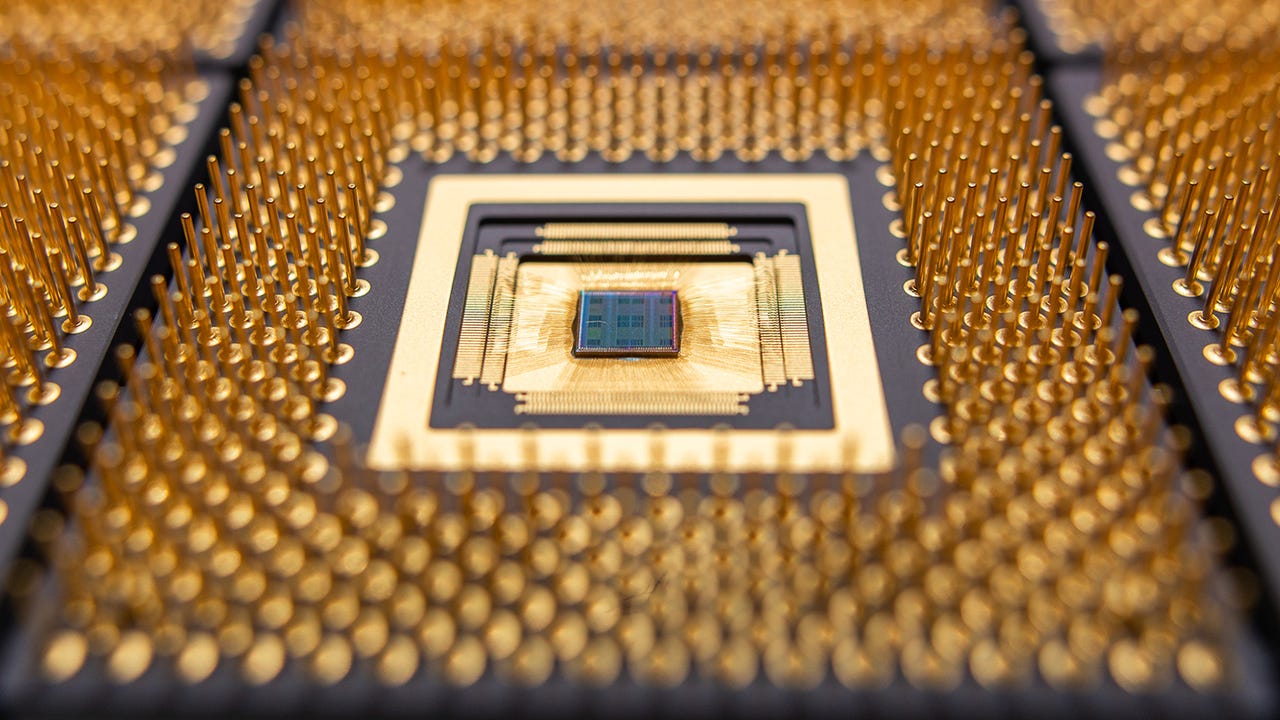

A prototype mixed digital-and-analog chip performs the most demanding portion of GenAI, the accumulate operation, in low-power analog circuitry. EnCharge AI

2024 is expected to be the year that generative artificial intelligence (GenAI) goes into production, when enterprises and consumer electronics start actually using the technology to make predictions in heavy volume — a process known as inference.

For that to happen, the very large, complex creations of OpenAI and Meta, such as ChatGPT and Llama, somehow have to be able to run in energy-constrained devices that consume far less power than the many kilowatts used in cloud data centers.

That inference challenge is inspiring fundamental research breakthroughs toward drastically more efficient electronics.

On Wednesday, semiconductor startup EnCharge AI announced that its partnership with Princeton University has received an $18.6 million grant from the US’s Defense Advanced Research Projects Agency, DARPA, to advance novel kinds of low-power circuitry that could be used in inference.

“You’re starting to deploy these models on a large scale in potentially energy-constrained environments and devices, and that’s where we see some big opportunities,” said EnCharge AI CEO and co-founder Naveen Verma, a professor in Princeton’s Department of Electrical Engineering, in an interview with ZDNET.

EnCharge AI, which has 50 employees, has raised $45 million to date from venture capital firms including VentureTech, RTX Ventures, Anzu Partners, and AlleyCorp. The company was founded on work done by Verma and his team at Princeton over roughly the past decade.

EnCharge AI is planning to sell its own accelerator chip and accompanying system boards for AI in “edge computing,” including corporate data center racks, automobiles, and personal computers.

In so doing, the company is venturing where other startups have tried and failed — to provide a solution to the inferencing problem at the edge, where size, cost, and energy-efficiency dominate.

EnCharge AI’s approach is part of a decades-long effort, known as in-memory compute (IMC), to unite logic circuits and memory circuits.

The real energy hog in computing is memory access. The cost to access data in memory circuits can be orders of magnitude more than the energy required by the logic circuits to operate on that data.

When it comes to cracking the inference market for AI, says EnCharge AI’s Naveen Verma, “Having a major differentiation in energy efficiency is an important factor.” EnCharge AI

GenAI programs consume unprecedented amounts of memory to represent the parameters, the neural “weights” of large neural networks, and tons more memory to store and retrieve the real-world data they operate on. As a result, GenAI’s energy demand is soaring.

The solution, some argue, is to perform the calculations closer to memory or even in the memory circuits themselves.

EnCharge AI received the funding as part of OPTIMA (Optimum Processing Technology Inside Memory Arrays), DARPA’s $78 million program targeted at IMC. OPTIMA is part of DARPA’s broader Electronics Resurgence Initiative, and its specifications set a goal of 300 trillion operations per second (TOPS) per watt, the critical measure of energy efficiency in computing. That would be 15 times the industry’s current state of the art.
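Because a watt is one joule per second, TOPS per watt is simply operations per joule. A back-of-the-envelope conversion of the OPTIMA target, assuming every counted operation costs the same energy (vendors count operations in different ways), looks like this:

```latex
300\ \tfrac{\text{TOPS}}{\text{W}}
  = \frac{300 \times 10^{12}\ \text{ops/s}}{1\ \text{J/s}}
  = 3 \times 10^{14}\ \tfrac{\text{ops}}{\text{J}}
\quad\Rightarrow\quad
E_{\text{op}} = \frac{1}{3 \times 10^{14}}\ \text{J} \approx 3.3\ \text{fJ per operation}

\text{implied current state of the art} \approx \frac{300\ \text{TOPS/W}}{15} = 20\ \text{TOPS/W}
```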

The key insight by Verma and other pioneers of IMC research is that AI programs are dominated by a couple of basic operations that draw on memory. Solve those memory-intense tasks, and the entire AI task can be made more efficient.

The main calculation at the heart of GenAI programs such as large language models is what’s known as a “matrix multiply-accumulate.” The processor takes one value from memory, the input, and multiplies it by another value from memory, a weight. That product is then added to lots and lots of other products computed in parallel; this summing of products is known as the “accumulate” operation.
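In code, a multiply-accumulate is just a running sum of products. The minimal Python sketch below, with a hypothetical function name and a tiny made-up matrix, computes a matrix-vector product one multiply and one accumulate at a time; real accelerators perform many of these in parallel rather than in nested loops.

```python
def matvec_mac(weights, inputs):
    """Compute y = W @ x using explicit multiply-accumulate steps."""
    outputs = []
    for row in weights:
        acc = 0                       # accumulator for one output value
        for w, x in zip(row, inputs):
            acc += w * x              # multiply, then accumulate
        outputs.append(acc)
    return outputs


W = [[1, 2, 3],
     [4, 5, 6]]
x = [1, 0, -1]
print(matvec_mac(W, x))  # [-2, -2]
```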

In the case of IMC, EnCharge AI and others aim to reduce the memory traffic in a matrix multiply-accumulate by doing some of the work in analog memory circuitry rather than in traditional digital transistors. Analog circuits can perform such multiply-accumulate operations in parallel at far lower energy than digital circuits can.

“That’s how you solve the data movement problem,” explained Verma. “You don’t communicate individual bits, you communicate this reduced result” in the form of the accumulation of lots of parallel multiplications.
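A rough way to see Verma’s point is to count what has to leave the memory array. The sketch below assumes a hypothetical array of `rows` x `cols` weights in which each column sums its own products, and it counts values rather than bits; the function name and the array size are illustrative only, not EnCharge AI’s design.

```python
def values_moved(rows, cols, reduce_in_array):
    """Count values sent out of a rows x cols weight array for one input vector.

    Each of the rows * cols weights is multiplied by one input element.
    """
    if reduce_in_array:
        return cols          # one accumulated sum per column leaves the array
    return rows * cols       # every individual product leaves the array


rows, cols = 256, 64         # hypothetical array size
print(values_moved(rows, cols, reduce_in_array=False))  # 16384
print(values_moved(rows, cols, reduce_in_array=True))   # 64
```

In this simple model, reducing inside the array cuts the outgoing traffic by a factor equal to the number of rows, which is why the accumulation step is the one worth moving into memory.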

Analog computing, however, is notoriously difficult to achieve, and the fortunes of those who have gone before EnCharge AI have not been good. The chip-industry newsletter Microprocessor Report noted that one of the most talked-about startups in analog computing for AI, Mythic Semiconductor, which received $165 million in venture capital, is now “barely hanging on.”

“How do you get analog to work? That’s the snake that bit Mythic, that’s the snake that bit researchers for decades,” observed Verma. “We’ve known for decades that analog can be 100 times more energy-efficient and 100 times more area-efficient” than digital, but “the problem is, we don’t build analog computers today because analog is noisy.”

EnCharge AI has found a way to finesse the challenges of analog. The first part is to break up the problem into smaller problems. It turns out you don’t need to do everything in analog, said Verma. It’s enough to make just the accumulate operation more efficient.

Instead of performing all of a matrix multiply-accumulate in analog, the EnCharge AI chip performs the first part, the multiplication, in normal digital circuits, that is, with transistors. Only the accumulate portion is done in analog circuits, via a layer of capacitors that sit above the digital transistors.
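The division of labor can be illustrated with a heavily simplified model. The Python below assumes 1-bit inputs and weights (so the digital multiply is a logical AND), equal-sized capacitors that each hold one product, and an ideal analog-to-digital converter; these simplifications and all function names are hypothetical and do not describe EnCharge AI’s actual circuit. An optional Gaussian noise term stands in for analog imperfections.

```python
import random


def digital_multiply(x_bits, w_bits):
    # A 1-bit multiply is a logical AND, done with ordinary digital gates.
    return [x & w for x, w in zip(x_bits, w_bits)]


def analog_accumulate(products, vdd=1.0, noise_std=0.0, rng=None):
    # Each product charges one equal-sized capacitor to vdd (1) or 0 V (0).
    # Shorting the capacitors together leaves their average voltage.
    rng = rng or random.Random(0)
    v = vdd * sum(products) / len(products)
    return v + rng.gauss(0.0, noise_std)   # optional analog noise


def adc(v, n, vdd=1.0):
    # Convert the shared voltage back to a digital count of 1-products.
    return max(0, min(n, round(v / vdd * n)))


x = [1, 0, 1, 1, 0, 1, 1, 0]
w = [1, 1, 1, 0, 0, 1, 1, 1]
p = digital_multiply(x, w)   # [1, 0, 1, 0, 0, 1, 1, 0]
v = analog_accumulate(p)     # four 1s out of eight capacitors -> 0.5
print(adc(v, len(p)))        # 4
```

With a small noise term the converter still recovers the exact count; errors only change the answer once they push the voltage past half a quantization step.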

“When you build an in-memory computing system, it’s that reduction in that accumulate [function] that really solves the data movement problem,” said Verma. “It’s the one that’s critical for memory.”

Floor plan of a 2021 prototype of the EnCharge AI chip. Compute-in-memory cores, connected by a network, divide up the work of computing a large language model or other AI program in parallel. EnCharge AI

Detail of the inside of an in-memory computing block, where analog circuits perform the “accumulation” of a neural network’s values. EnCharge AI

The second novel tack EnCharge AI has taken is a less challenging approach to analog. Instead of measuring the current of an analog circuit, an especially noisy proposition, the company uses simpler capacitors, circuits that briefly store a charge.

“If you use a capacitor, you don’t use currents, you use charge capacitors, so you add up charge and not current,” said Verma. Adding up charge is an inherently less noisy process.
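The physics behind summing charge is conservation of charge. In an idealized textbook model (an assumption that ignores parasitic capacitance and switching effects), N capacitors with capacitance C_i are each charged to a voltage V_i and then shorted together. If every capacitor is the same size and holds either 0 V or V_DD depending on a 1-bit product p_i, the shared voltage is directly proportional to the accumulated sum:

```latex
Q_{\text{total}} = \sum_{i=1}^{N} C_i V_i,
\qquad
V_{\text{out}} = \frac{\sum_{i=1}^{N} C_i V_i}{\sum_{i=1}^{N} C_i}

C_i = C,\quad V_i = V_{DD}\,p_i,\quad p_i \in \{0, 1\}
\quad\Rightarrow\quad
V_{\text{out}} = \frac{V_{DD}}{N} \sum_{i=1}^{N} p_i
```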

Using capacitors is also more economical than prior approaches to analog, which required exotic manufacturing techniques. Capacitors are essentially free, said Verma, in the sense that they are part of normal semiconductor manufacturing. The capacitors are made from the ordinary metal layers used to interconnect transistors.

“What’s important about these metal wires,” said Verma, “is they don’t have any material parameter dependencies like [carrier] mobility,” as do other memory circuits, such as those used by Mythic. “They don’t have any temperature dependencies, they don’t have any nonlinearities, they only depend on geometry — basically, how far apart those metal wires are.
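Why geometry dominates can be seen with the idealized parallel-plate formula, an assumption here since the article does not describe the exact shape of EnCharge AI’s metal capacitors. A_i is the overlapping wire area, d_i the spacing, and ε the permittivity of the insulator between the wires. When charge is shared among the capacitors, the output is a capacitance-weighted average, and if all capacitors share the same insulator, ε cancels, leaving only ratios of geometry:

```latex
C_i = \frac{\varepsilon A_i}{d_i}
\quad\Rightarrow\quad
V_{\text{out}}
  = \frac{\sum_i C_i V_i}{\sum_i C_i}
  = \frac{\sum_i (A_i / d_i)\, V_i}{\sum_i (A_i / d_i)}
```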

“And, it turns out, geometry is the one thing you can control very, very well in advanced CMOS technologies,” he said, referring to complementary metal-oxide semiconductor technology, the most common kind of silicon computer chip manufacturing technology.

All the data fed to the digital circuits for matrix multiplication and to the capacitors for accumulation comes from a standard SRAM memory circuit embedded in the chip as a local cache. Smart software designed by Verma and team organizes which data will be put in the caches so that the most relevant values are always near the multiply transistors and the accumulate capacitors.
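The article does not describe how EnCharge AI’s software decides what goes into the SRAM, but a standard technique with the same goal is loop tiling: breaking a large matrix multiply into small blocks so that each block’s data is reused many times while it sits in fast local memory. A minimal Python sketch, with a hypothetical function name and tile size:

```python
def tiled_matmul(A, B, tile=2):
    """Return A @ B, computed one tile x tile block at a time.

    Each block step touches only small pieces of A, B and C, the kind of
    working set a small on-chip SRAM could hold (tile size is hypothetical).
    """
    n, m, p = len(A), len(B), len(B[0])
    if any(len(row) != m for row in A):
        raise ValueError("inner dimensions of A and B must match")
    C = [[0] * p for _ in range(n)]
    for i0 in range(0, n, tile):          # block of output rows
        for j0 in range(0, p, tile):      # block of output columns
            for k0 in range(0, m, tile):  # block of the shared dimension
                for i in range(i0, min(i0 + tile, n)):
                    for k in range(k0, min(k0 + tile, m)):
                        a = A[i][k]       # reused across the whole j loop
                        for j in range(j0, min(j0 + tile, p)):
                            C[i][j] += a * B[k][j]
    return C


A = [[1, 2, 3],
     [4, 5, 6]]
B = [[7, 8],
     [9, 10],
     [11, 12]]
print(tiled_matmul(A, B))  # [[58, 64], [139, 154]]
```

The result is identical for any tile size; what changes is how much data must be resident at once, which is the quantity a cache-aware scheduler tries to keep small.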

So far, the prototypes have already shown a striking improvement in energy efficiency. EnCharge AI has demonstrated 150 trillion operations per second per watt (150 TOPS per watt) when handling neural network inference values that have been quantized to eight bits. Prior approaches to inference, such as Mythic’s, have reached at most tens of TOPS per watt. EnCharge AI describes its chips as being “30x” more efficient than prior efforts.
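Quantizing to eight bits means representing each value as a small integer plus a shared scale factor. The article does not say which quantization scheme EnCharge AI uses; the sketch below shows one common choice, symmetric per-tensor quantization, with hypothetical function names and made-up weights.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # float size of one integer step
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map 8-bit integers back to approximate float values."""
    return [qi * scale for qi in q]


weights = [0.5, -1.27, 0.02, 1.0]
q, scale = quantize_int8(weights)
print(q)                     # [50, -127, 2, 100]
print(dequantize(q, scale))  # values within half a step of the originals
```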

“The best accelerators from Nvidia or Qualcomm are, kind of, at 5, maybe 10, max, TOPS per watt for eight-bit compute,” said Verma.
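Taking the quoted figures at face value, the ratio between EnCharge AI’s 150 TOPS per watt and Verma’s range for the best Nvidia and Qualcomm accelerators works out as follows. The article does not say which baseline the company’s “30x” claim uses, so this is only a consistency check:

```latex
\frac{150\ \text{TOPS/W}}{5\ \text{TOPS/W}} = 30,
\qquad
\frac{150\ \text{TOPS/W}}{10\ \text{TOPS/W}} = 15
```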

On top of that efficiency breakthrough, smart software solves a second issue, that of scale. To handle very large models of the kind OpenAI and others are building, which are scaling to trillions of neural network weights, there will never be enough memory in an SRAM cache on-chip to hold all of the data. So, the software “virtualizes” access to memory off-chip, such as in DRAM, by efficiently orchestrating what data is kept where, on and off-chip.
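A generic illustration of software-managed memory, not EnCharge AI’s scheme, is a small cache of weight tiles backed by a larger off-chip store. The sketch below swaps in a least-recently-used (LRU) eviction policy as an assumption; the class name, tile IDs, and capacity are hypothetical, and a real accelerator compiler would likely schedule transfers ahead of time rather than react to misses.

```python
from collections import OrderedDict


class TileCache:
    """Tiny software-managed cache: a few 'SRAM' slots backed by 'DRAM'."""

    def __init__(self, dram, capacity):
        self.dram = dram            # dict tile_id -> data, stand-in for off-chip memory
        self.capacity = capacity    # how many tiles fit on chip
        self.sram = OrderedDict()   # tile_id -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def get(self, tile_id):
        if tile_id in self.sram:
            self.hits += 1
            self.sram.move_to_end(tile_id)          # mark as most recently used
        else:
            self.misses += 1
            if len(self.sram) >= self.capacity:
                self.sram.popitem(last=False)       # evict least recently used tile
            self.sram[tile_id] = self.dram[tile_id] # copy in from "DRAM"
        return self.sram[tile_id]


dram = {i: f"weights-{i}" for i in range(4)}
cache = TileCache(dram, capacity=2)
for t in [0, 1, 0, 1, 2, 0]:
    cache.get(t)
print(cache.hits, cache.misses)  # 2 4
```

Every miss stands for a slow, energy-hungry trip to DRAM, so the software’s job is to order the work so that hits dominate.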

“You have the benefit of all of this larger, high-density memory, all the way out to DRAM, and yet, because of the ways that you manage data movement between these [memories], it all looks like first-level memories in terms of efficiency and speed,” said Verma.

EnCharge AI, which was formally spun out of Verma’s lab in 2022, has produced multiple samples of increasing complexity using the capacitor approach. “We spent a good amount of time understanding it, and building it from a fundamental technology to a full architecture to a full software stack,” said Verma.

Details of a first product will be announced later this year, said Verma. Although initial products will focus on the inference opportunity, the capacitor-based IMC approach can extend to training as well, insisted Verma. “There’s no reason, fundamentally, why our technology can’t do training, but there’s a whole lot of software work that has to be done to make that work,” he said.

Of course, marketplace factors can often hold back novel solutions: numerous startups, such as SambaNova Systems and Graphcore, have failed to make headway against Nvidia in the AI training market despite the virtues of their inventions.

Even Cerebras Systems, which has announced very large sales of its training computer, hasn’t dented Nvidia’s momentum.

Verma believes the challenges in inference will make the market something of a different story. “The factors that are going to determine where there’s value in products here are going to be different than what they have been in that training space,” said Verma. “Having a major differentiation in energy efficiency is an important factor here.”

“I don’t think it’s going to be CUDA” that dominates, he said, referring to Nvidia’s software, as formidable as it is. “I think it’s going to be, you need to deploy these models at scale, they need to run in very energy-constrained environments, or in very economically efficient environments — those metrics are going to be what’s critical here.”

To be sure, added Verma, “Making sure that the overall solution is usable, very transparently usable, the way that Nvidia has done, is also going to be an important factor for EnCharge AI to win and be successful here.”

EnCharge AI plans to pursue further financing. Semiconductor companies typically require hundreds of millions of dollars in financing, a fact of which Verma is very much aware.

“What’s driven all of our fundraising has been customer traction, and delivering on customer needs,” said Verma. “We find ourselves in a situation where the traction of the customers is accelerating, and we will need to ensure that we’re capitalized properly to be able to do that, and the fact that we have some of that traction definitely means we’ll be back up on the block soon.”