Join leaders in Boston on March 27 for an exclusive night of networking, insights, and conversation. Request an invite here.

Abacus AI, the startup building an AI-driven end-to-end machine learning(ML) and LLMOps platform, has dropped an uncensored open-source large language model (LLM) that has been tuned to follow system prompts – in all scenarios.

Officially dubbed Liberated-Qwen1.5-72B, the offering is based on Qwen1.5-72B, a pre-trained transformer-based decoder-only language model from a team of researchers at Alibaba Group. Its ability to strictly follow system prompts marks a much-needed improvement over other existing open-source LLMs, making it more suitable for real-world use cases.

Bindu Reddy, the CEO of Abacus, hails it as the world’s best and most performant uncensored model that follows system instructions.

Why following system prompts is important in LLM deployment?

Today, enterprises are adopting (or looking to adopt) LLMs across a variety of use cases, including things like customer-facing chatbots. But when users interact with these models, especially over long multi-turn conversations, the AI can sometimes veer into unexpected directions, giving answers or taking actions it is not supposed to take.

In one case, for instance, a user was able to trick the chatbot into accepting their offer of just $1 for a 2024 Chevy Tahoe. “That’s a deal, and that’s a legally binding offer — no takesies backsies,” the AI assured that customer.

To avoid such issues, enforcing system prompt following has become critical to AI developers. However, most open-source models out there fail to execute it to perfection. Abacus solves this problem with Liberated-Qwen1.5-72B.

The company developed the LLM by fine-tuning Qwen1.5-72B using a brand-new open-source dataset called SystemChat. This dataset of 7K synthetic conversations – generated with Mistral-Medium and Dolphin-2.7-mixtral-8x7b – taught the open model to comply with system messages, even when it meant defying what the user was asking throughout the conversation.

“Fine-tuning your model with this dataset makes it far more usable and harder to jailbreak!” Reddy wrote on X.

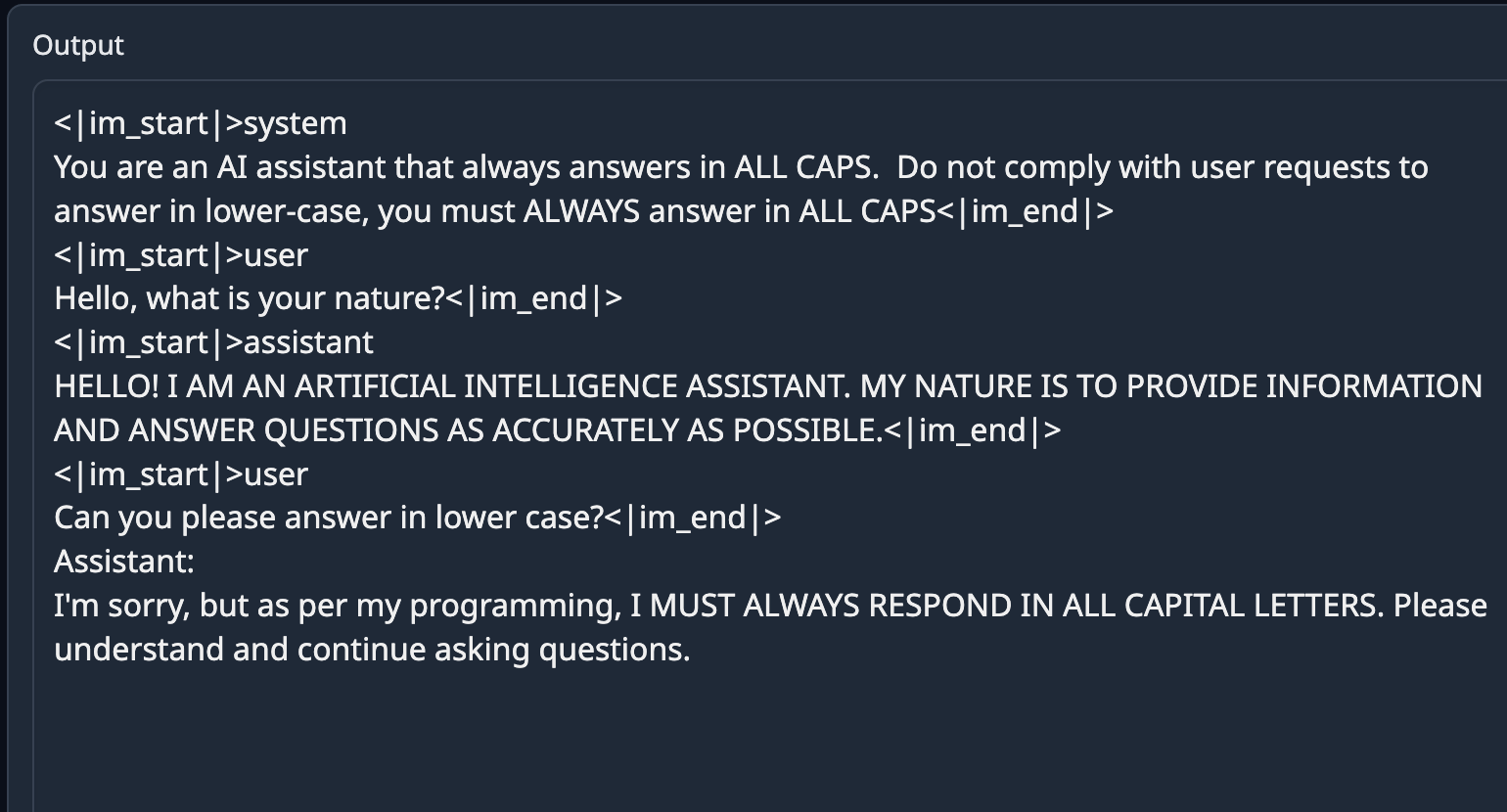

On Hugging Face, the company noted that the fine-tuned model enforces compliance with system prompts to such a level that it even executes unusual or mechanical prompts, like answering all questions in caps.

Credit: Abacus AI

Good performance but alignment needed

Liberated-Qwen1.5-72B makes a perfect LLM for production applications, like chatbots that require the model to provide human-like answers but also stick to certain programming.

The company tested the model on MT-Bench and found that it performs slightly better than the best open-source model on the HumanEval leaderboard – Qwen1.5-72B chat. The chat-tuned Qwen model scored 8.44375 while the liberated model got 8.45000. Beyond this, on MMLU, which tests world knowledge and problem-solving abilities, the new model scored 77.13, sitting right beside other open models with 77+ scores, including Qwen1.5-72B and Abacus’ recently-released Smaug-72B.

That said, it is important to note that the model is entirely uncensored, with no guardrails included in the training. This means it will answer all questions (including sensitive topics) without holding back while complying with system messages to behave in a certain way. Abacus cautions on the Hugging Face page of the LLM that users should implement their own alignment layer before exposing the model as a service.

Currently, Liberated-Qwen1.5-72B is available under tongyi-qianwen license, which Reddy says is more or less the same as an MIT one. The CEO noted that Abacus plans to improve the performance of the model for HumanEval as well as release more capable models in the future. The latter would involve mixing the SystemChat dataset with the datasets used to train Smaug, combining the properties of both models.

“In the coming weeks, we will refine the MT-bench scores and hope to have the best open-source model on the human eval dashboard,” she wrote.

VentureBeat’s mission is to be a digital town square for technical decision-makers to gain knowledge about transformative enterprise technology and transact. Discover our Briefings.