A hot potato: As generative AI becomes increasingly advanced and accessible, more fake images are appearing on social networks alongside claims that they are authentic. Meta says it will combat this problem by detecting and labeling AI images posted to its platforms – Facebook, Instagram, and Threads – even when they are created by rival services.

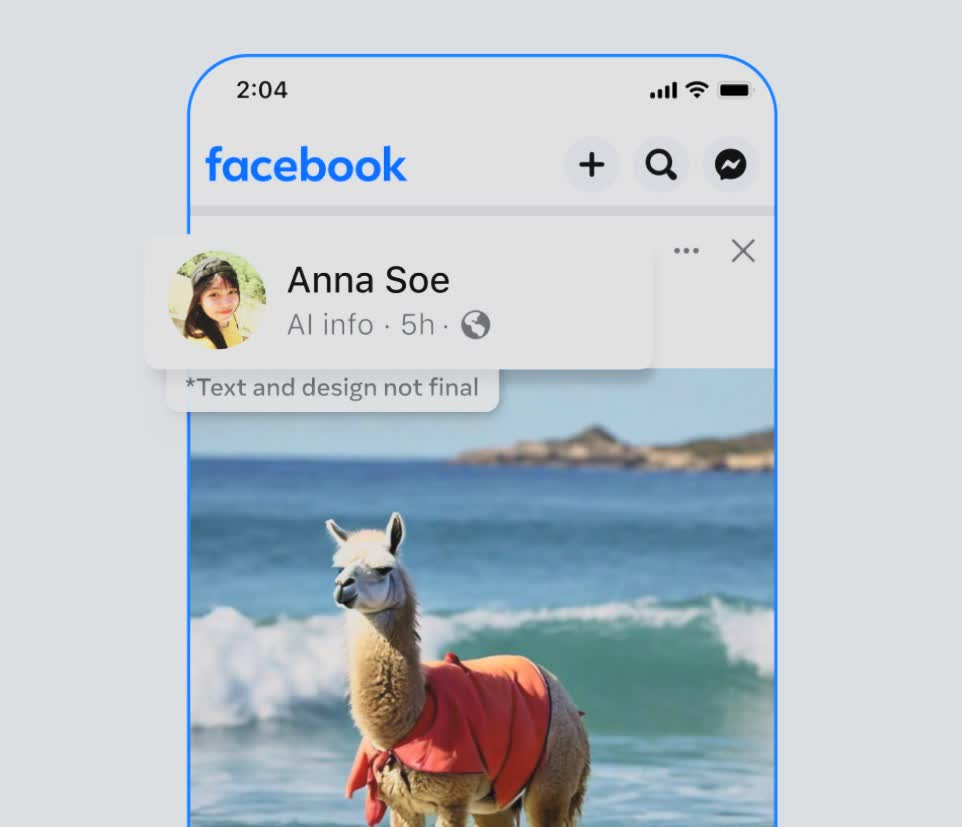

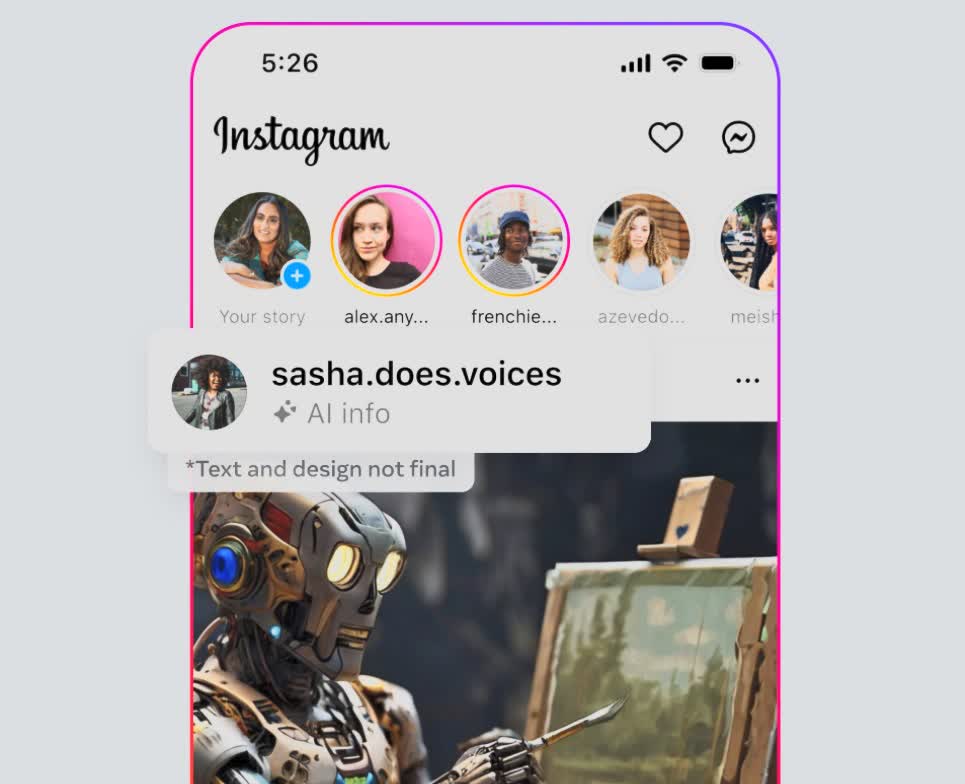

Meta already applies “Imagined with AI” labels to photorealistic images created by its own AI tool, and it wants to do the same for content created by other generative AI services, writes Nick Clegg, Meta’s President of Global Affairs.

Photorealistic images created with Meta’s AI tool include visible markers, invisible watermarks, and metadata embedded within the image files to help platforms identify them as being AI-generated.

Clegg writes that Meta has been working with other companies to develop common standards for identifying AI-generated content. The social media giant is building tools that identify invisible markers at scale so that images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock, all of which are working to add metadata to the images they generate, can be identified and labeled when posted to a Meta platform.

“People are often coming across AI-generated content for the first time and our users have told us they appreciate transparency around this new technology. So it’s important that we help people know when photorealistic content they’re seeing has been created using AI,” Clegg wrote.

Meta is building its capability to identify AI-generated images now, and will start applying labels in all languages across its apps in the coming months.

Clegg said that identifying audio and video content created by AI is proving more difficult because the process of adding markers to it is complicated. However, Meta is adding a feature that will allow users to disclose when they share AI-generated video or audio so the company can add a label to it. Users must also use this disclosure tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered. Meta “may apply penalties if they fail to do so.”

Clegg said any digitally created or altered images, video, or audio that “creates a particularly high risk of materially deceiving the public on a matter of importance” will receive a more prominent label.

Meta is also working to develop classifiers that can help it automatically detect AI-generated content, even if that content lacks invisible markers. In addition, it is looking at ways of making invisible watermarks more difficult to alter or remove.

More companies are pushing for ways to identify misleading AI-generated content as the 2024 US presidential election approaches. In September 2023, Microsoft warned of AI imagery from China being used to influence US voters.

More recently, a robocall went out to New Hampshire residents that included a 39-second message spoken by what sounded like President Biden telling them not to vote. It was likely created using a text-to-speech engine made by ElevenLabs, and the incident led the FCC to vote to outlaw AI-generated voices in robocalls.