Key Takeaways

- AI poisons alter training data to render it useless or damage AI models, acting as a form of protest or self-protection by artists.

- These poisons can prevent artists’ work from being used and protect their art style and intellectual rights.

- AI poisons may be too late to achieve their goals since existing models won’t be affected and alternative “ethical” datasets are emerging.

Science-fiction stories have led us to believe that the war between humans and AI would involve more explosions, but in reality the fight against our synthetic friends has taken a much more subtle, but perhaps equally effective turn.

What Is AI Poison?

Generative AI programs such as GPT or DALL-E develop their ability to create writing, music, video, and images by learning from absolutely massive datasets.

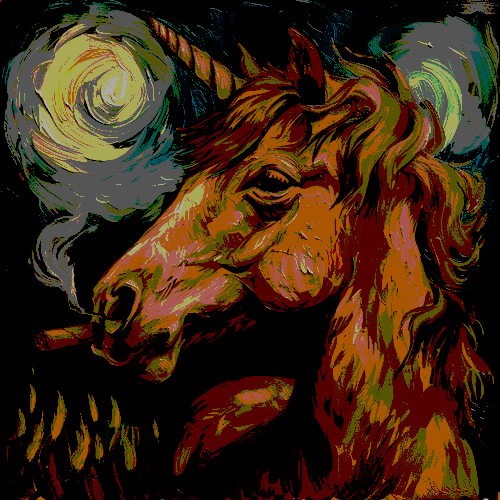

This is how a program like MidJourney can create any arbitrary image you ask for, like “A cigar-smoking unicorn in the style of Van Gogh” or “A robot drinking poison” as I somewhat ironically asked MidJourney to draw. This is certainly an amazing ability for a computer program to have, and the results have improved by leaps and bounds.

However, many of the artists whose work has been used as part of that dataset aren’t happy about it. The problem is that, as of this writing at least, using that imagery to train AI models is either in a legal gray area, or isn’t prohibited by law, depending on the region. As usual, legislation will take some time to catch up to technology, but in the meantime a potential solution in the form of “AI poison” has emerged.

An AI poison alters something that might be used to train an AI model in such a way that it either makes the data worthless or even hurts the model, making it malfunction. The changes made to the source material are subtle enough that a human wouldn’t notice them. This is where tools like Nightshade and Glaze come into the picture.

Imagine you have a picture of a dog. An AI poison tool might alter the image’s pixels in such a way that, to us, the picture still looks like a dog, but to an AI trained on this “poisoned” image, the dog might be associated with something entirely different, like a cat or a random object. This is akin to teaching the AI the wrong information on purpose.
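To make the “a human wouldn’t notice” part concrete, here is a minimal Python sketch of the one constraint described above: every pixel is allowed to change only by a tiny amount. It is an illustration, not how Nightshade or Glaze actually work. The function name `perturb`, the `epsilon` limit, and the sample pixel values are all made up for this example, and plain random noise stands in for the carefully computed changes a real tool would make.

```python
import random

def perturb(pixels, epsilon=4, seed=0):
    """Return a copy of `pixels` with each value shifted by at most `epsilon`.

    `pixels` is a flat list of 0-255 grayscale values. Random noise is only a
    stand-in here; a real poisoning tool would choose the changes deliberately.
    """
    rng = random.Random(seed)
    out = []
    for p in pixels:
        delta = rng.randint(-epsilon, epsilon)   # small change, positive or negative
        out.append(min(255, max(0, p + delta)))  # keep the result a valid pixel value
    return out

original = [120, 121, 119, 200, 35, 0, 255, 128]  # hypothetical pixel values
poisoned = perturb(original)

# The largest change to any single pixel never exceeds epsilon (4 out of 255).
max_change = max(abs(a - b) for a, b in zip(original, poisoned))
print(max_change)
```

A shift of a few brightness levels out of 255 is far below what most people can spot, which is why the picture still looks like the same dog to us.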

When an AI model is trained on enough of these “poisoned” images, its ability to generate accurate or coherent images based on its training data is compromised. The AI might start producing distorted images or completely incorrect interpretations of certain prompts.
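The “enough of these images” part can be shown with a toy model. The sketch below is a deliberate oversimplification and assumes nothing about how real image generators are built: each image is reduced to two made-up numbers, and the “model” simply learns the average of everything labeled “dog”. All names and values (`learned_dog_concept`, `TRUE_DOG`, `TRUE_CAT`, the sample points) are hypothetical.

```python
def centroid(points):
    """Average a list of equal-length feature tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def dist2(a, b):
    """Squared distance between two feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

TRUE_DOG = (1.0, 1.0)  # where genuine dog images sit in this toy feature space
TRUE_CAT = (5.0, 5.0)  # where genuine cat images sit

clean_dogs = [(1.0, 1.2), (0.8, 1.0), (1.2, 0.9)]
# Poisoned images: labeled "dog" and dog-like to a person, but their
# features have been pushed toward the cat region.
poisoned_dogs = [(5.1, 4.9), (4.8, 5.2), (5.0, 5.1), (4.9, 5.0), (5.2, 4.8)]

def learned_dog_concept(n_poisoned):
    """What the toy model learns 'dog' means after seeing n poisoned images."""
    return centroid(clean_dogs + poisoned_dogs[:n_poisoned])

for n in (0, 1, 5):
    concept = learned_dog_concept(n)
    closer_to = "dog" if dist2(concept, TRUE_DOG) < dist2(concept, TRUE_CAT) else "cat"
    print(n, "poisoned images -> learned 'dog' is closer to", closer_to)
```

With zero or one poisoned image, the toy model’s idea of “dog” stays close to real dogs. With five, it lands nearer to cats, which is the kind of drift that would show up as wrong or distorted output.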

Why Do AI Poisons Exist?

As I mentioned briefly above, AI poison tools exist to give artists (in the case of visual content) a way to fight back against having their images used to train AI models. There are a few reasons why they’re concerned, including but not limited to:

- Fear of being replaced by or losing work to AI.

- Having their unique art style and identity replicated.

- Feeling that their intellectual rights have been infringed, regardless of the current legalities that apply.

Given the nature of the internet, and how easy it is to “scrape” data from the web, these artists are powerless to prevent their images from being included in datasets. However, by “poisoning” them, they can not only prevent their art from being used but also potentially damage the model as a whole.

So these poisons could be seen both as a practical form of self-protection and as a form of protest.

Is This Too Little Too Late?

While the idea of using AI poisons to prevent AI art models from using certain images is interesting, it may be too late for them to achieve the goals that drove their invention in the first place.

First, these poisons won’t affect any image generation models that already exist, so any abilities those models already have are here to stay. The poisons only affect the training of future models.

Second, AI researchers have learned a lot during the initial phases of training these models. There are now approaches to training models that can work with much smaller datasets. They don’t need the massive number of images they did before, and scraping massive amounts of data from the web may not be standard practice going forward.

Third, now that the models already exist, it’s possible to train them on “ethical” datasets, in other words, datasets that come with explicit permission or rights to be used to train AI. This is what Adobe has attempted with Firefly and its “ethically sourced” data.

So while AI poisons can certainly prevent models from including your work, or make datasets scraped from the web as a whole useless, they’re unlikely to do anything about the advancement and implementation of the technology from this point onward.