Two months after being fired by the OpenAI nonprofit board (only to be rehired a few days later), CEO Sam Altman has displayed a noticeably softened — and far less prophetic — tone at the World Economic Forum in Davos, Switzerland about the idea that powerful artificial general intelligence (AGI) will arrive suddenly and radically disrupt society.

For example, in a conversation today with Microsoft CEO Satya Nadella — their first joint public appearance since the OpenAI drama — and The Economist editor-in-chief Zanny Minton Beddoes, Altman said that “I don’t think anybody agrees anymore what AGI means.”

“When we reach AGI,” he added, “the world will freak out for two weeks and then humans will go back to do human things.”

He added that AGI would be a “surprisingly continuous thing,” where “every year we put out a new model [and] it’s a lot better than the year before.”

This followed comments Altman made in a conversation organized by Bloomberg, in which he said AGI could be developed in the “reasonably close-ish future,” but “will change the world much less than we all think and it will change jobs much less than we all think.”

Altman had previously written about the ‘grievous harm’ of AGI

In an OpenAI blog post in February 2023, ‘Planning for AGI and Beyond,’ Altman wrote that “a misaligned superintelligent AGI could cause grievous harm to the world; an autocratic regime with a decisive superintelligence lead could do that too.” He also wrote that “we expect powerful AI to make the rate of progress in the world much faster, and we think it’s better to adjust to this incrementally.”

Yet that post also took a grandiose tone when discussing the potential of AGI: “If AGI is successfully created, this technology could help us elevate humanity by increasing abundance, turbocharging the global economy, and aiding in the discovery of new scientific knowledge that changes the limits of possibility.”

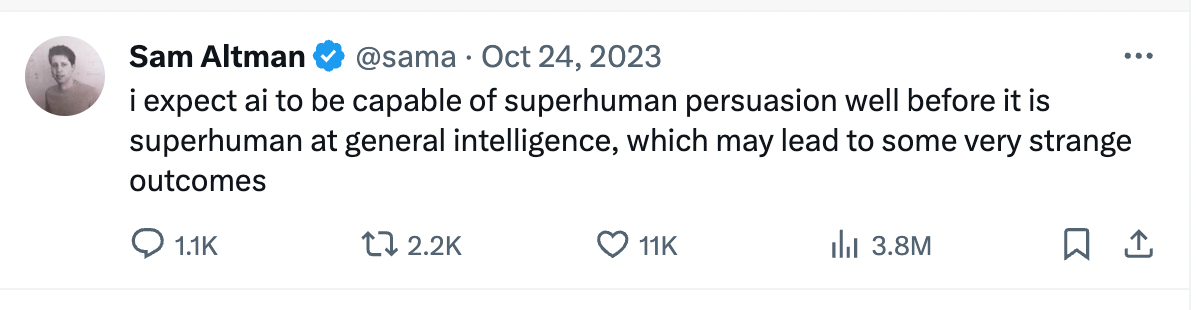

Altman also posted cryptic messages about the potential of AGI as recently as October 2023, when he wrote “I expect AI to be capable of superhuman persuasion well before it is superhuman at general intelligence, which may lead to some very strange outcomes.”

Or there was the time back in August 2023, when he posted this:

Not only do Altman’s latest, more measured AGI comments come at Davos, with its economic, rather than futuristic, focus, but they also come after the OpenAI drama around his temporary firing that led to the dissolution of the company’s board, which was laser-focused on the dangers of a powerful AGI and set up to rein such technology in if necessary.

As VentureBeat reported in the week before Altman’s firing, OpenAI’s charter says that its nonprofit board of directors will determine when the company has “attained AGI,” which it defines as “a highly autonomous system that outperforms humans at most economically valuable work.” Thanks to a for-profit arm that is “legally bound to pursue the Nonprofit’s mission,” once the board decides AGI has been reached, such a system will be “excluded from IP licenses and other commercial terms with Microsoft, which only apply to pre-AGI technology.”

Most of the board members who held that responsibility to determine whether OpenAI had attained AGI are gone now — president Greg Brockman is no longer on the board and neither is former chief scientist Ilya Sutskever (whose role at OpenAI remains unclear), and of the non-employee board members, only Adam D’Angelo remains (Tasha McCauley and Helen Toner are out).

It is notable that while an OpenAI spokesperson told VentureBeat that “None of our board members are effective altruists,” D’Angelo, McCauley and Toner all had ties to the effective altruism (EA) movement, and Altman acknowledged McCauley, Toner and Holden Karnofsky, co-founder of EA funder Open Philanthropy, in the February 2023 ‘Planning for AGI and Beyond’ blog post. EA has long considered preventing what its adherents say are catastrophic risks to humanity from future AGI one of its top priorities.

But now, the OpenAI board looks very different: besides D’Angelo, it includes former Treasury Secretary Larry Summers and former Salesforce co-CEO Bret Taylor. OpenAI’s biggest investor, Microsoft, now has a nonvoting board seat. And The Information has reported on D’Angelo’s efforts to bring in new members, including approaching Scale CEO Alexandr Wang and Databricks CEO Ali Ghodsi.

But in the conversation with Altman and Nadella, The Economist’s editor-in-chief Zanny Minton Beddoes pushed back on Altman’s AGI comments and cited OpenAI’s unchanged charter regarding its board of directors.

“I’m gonna push you a bit on this because I think it matters a little bit for the relationship between your two companies, because correct me if I’m wrong, but I think the OpenAI charter says that your board will determine when you’ve hit AGI and once you’ve had AGI your commercial terms no longer apply to AGI — so all of the relationships you’ve got right now are kind of out the window,” said Minton Beddoes.

Altman replied in a breezy way that what the OpenAI charter says is that there is a point “where our board can sit down and there’s like a moment to reconsider.” He said “we hope that we continue to commercialize stuff, but depending on how things go — and this is where the ‘no one knows what happens next’ is important.”

The point is, he added, “our board will need a time to sit down.”

Microsoft’s Nadella, for his part, appeared unconcerned, saying that the two companies needed to put restraints into their partnerships to make sure that a future AGI amplified the benefits of the technology and dampened any unintended consequences. In addition, he said “the world is very different” now, with no one waiting to see whether Microsoft or OpenAI talk about AGI. “Governments of the world are interested, civic society is interested,” he said. “They are going to have a say.”

To be clear, said Minton Beddoes to Altman and Nadella, “You’re not worried that a future OpenAI board could suddenly say we’ve reached AGI? You’ve had some surprises from the OpenAI board.”

Altman responded with a self-deprecating joke about the November drama that led to his firing and reinstatement: “I believe in learning lessons early,” he said, “when the stakes are relatively low.”
